Graph Attention Networks (GAT)

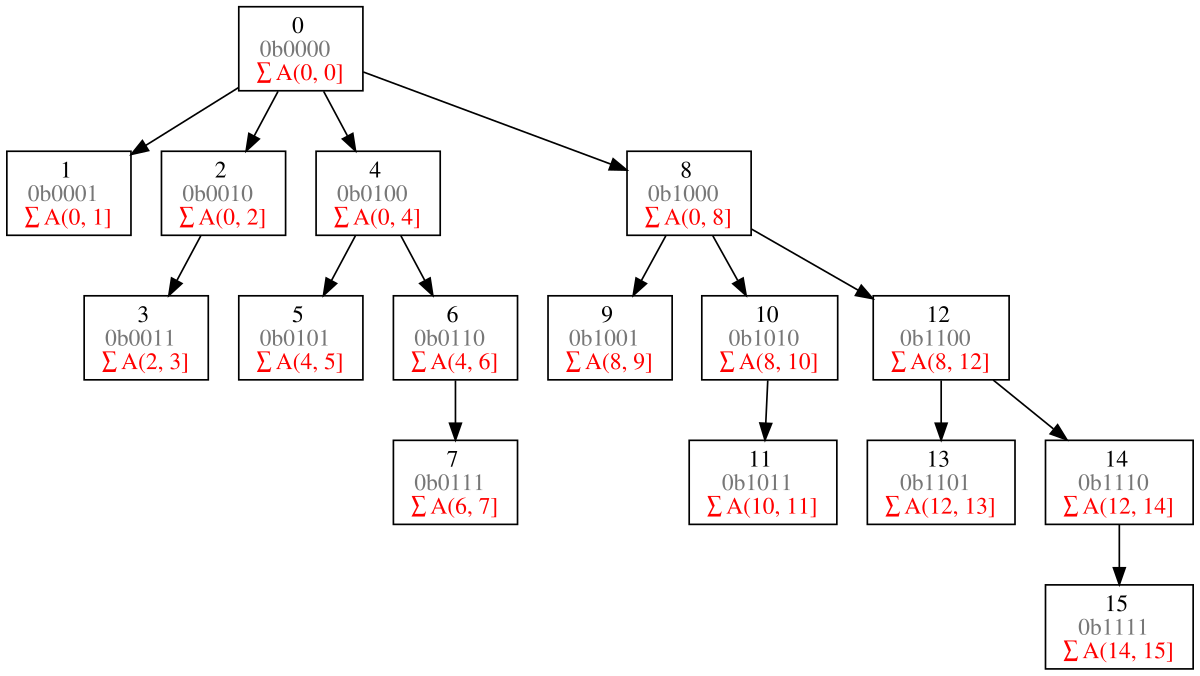

GATs work on graph data. A graph consists of nodes and edges connecting the nodes. For example, in the Cora dataset the nodes are research papers and the edges are citations that connect the papers.

GAT uses masked self-attention, similar to transformers. A GAT consists of graph attention layers stacked on top of each other. Each graph attention layer takes node embeddings as input and outputs transformed embeddings. Each node's embedding attends to the embeddings of the nodes it is connected to. The details of graph attention layers are included alongside the implementation.
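To make "masked" concrete, here is a minimal NumPy sketch of attention restricted to connected nodes. It is an illustration under stated assumptions, not the layer's actual implementation: the names `masked_attention`, `h`, `scores` and `adj_mat` are hypothetical, the scores are supplied by the caller rather than learned, the linear transformation of the embeddings is left out, and every node is assumed to have at least one neighbour (for example through a self-loop).

```python
import numpy as np


def masked_attention(h, scores, adj_mat):
    """Aggregate neighbour embeddings with masked softmax attention.

    h:       [N, F] node embeddings
    scores:  [N, N] unnormalized attention scores, scores[i, j] for the pair (i, j)
    adj_mat: [N, N] boolean, adj_mat[i, j] is True if there is an edge from i to j
    Assumes every row of adj_mat has at least one True entry.
    """
    # Nodes that are not connected get a score of -inf, i.e. zero weight after softmax
    masked = np.where(adj_mat, scores, -np.inf)
    # Softmax over j, shifted by the row maximum for numerical stability
    masked = masked - masked.max(axis=1, keepdims=True)
    weights = np.exp(masked)
    weights = weights / weights.sum(axis=1, keepdims=True)
    # Each output row is a weighted average of the embeddings of connected nodes
    return weights @ h


# Toy example: 3 nodes, 2 features, self-loops included in the adjacency matrix
h = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
scores = np.zeros((3, 3))  # equal scores, so attention is uniform over neighbours
adj_mat = np.array([[True, True, False],
                    [False, True, False],
                    [True, True, True]])
out = masked_attention(h, scores, adj_mat)
```

With equal scores, each node simply averages the embeddings of the nodes it is connected to; learned scores would reweight that average.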

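A graph attention layer takes $\mathbf{h} = \{ \overrightarrow{h_1}, \overrightarrow{h_2}, \dots, \overrightarrow{h_N} \}$, where $\overrightarrow{h_i} \in \mathbb{R}^F$, as input and outputs $\mathbf{h'} = \{ \overrightarrow{h'_1}, \overrightarrow{h'_2}, \dots, \overrightarrow{h'_N} \}$, where $\overrightarrow{h'_i} \in \mathbb{R}^{F'}$.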

The adjacency matrix represents the edges (or connections) among nodes. `adj_mat[i][j]` is `True` if there is an edge from node `i` to node `j`.
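As an illustration, such a matrix could be built from an edge list as sketched below. The helper `build_adj_mat`, its `add_self_loops` option, and the toy edge list are assumptions made for this example only.

```python
import numpy as np


def build_adj_mat(n_nodes, edges, add_self_loops=False):
    """Return a boolean [n_nodes, n_nodes] matrix where entry [i][j] is True
    if there is an edge from node i to node j."""
    adj_mat = np.zeros((n_nodes, n_nodes), dtype=bool)
    for i, j in edges:
        adj_mat[i][j] = True
    if add_self_loops:
        # Optionally connect every node to itself
        adj_mat |= np.eye(n_nodes, dtype=bool)
    return adj_mat


# Hypothetical citation graph: paper 0 cites papers 1 and 2, paper 2 cites paper 1
edges = [(0, 1), (0, 2), (2, 1)]
adj_mat = build_adj_mat(3, edges)

# Indices j with an edge from node 0 to node j
neighbors_of_0 = np.flatnonzero(adj_mat[0])
```

Edges are directed here, so `adj_mat[0][1]` is `True` while `adj_mat[1][0]` is `False`; for an undirected graph one would set both entries.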

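$a$ is the attention mechanism that calculates the attention score. The paper concatenates the transformed embeddings $\overrightarrow{g_i}$ and $\overrightarrow{g_j}$ and applies a linear transformation with a weight vector $\mathbf{a} \in \mathbb{R}^{2 F'}$, followed by a $\text{LeakyReLU}$, so that $e_{ij} = \text{LeakyReLU} \Big( \mathbf{a}^\top \Big[ \overrightarrow{g_i} \Vert \overrightarrow{g_j} \Big] \Big)$.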
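The scoring step can be sketched in NumPy as follows. This is a minimal illustration rather than the layer's implementation: `attention_scores` and `leaky_relu` are hypothetical helpers, `g` is assumed to already hold the transformed embeddings with shape `[N, F']`, and the negative slope of 0.2 is an assumed default.

```python
import numpy as np


def leaky_relu(x, negative_slope=0.2):
    """LeakyReLU: identity for positive inputs, a small slope for the rest."""
    return np.where(x > 0, x, negative_slope * x)


def attention_scores(g, a, negative_slope=0.2):
    """Compute e[i, j] = LeakyReLU(a^T [g_i || g_j]) for all pairs of nodes.

    g: [N, F'] transformed node embeddings (assumed precomputed)
    a: [2 * F'] attention weight vector
    """
    n_nodes = g.shape[0]
    # g_i[i, j] = g[i] and g_j[i, j] = g[j], both of shape [N, N, F']
    g_i = np.repeat(g[:, None, :], n_nodes, axis=1)
    g_j = np.repeat(g[None, :, :], n_nodes, axis=0)
    # Concatenate along the feature axis to get [N, N, 2 * F']
    g_concat = np.concatenate([g_i, g_j], axis=-1)
    # Dot product with a over the last axis gives the [N, N] score matrix
    return leaky_relu(g_concat @ a, negative_slope)


# Toy values, chosen only for illustration
g = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
a = np.array([1.0, -1.0, 0.5, -2.0])
e = attention_scores(g, a)
```

Because the concatenation is ordered, `e[i, j]` and `e[j, i]` generally differ. Materializing all `N * N` pairs is simple but uses memory quadratic in the number of nodes.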
