Pay Attention to MLPs (gMLP)

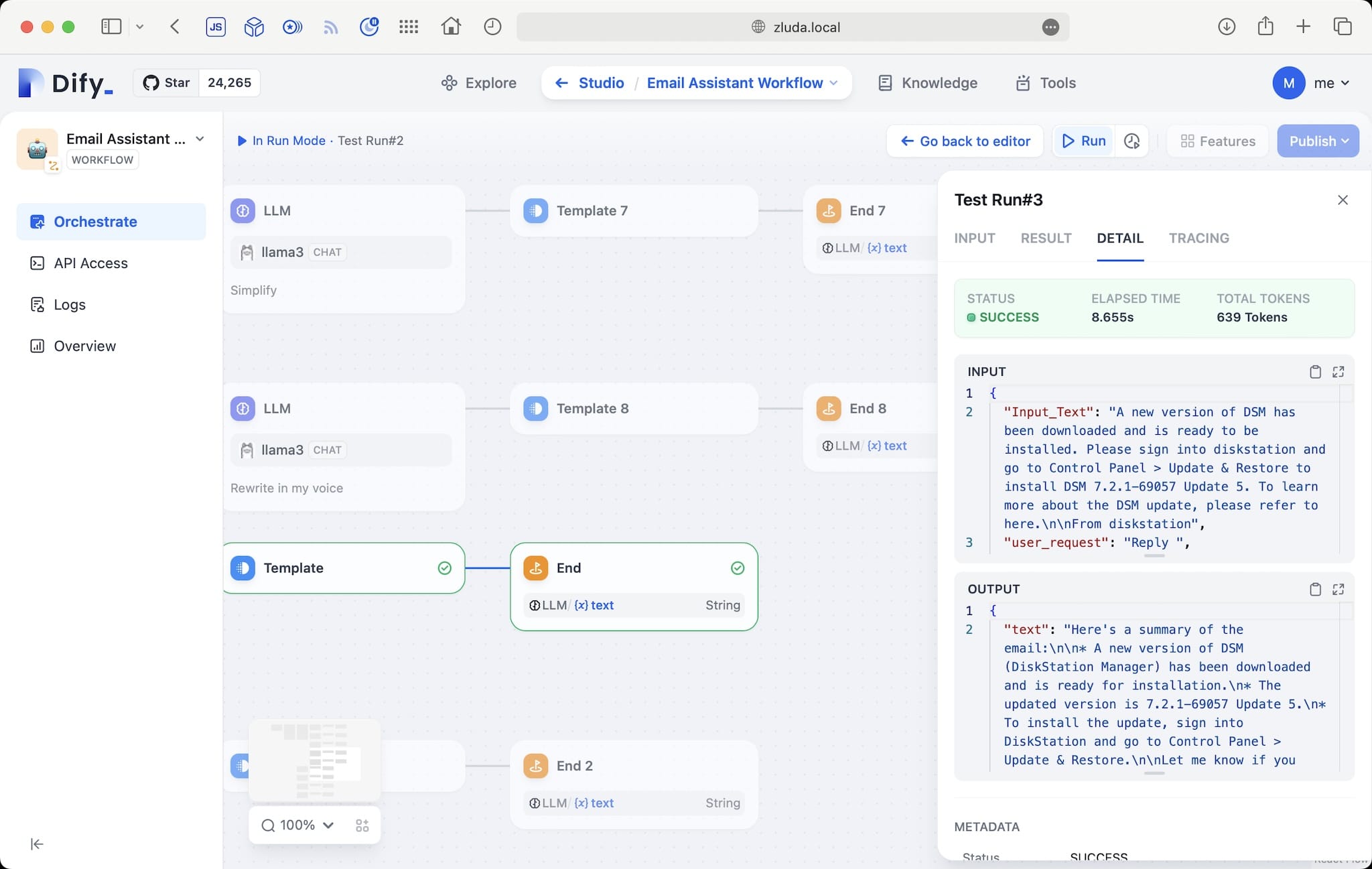

This paper introduces a Multilayer Perceptron (MLP) based architecture with gating, which they name gMLP. It consists of a stack of $L$ gMLP blocks.

Each block does the following transformations to input embeddings $X \in \mathbb{R}^{n \times d}$ where $n$ is the sequence length and $d$ is the dimensionality of the embeddings:

where $V$ and $U$ are learnable projection weights. $s(\cdot)$ is the Spacial Gating Unit defined below. Output dimensionality of $s(\cdot)$ will be half of $Z$. $\sigma$ is an activation function such as GeLU.

d_model is the dimensionality ($d$) of $X$ d_ffn is the dimensionality of $Z$ seq_len is the length of the token sequence ($n$)

Embedding size (required by Encoder. We use the encoder module from transformer architecture and plug gMLP block as a replacement for the Transformer Layer.

where $f_{W,b}(Z) = W Z + b$ is a linear transformation along the sequence dimension, and $\odot$ is element-wise multiplication. $Z$ is split into to parts of equal size $Z_1$ and $Z_2$ along the channel dimension (embedding dimension).