Optimizing LLM Prompts Using Nomadic Platform's Reinforcement Learning Framework

Submitted by Style Pass

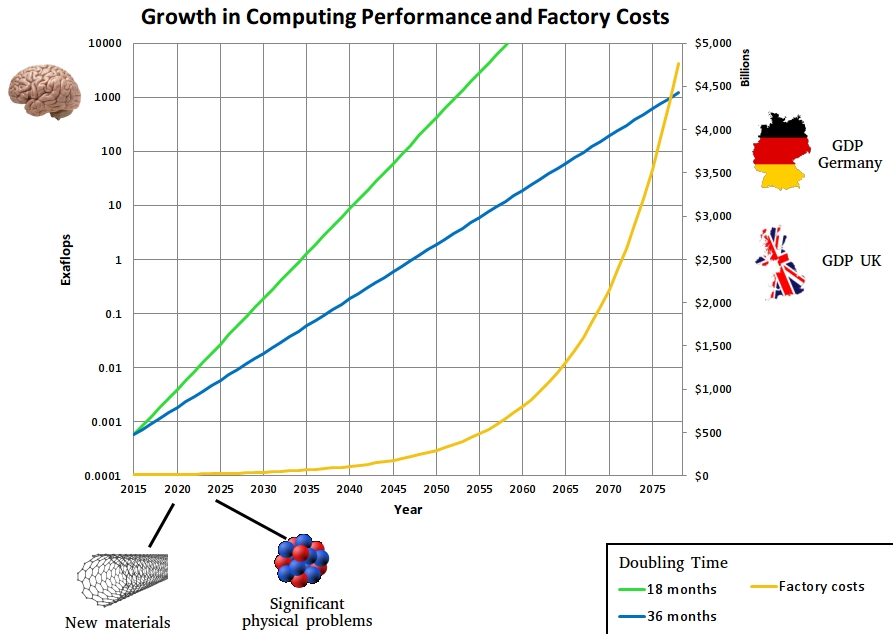

The RL Prompt Optimizer employs a reinforcement learning framework to iteratively improve prompts used for language model evaluations. At each episode, the agent selects an action to modify the current prompt based on the state representation, which encodes features of the prompt. The agent receives rewards based on a multi-metric evaluation of the model's responses, encouraging the development of prompts that elicit high-quality answers.
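The post does not include code. The sketch below is one way to picture the loop it describes, under several assumptions that do not come from the source: tabular Q-learning as the RL algorithm, a short episode of prompt edits (three by default), a stub `fake_model` in place of a real LLM call, and three placeholder metrics (correctness, reasoning, conciseness) combined with assumed weights into the reward. Every name here (`ACTIONS`, `encode_state`, `apply_action`, `multi_metric_reward`, `train`, `optimize`) is hypothetical and is not part of the Nomadic platform.

```python
import random
from collections import defaultdict

# Hypothetical prompt edits (assumption: the post does not list its action set).
ACTIONS = ["Think step by step.", "Answer in one sentence.", "You are a careful math expert."]
WEIGHTS = (0.5, 0.3, 0.2)  # assumed weights for correctness, reasoning, conciseness
HORIZON = 3  # number of edits per episode (assumption)


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call: each instruction improves one aspect of the answer."""
    answer = "The answer is 42."
    if "expert" not in prompt:
        answer = answer.replace("42", "about 40")
    if "step by step" in prompt:
        answer = "Reasoning: 6 times 7. " + answer
    if "one sentence" not in prompt:
        answer += " Let me know if you need more help."
    return answer


def multi_metric_reward(prompt: str) -> float:
    """Weighted sum of three placeholder metrics, each in [0, 1]."""
    response = fake_model(prompt)
    correctness = 1.0 if "42" in response else 0.0
    reasoning = 1.0 if response.startswith("Reasoning:") else 0.0
    conciseness = 1.0 if len(response.split()) <= 10 else 0.0
    return sum(w * m for w, m in zip(WEIGHTS, (correctness, reasoning, conciseness)))


def encode_state(prompt: str, step: int) -> tuple:
    """State = which instructions the prompt already contains, plus the step index."""
    return tuple(a in prompt for a in ACTIONS) + (step,)


def apply_action(prompt: str, action: int) -> str:
    """Append the chosen instruction; re-adding an existing one is a no-op."""
    text = ACTIONS[action]
    return prompt if text in prompt else f"{prompt} {text}"


def train(base_prompt: str, episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    """Tabular Q-learning over prompt edits with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = defaultdict(lambda: [0.0] * len(ACTIONS))
    for _ in range(episodes):
        prompt = base_prompt
        for step in range(HORIZON):
            state = encode_state(prompt, step)
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))  # explore
            else:
                action = max(range(len(ACTIONS)), key=lambda a: q[state][a])  # exploit
            new_prompt = apply_action(prompt, action)
            # Reward = improvement in the multi-metric score caused by this edit.
            reward = multi_metric_reward(new_prompt) - multi_metric_reward(prompt)
            done = step == HORIZON - 1
            next_value = 0.0 if done else max(q[encode_state(new_prompt, step + 1)])
            q[state][action] += alpha * (reward + gamma * next_value - q[state][action])
            prompt = new_prompt
    return q


def optimize(base_prompt: str, q) -> str:
    """Roll out the greedy policy learned by `train`."""
    prompt = base_prompt
    for step in range(HORIZON):
        state = encode_state(prompt, step)
        action = max(range(len(ACTIONS)), key=lambda a: q[state][a])
        prompt = apply_action(prompt, action)
    return prompt


if __name__ == "__main__":
    base = "What is 6 times 7?"
    q_table = train(base)
    best = optimize(base, q_table)
    print(best)
    print(round(multi_metric_reward(best), 2))
```

Using the per-step score improvement as the reward means an edit that adds nothing (a repeated instruction) earns zero, so the learned policy favors edits that raise the combined metric. In a real setup, `fake_model` would be replaced by actual model calls and the metrics by whatever evaluators the platform provides.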

Interactive visualizations accompanying the post illustrate various aspects of the RL prompt optimization process.

