Akshay Ballal: Machine Learning Enthusiast

In this post, we’ll demonstrate how to create a function-calling agent using Small Language Models (SLMs). SLMs pair well with LoRA adapters, which make fine-tuning cheap and can be switched on or off at inference time. Large Language Models (LLMs) are powerful, but they can be resource-intensive and slow. SLMs are more lightweight, which makes them a good fit for limited hardware or for use cases where low latency is critical.

By using SLMs with LoRA adapters, we can separate reasoning from function calling to optimize performance. For instance, the model can generate function calls with the adapter enabled and handle reasoning or thinking tasks with it disabled. Because both modes share the same base weights, only one model has to be held in memory. This flexibility makes it possible to build applications like function-calling agents without the infrastructure that larger models require.
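The adapter switching described above can be sketched with a small stand-in class. This is only an illustration under stated assumptions: `StubAdapterModel`, `think`, and `call_function` are hypothetical names, and the stub returns a tagged string instead of running a real model. The `disable_adapter()` context manager is modelled on the one that PEFT's `PeftModel` provides, so a real LoRA-wrapped model could likely be dropped into the same pattern.

```python
from contextlib import contextmanager


class StubAdapterModel:
    """Hypothetical stand-in for a LoRA-wrapped model (not a real library class)."""

    def __init__(self):
        self.adapter_enabled = True

    @contextmanager
    def disable_adapter(self):
        # Temporarily fall back to the base weights, then restore the previous state.
        previous = self.adapter_enabled
        self.adapter_enabled = False
        try:
            yield self
        finally:
            self.adapter_enabled = previous

    def generate(self, prompt: str) -> str:
        # A real model would generate text; the stub only reports which weights ran.
        mode = "adapter" if self.adapter_enabled else "base"
        return f"[{mode}] {prompt}"


def think(model, prompt):
    # Reasoning step: run the base model with the function-calling adapter disabled.
    with model.disable_adapter():
        return model.generate(prompt)


def call_function(model, prompt):
    # Function-calling step: run with the adapter active.
    return model.generate(prompt)


model = StubAdapterModel()
thought = think(model, "What should I do next?")
call = call_function(model, "Get the weather in Paris")
print(thought)
print(call)
```

Both steps go through the same object, so the base weights are loaded once and only the small adapter is toggled between calls.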

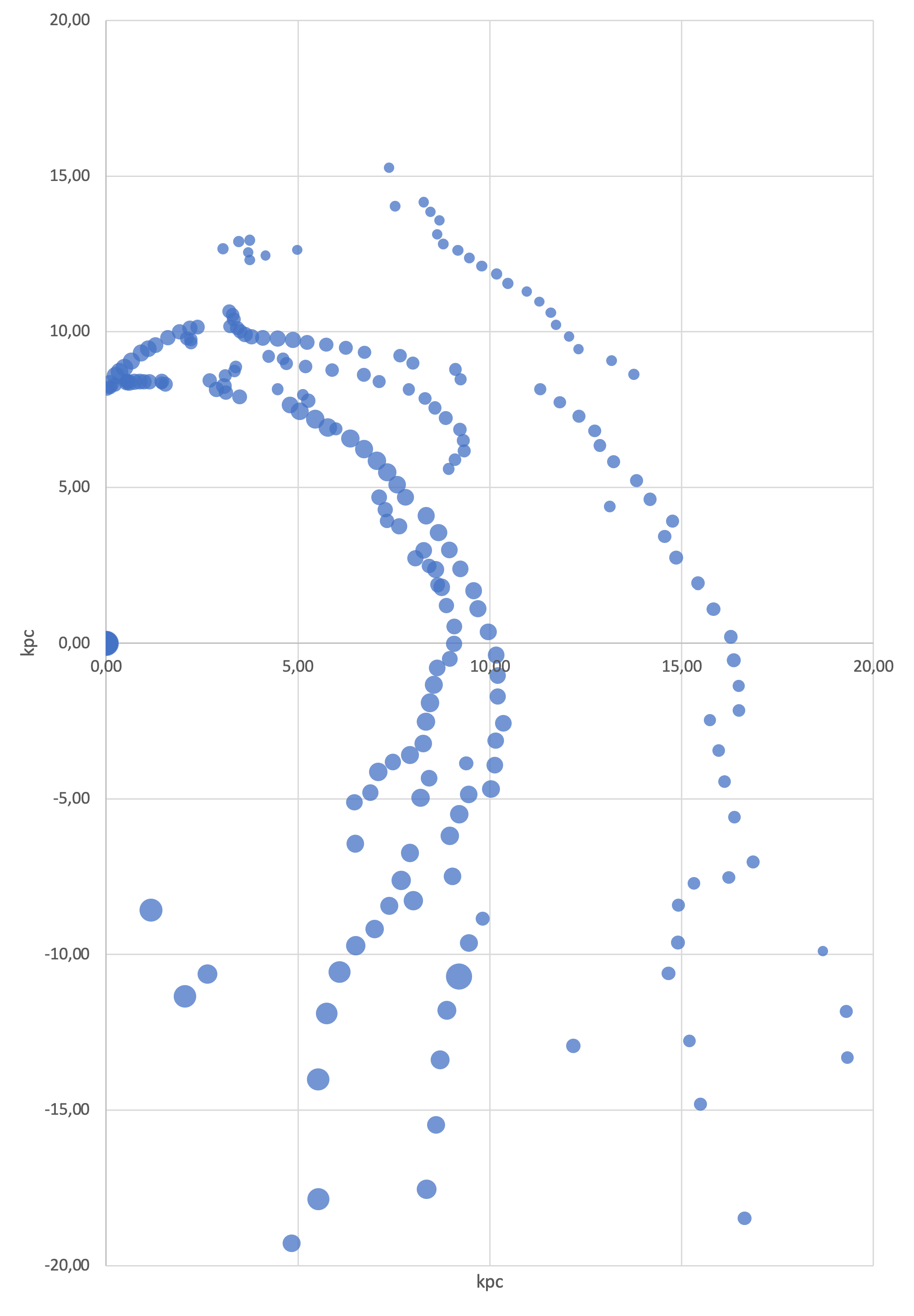

Moreover, SLMs can run on devices with limited computational power, which makes them well suited to production environments where cost and efficiency are priorities. In this example, we’ll use a custom model fine-tuned on the Salesforce/xlam-function-calling-60k dataset with Unsloth, demonstrating how you can use SLMs to build high-performance, low-resource AI applications.
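To make "function calling" concrete, here is a minimal sketch of what an agent does with the model's output. It assumes the model emits a JSON list of calls, each with a `name` and an `arguments` object, which is roughly the shape of the answers in function-calling datasets of this kind. The `get_weather` tool, the `TOOLS` registry, and the `dispatch` helper are hypothetical and exist only for illustration.

```python
import json


def get_weather(city: str) -> str:
    # Hypothetical tool; a real agent would call an external API here.
    return f"Sunny in {city}"


# Registry that maps tool names the model may emit to Python callables.
TOOLS = {"get_weather": get_weather}


def dispatch(model_output: str) -> list:
    """Parse a JSON list of function calls and run each registered tool."""
    calls = json.loads(model_output)
    results = []
    for call in calls:
        func = TOOLS.get(call["name"])
        if func is None:
            # Report unknown tools instead of raising, so the agent can recover.
            results.append({"name": call["name"], "error": "unknown tool"})
            continue
        results.append({"name": call["name"], "result": func(**call["arguments"])})
    return results


# Assumed example of what the fine-tuned model might return for a weather query.
sample_output = '[{"name": "get_weather", "arguments": {"city": "Paris"}}]'
results = dispatch(sample_output)
print(results)
```

Keeping the tool registry separate from the parsing logic means new tools can be added without touching `dispatch`.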