AI’s computing gap: academics lack access to powerful chips needed for research


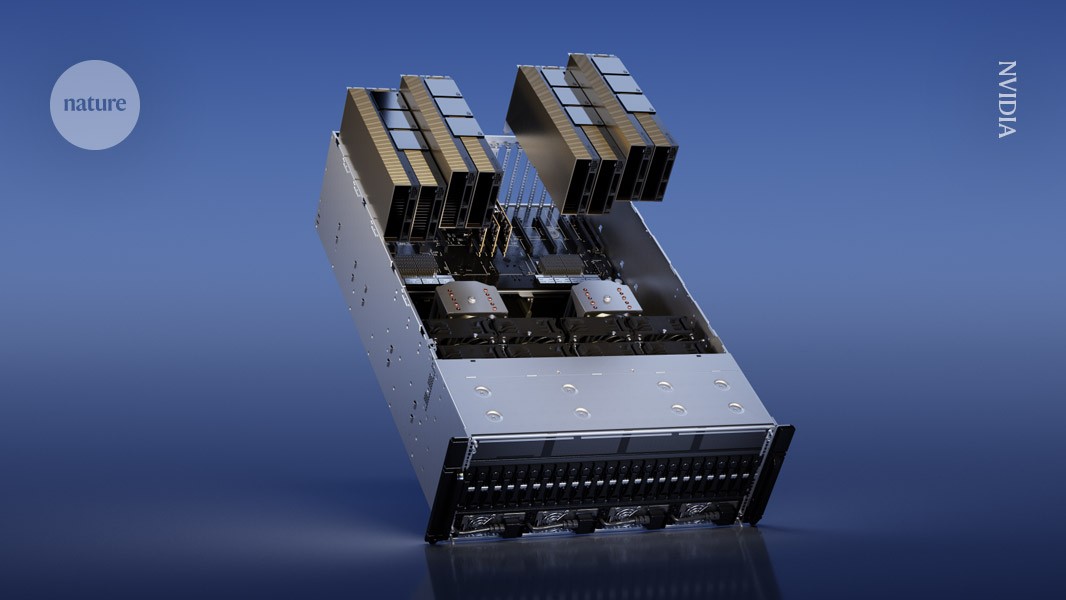

Tech giant NVIDIA’s H100 graphics-processing unit is a sought-after chip for artificial-intelligence research. Credit: NVIDIA

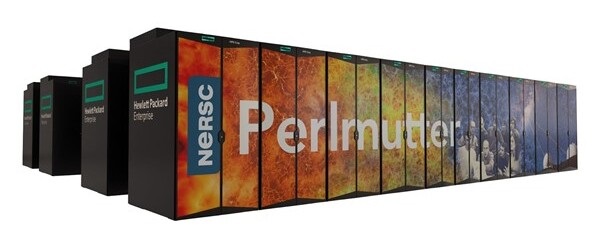

Many university scientists are frustrated by the limited amount of computing power available to them for research into artificial intelligence (AI), according to a survey of academics at dozens of institutions worldwide.

The findings1, posted to the preprint server arXiv on 30 October, suggest that academics lack access to the most advanced computing systems. This can hinder their ability to develop large language models (LLMs) and do other AI research.

In particular, academic researchers sometimes don’t have the resources to obtain sufficiently powerful graphics processing units (GPUs), the computer chips commonly used to train AI models, which can cost thousands of dollars each. By contrast, researchers at large technology companies have bigger budgets and can spend more on GPUs. “Every GPU adds more power,” says study co-author Apoorv Khandelwal, a computer scientist at Brown University in Providence, Rhode Island. “While those industry giants might have thousands of GPUs, academics maybe only have a few.”

“The gap between academic and industry models is huge, but it could be a lot smaller,” says Stella Biderman, executive director at EleutherAI, a non-profit AI research institute in Washington DC. Research into this disparity is “super important”, she says.
