On-device query intent prediction with lightweight LLMs to support ubiquitous conversations

Scientific Reports volume 14, Article number: 12731 (2024)

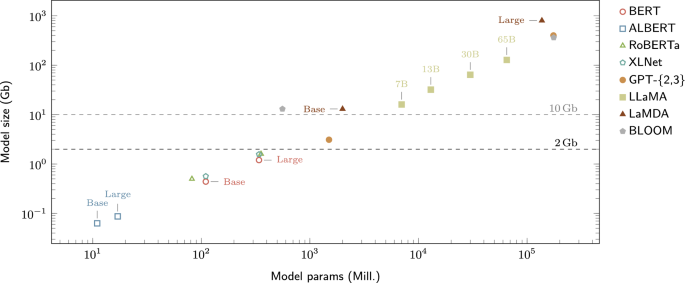

Conversational Agents (CAs) are now widely used to provide interactive assistance to users. However, current dialogue modelling techniques for CAs are predominantly based on hard-coded rules and rigid interaction flows, which limits their flexibility and scalability. Large Language Models (LLMs) can be used as an alternative, but they do not always provide good levels of privacy protection for end-users, since most of them run on cloud services. To address these problems, we leverage transfer learning and study how best to fine-tune lightweight pre-trained LLMs to predict the intent of user queries. Importantly, our LLMs allow for on-device deployment, making them suitable for personalised, ubiquitous, and privacy-preserving scenarios. Our experiments suggest that RoBERTa and XLNet offer the best trade-off considering these constraints. We also show that, after fine-tuning, these models perform on par with ChatGPT. Finally, we discuss the implications of this research for relevant stakeholders, including researchers and practitioners. Taken together, this paper provides insights into LLM suitability for on-device CAs and highlights the middle ground between LLM performance and memory footprint while also considering privacy implications.

When using cloud-based communication platforms, users often lose control over their privacy, as their data is processed by (and ends up being stored on) third-party servers and may also be used by service providers for further training. Moreover, as indicated by prior work, users’ privacy intentions are often not in sync with their behaviour, which may lead to users unwittingly disclosing sensitive information1,2. This issue is especially pertinent for systems that are designed to mimic human-like interaction, such as Conversational Agents (CAs)3,4, and particularly on mobile devices, which can be considered ‘intimate’ objects that users rarely part with5.
