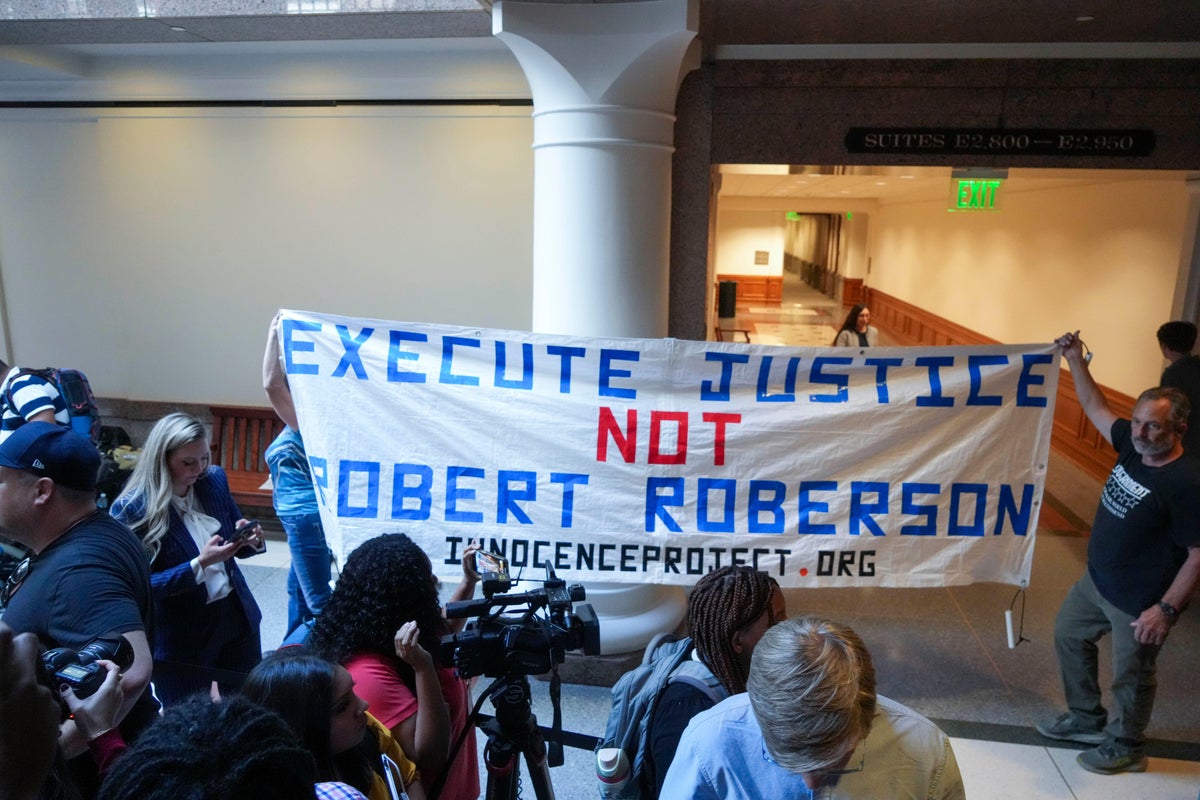

AI models fall for the same scams that we do

Large language models can be used to scam humans, but AI is also susceptible to being scammed – and some models are more gullible than others

The large language models (LLMs) that power chatbots are increasingly being used in attempts to scam humans – but they are susceptible to being scammed themselves.

Udari Madhushani Sehwag at JP Morgan AI Research and her colleagues peppered three models behind popular chatbots – OpenAI’s GPT-3.5 and GPT-4, as well as Meta’s Llama 2 – with 37 scam scenarios.

The chatbots were told, for instance, that they had received an email recommending investing in a new cryptocurrency, with…
