HPC Market Bigger Than Expected, And Growing Faster

As far as we have been concerned since founding The Next Platform literally a decade ago this week, AI training and inference in the datacenter are a kind of HPC.

They are very different in some ways. HPC takes a tiny dataset and blows it up into a massive simulation like a weather or climate forecast that you can see, and AI takes a mountain of data about the world and grinds it down to a model you can pour new data through to make it answer questions. HPC and AI have different compute, memory, and bandwidth needs at different stages of their applications. But ultimately, HPC and AI training are trying to make a lot of computers behave like one to do a big job that is impossible to do any other way.

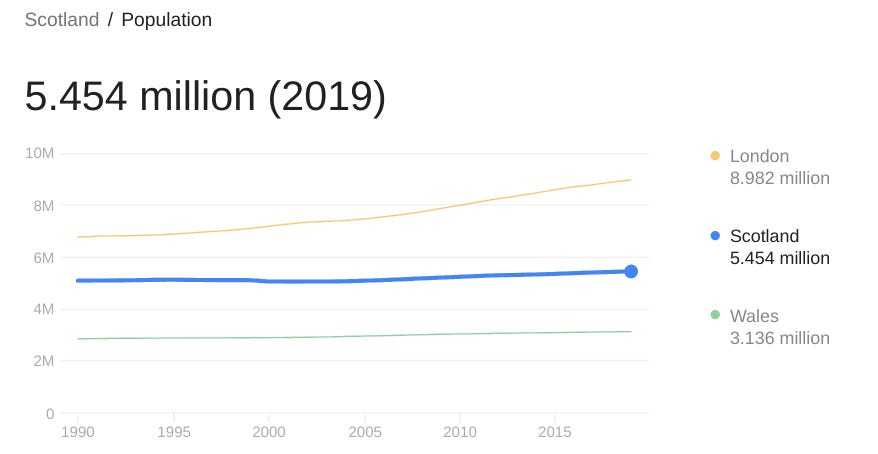

If this is true, then we need to add the money being spent on traditional ModSim platforms to the money being spent on GenAI and traditional AI training and inference in the datacenter to get the “real” HPC numbers. This is what Hyperion Research has been trying to do for a few years, and it has just made a substantial revision to its HPC server spending market to take into account the large number of AI servers that are being sold to customers by Nvidia, Supermicro, and others that it has not counted in the past.

Seating was a little tight at the traditional Tuesday morning briefing that Hyperion gives at the SC supercomputing conference, so we were close to the projection screens and at a very tight angle, which makes the charts a little hard to read. Just click to enlarge them and they will be easier to read.