Exploring parsing APIs: adding a lexer

In this post we’ll add a lexer (or “tokenizer”) with two different APIs, and for each API we’ll see how the parsers from the previous post perform when combined with the lexer.

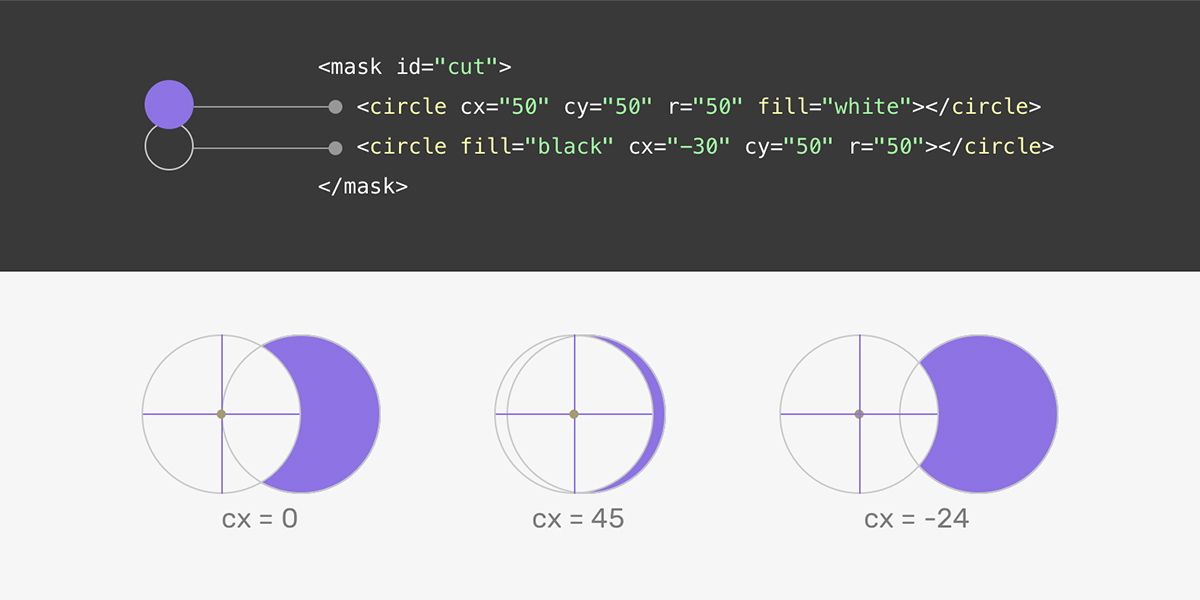

What is a lexer? A lexer is very similar to the event parsers we saw in the previous post, but it doesn’t try to maintain any structure. It generates “tokens”, which are parts of the program that cannot be split into smaller parts. A lexer doesn’t care about parentheses or other delimiters being balanced, or that values in an array are separated by commas, or anything else. It simply splits the input into tokens.
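Here is a minimal sketch of a lexer for a small JSON-like language. It is written in Rust, and the `Token` type, the `tokenize` function, and the simplified rules (strings without escapes, a loose number syntax) are all assumptions made for illustration. They are not the API developed in this series. The example input has unbalanced delimiters and no commas, and the lexer accepts it anyway, because checking that is the parser’s job.

```rust
/// Tokens of a small JSON-like language (hypothetical token set, for illustration).
#[derive(Debug, Clone, PartialEq)]
enum Token {
    LBracket,
    RBracket,
    LBrace,
    RBrace,
    Comma,
    Colon,
    True,
    False,
    Null,
    Number(String),
    Str(String),
}

/// Maps single-character tokens; returns None for anything else.
fn punctuation(c: char) -> Option<Token> {
    match c {
        '[' => Some(Token::LBracket),
        ']' => Some(Token::RBracket),
        '{' => Some(Token::LBrace),
        '}' => Some(Token::RBrace),
        ',' => Some(Token::Comma),
        ':' => Some(Token::Colon),
        _ => None,
    }
}

/// Splits `input` into tokens. Nothing here checks that brackets balance
/// or that commas separate values: that is left to the parser.
fn tokenize(input: &str) -> Result<Vec<Token>, String> {
    let mut tokens = Vec::new();
    let mut chars = input.char_indices().peekable();

    while let Some(&(start, c)) = chars.peek() {
        if c.is_whitespace() {
            chars.next();
        } else if let Some(tok) = punctuation(c) {
            chars.next();
            tokens.push(tok);
        } else if c == '"' {
            // Strings without escape sequences, to keep the sketch short.
            chars.next();
            let mut s = String::new();
            loop {
                match chars.next() {
                    Some((_, '"')) => break,
                    Some((_, ch)) => s.push(ch),
                    None => return Err(format!("unterminated string at byte {start}")),
                }
            }
            tokens.push(Token::Str(s));
        } else if c == '-' || c.is_ascii_digit() {
            // Loose number syntax: an optional leading '-', then digits and dots.
            chars.next();
            while chars.next_if(|&(_, ch)| ch.is_ascii_digit() || ch == '.').is_some() {}
            let end = chars.peek().map_or(input.len(), |&(i, _)| i);
            tokens.push(Token::Number(input[start..end].to_string()));
        } else if c.is_ascii_alphabetic() {
            // Consume the whole word, then check it is one of the keywords.
            while chars.next_if(|&(_, ch)| ch.is_ascii_alphabetic()).is_some() {}
            let end = chars.peek().map_or(input.len(), |&(i, _)| i);
            tokens.push(match &input[start..end] {
                "true" => Token::True,
                "false" => Token::False,
                "null" => Token::Null,
                word => return Err(format!("unknown word {word:?} at byte {start}")),
            });
        } else {
            return Err(format!("unexpected character {c:?} at byte {start}"));
        }
    }
    Ok(tokens)
}

fn main() {
    // Unbalanced delimiters and missing commas: a parser would reject this,
    // but the lexer still produces a token list.
    let tokens = tokenize(r#"[1 2 } "a" : true"#).unwrap();
    println!("{tokens:?}");
    // prints: [LBracket, Number("1"), Number("2"), RBrace, Str("a"), Colon, True]
}
```

The lexer can still fail, but only on input that cannot be split into tokens at all, such as an unterminated string or an unknown character.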

Why is a lexer useful? If you already have an event parser, adding a lexer may not enable many new use cases. The main ones I’m aware of are:

Syntax highlighting: when highlighting syntax we don’t care about the tree structure; we care about keywords, punctuation (list separators, dots in paths, etc.), delimiters (commas, braces, brackets), and literals. A lexer gives us exactly these and nothing else.
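A highlighter only needs the flat token sequence. The sketch below, again in Rust, classifies input into the four categories above (using the grouping given in the text, with commas, braces, and brackets as delimiters) and wraps each token in an HTML `<span>`. The keyword list, the CSS class names, and the `lex` and `highlight` functions are hypothetical. Unlike a parser, it keeps going on half-typed input such as an unclosed bracket or string, which is what an editor needs while the user is typing.

```rust
use std::ops::Range;

/// Highlighting categories from the text.
#[derive(Clone, Copy)]
enum Kind {
    Keyword,
    Punctuation,
    Delimiter,
    Literal,
}

impl Kind {
    /// Hypothetical CSS class names; any theme would do.
    fn css_class(self) -> &'static str {
        match self {
            Kind::Keyword => "kw",
            Kind::Punctuation => "punct",
            Kind::Delimiter => "delim",
            Kind::Literal => "lit",
        }
    }
}

/// Hypothetical keyword list for a JSON-like language.
const KEYWORDS: &[&str] = &["true", "false", "null"];

/// Returns (category, byte range) pairs. Never fails: an unterminated
/// string simply runs to the end of the input.
fn lex(src: &str) -> Vec<(Kind, Range<usize>)> {
    let mut out = Vec::new();
    let mut it = src.char_indices().peekable();
    while let Some((start, c)) = it.next() {
        let kind = match c {
            '{' | '}' | '[' | ']' | ',' => Some(Kind::Delimiter),
            ':' | '.' => Some(Kind::Punctuation),
            '"' => {
                // Consume through the closing quote, or to the end of input.
                for (_, ch) in it.by_ref() {
                    if ch == '"' {
                        break;
                    }
                }
                Some(Kind::Literal)
            }
            c if c.is_ascii_digit() => {
                while it.next_if(|&(_, ch)| ch.is_ascii_digit() || ch == '.').is_some() {}
                Some(Kind::Literal)
            }
            c if c.is_ascii_alphabetic() => {
                while it.next_if(|&(_, ch)| ch.is_ascii_alphanumeric()).is_some() {}
                let end = it.peek().map_or(src.len(), |&(i, _)| i);
                let word = &src[start..end];
                if KEYWORDS.contains(&word) { Some(Kind::Keyword) } else { None }
            }
            // Whitespace, other words, and unknown characters stay unhighlighted.
            _ => None,
        };
        if let Some(kind) = kind {
            let end = it.peek().map_or(src.len(), |&(i, _)| i);
            out.push((kind, start..end));
        }
    }
    out
}

/// Escapes the characters that are special in HTML text.
fn escape(s: &str) -> String {
    s.replace('&', "&amp;").replace('<', "&lt;").replace('>', "&gt;")
}

/// Wraps each token in a span; text between tokens is copied through.
fn highlight(src: &str) -> String {
    let mut html = String::new();
    let mut last = 0;
    for (kind, range) in lex(src) {
        html.push_str(&escape(&src[last..range.start]));
        html.push_str(&format!(
            "<span class=\"{}\">{}</span>",
            kind.css_class(),
            escape(&src[range.start..range.end])
        ));
        last = range.end;
    }
    html.push_str(&escape(&src[last..]));
    html
}

fn main() {
    // Unclosed bracket and unterminated string: still highlighted.
    println!("{}", highlight(r#"[true, "x"#));
    // prints: <span class="delim">[</span><span class="kw">true</span><span class="delim">,</span> <span class="lit">"x</span>
}
```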
