Best Questions and Answers to the “Terrifying Netflix Concurrency Bug” Post

Hello friends. A few months ago, I wrote a post about a terrifying concurrency bug we experienced during my time at Netflix.

It was Friday, the owning team couldn’t have a proper fix ready until Monday at the earliest, and we weren’t able to roll back easily.

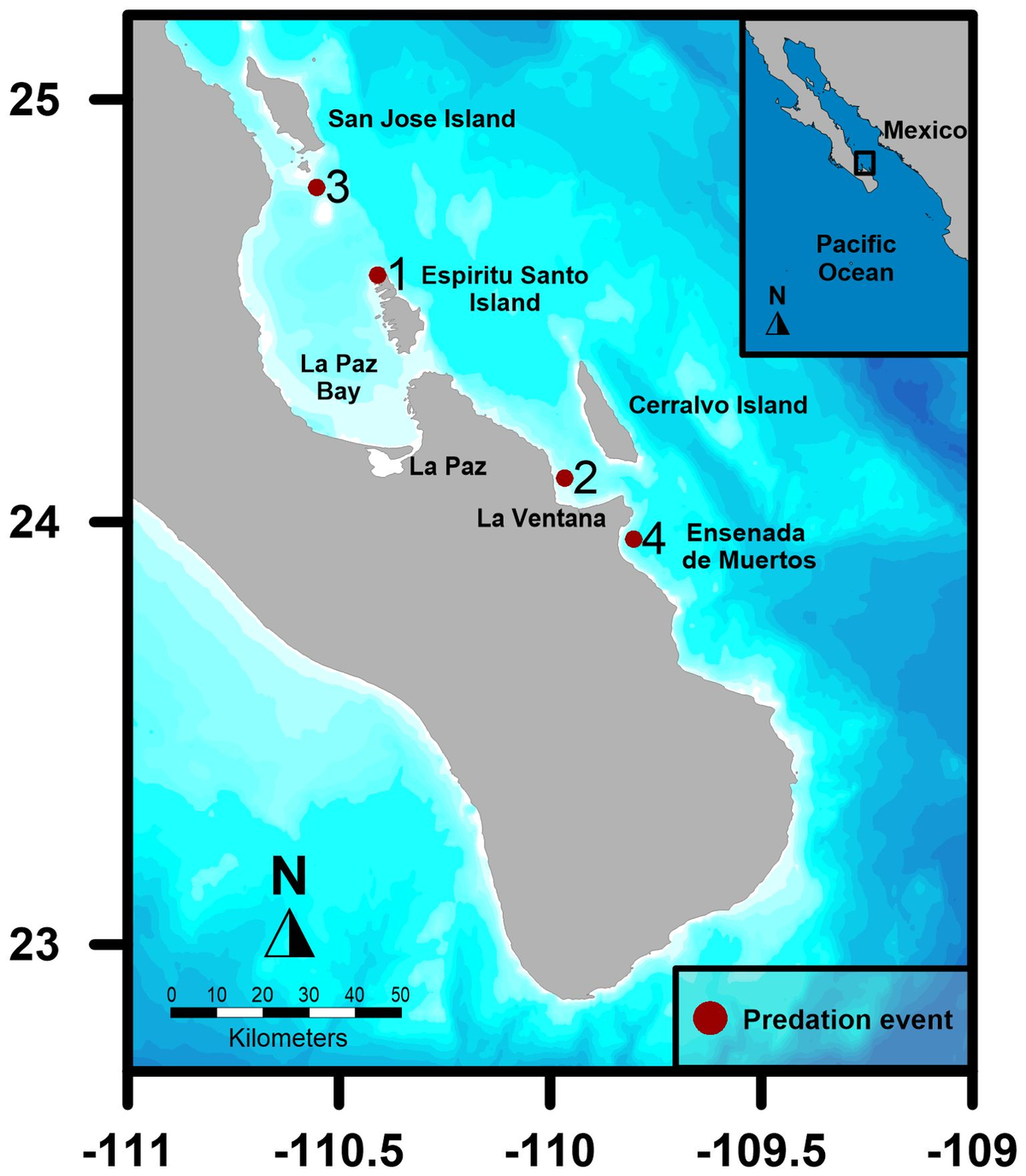

As a temporary fix, we scaled our cluster to max capacity, shut off auto-scaling, and quasi-randomly terminated a few instances per hour to regain fresh capacity.
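For anyone who wants to see the shape of that last step, here is a minimal sketch of picking a few random instances to terminate each hour. Everything in it is assumed for illustration: the helper name `pick_instances_to_terminate`, the made-up instance IDs, and the count of three are all hypothetical, and this is not the tooling we actually used. A real version would hand the chosen IDs to a cloud provider API instead of printing them.

```python
import random


def pick_instances_to_terminate(instance_ids, count, rng=None):
    """Choose up to `count` distinct instances at random.

    Hypothetical helper for illustration only; it selects IDs and
    does not terminate anything.
    """
    rng = rng or random.Random()
    # Never ask for more instances than the fleet actually has.
    count = min(count, len(instance_ids))
    return rng.sample(list(instance_ids), count)


if __name__ == "__main__":
    # Made-up fleet of 1000 instance IDs.
    fleet = [f"i-{n:04d}" for n in range(1000)]
    # Run once per hour (e.g. from a scheduler) to recycle a few nodes.
    print(pick_instances_to_terminate(fleet, count=3))
```

Sampling without replacement means the same instance is never picked twice in one round, and since the replacements come up fresh, each round returns a small slice of the cluster to a healthy state.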

The post was recently picked up by Hacker News and a few other aggregators, which brought in an unexpected but appreciated surge of traffic.

There were some interesting questions in the comment threads, so I thought I’d share them here, along with my responses, which were hidden by Hacker News because I have no karma.

There’s something about the Chaos Monkey that often gets lost — it was a cool, funny, and useful idea, but not particularly impactful when running a stateless cluster of 1000+ nodes.

On any given day, we were already auto-terminating a non-zero number of “bad” nodes for reasons we didn’t fully understand — maybe it was bad hardware, a noisy neighbor, or a handful of other things.