Memory in Agents: Make LLMs Remember

Imagine hiring a brilliant co-worker. They can reason, write, and research with incredible skill. But there’s a catch: every day, they forget everything they ever did, learned, or said. This is the reality of most agents today. They are powerful but inherently stateless. We are making significant progress in reasoning and tool use, but the ability of agents to remember past interactions, preferences, and learned skills remains heavily underexplored.

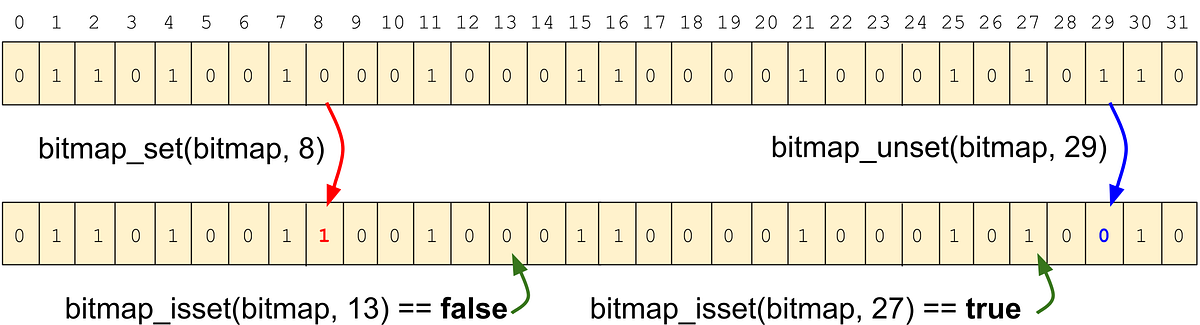

The context window of an LLM is a (currently) limited space in which it processes information. Because its bandwidth is limited, it should contain the right information and tools, in the right format, at the right time for the LLM to perform a task. This practice is now known as Context Engineering.

It is a delicate balance "of filling the context window with just the right information for the next step" - AK. Too little context, and the agent fails. Too much or irrelevant context, and costs rise while performance can decrease. The most powerful source of that "right information" is the agent's own memory.
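To make the trade-off concrete, here is a minimal sketch of filling a context window from memory under a token budget. Everything in it is an assumption for illustration: `MemoryItem`, `estimate_tokens`, `build_context`, the relevance scores, and the word-count token estimate are hypothetical and not taken from any particular framework.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    relevance: float  # hypothetical score in [0, 1], e.g. produced by a retriever


def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def build_context(
    memories: list[MemoryItem], budget: int, min_relevance: float = 0.5
) -> list[str]:
    """Greedily pick the most relevant memories that fit within the token budget."""
    selected: list[str] = []
    used = 0
    for item in sorted(memories, key=lambda m: m.relevance, reverse=True):
        if item.relevance < min_relevance:
            break  # remaining items are even less relevant: leave them out
        cost = estimate_tokens(item.text)
        if used + cost > budget:
            continue  # does not fit; a shorter item further down may still fit
        selected.append(item.text)
        used += cost
    return selected


memories = [
    MemoryItem("User prefers concise answers", 0.9),
    MemoryItem("User is building a travel booking agent in Python", 0.8),
    MemoryItem("User once asked about the weather in Berlin", 0.2),
]
context = build_context(memories, budget=15)
```

With a budget of 15, the two relevant memories fit and the irrelevant one is dropped. Shrinking the budget to 5 keeps only the first. The relevance threshold guards against "too much or irrelevant context", and the budget guards against rising cost.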