Operationalize vector search with Pinecone and Feast Feature Store

Vector embeddings are the key ingredient that makes similarity search possible. Raw data flows from a data store or data stream through an embedding model, which converts it into vector embeddings, and those embeddings are then loaded into the vector search index.
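To make that flow concrete, here is a minimal, dependency-free Python sketch of the pipeline. It uses neither Pinecone nor a real embedding model: `embed` is a hypothetical stand-in that counts words from a small fixed vocabulary, and `InMemoryIndex` is a hypothetical toy index that ranks documents by cosine similarity. In a real system these would be a trained embedding model and a vector search service.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical fixed vocabulary; a real embedding model produces learned dense vectors instead.
VOCAB = ["feast", "feature", "store", "pinecone", "vector",
         "database", "managed", "search", "banana", "potassium"]
VOCAB_INDEX = {word: i for i, word in enumerate(VOCAB)}


def embed(text: str) -> List[float]:
    """Toy stand-in for an embedding model: L2-normalized bag-of-words counts."""
    vec = [0.0] * len(VOCAB)
    for token in text.lower().split():
        if token in VOCAB_INDEX:
            vec[VOCAB_INDEX[token]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0  # avoid dividing by zero
    return [v / norm for v in vec]


class InMemoryIndex:
    """Toy vector index: stores id -> vector and answers top-k similarity queries."""

    def __init__(self) -> None:
        self.vectors: Dict[str, List[float]] = {}

    def upsert(self, doc_id: str, vector: List[float]) -> None:
        self.vectors[doc_id] = vector  # insert, or overwrite a stale vector

    def query(self, vector: List[float], top_k: int = 3) -> List[Tuple[str, float]]:
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [(doc_id, sum(a * b for a, b in zip(vector, v)))
                  for doc_id, v in self.vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


# Raw data (a dict standing in for a data store) -> embedding model -> vector index.
raw_documents = {
    "doc-1": "feast is a feature store for machine learning",
    "doc-2": "pinecone is a managed vector database",
    "doc-3": "a banana is a good source of potassium",
}

index = InMemoryIndex()
for doc_id, text in raw_documents.items():
    index.upsert(doc_id, embed(text))

results = index.query(embed("which vector database is managed"), top_k=2)
print(results)
```

Because every document must pass through the same `embed` function before it reaches the index, any change to the model or to the raw data means re-embedding and re-upserting, which is exactly the maintenance burden described next.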

If you have multiple data sources and frequent data updates, and you are constantly experimenting with different models, it becomes harder to keep the search index accurate and up to date. A stale or inconsistent index leads to subpar results in recommender systems, search applications, or wherever else you use vector search.

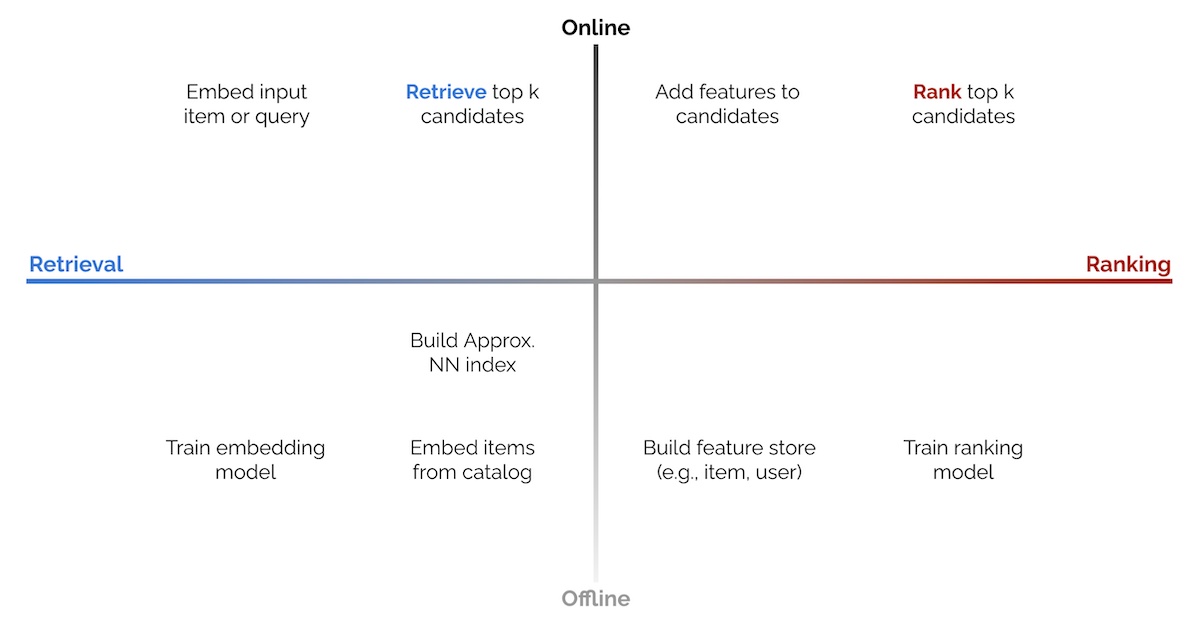

How you store and manage these assets, the vector embeddings, is crucial to the accuracy and freshness of your vector search results. This is where feature stores come in. Feature stores provide a centralized place for managing vector embeddings in organizations with sprawling data sources and frequently updated models. They enable efficient feature engineering and management, feature reuse, and consistent embeddings across online and batch workloads.
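The sketch below illustrates, in a deliberately simplified way, the bookkeeping a feature store takes over for embeddings. `ToyEmbeddingStore` is hypothetical and is not Feast's API: it keeps one embedding per entity and model version, ignores late-arriving older writes, serves the same values to online lookups and batch reads, and reports which embeddings are stale and need refreshing. Feast itself separates an offline store from an online store and materializes features from one into the other; this toy collapses both into a single dictionary.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional, Tuple


@dataclass
class EmbeddingRecord:
    vector: List[float]
    model_version: str
    updated_at: datetime


@dataclass
class ToyEmbeddingStore:
    """Hypothetical in-memory stand-in for a feature store holding embeddings.

    Records are keyed by (entity_id, model_version), so embeddings from several
    models can coexist and every consumer reads from the same place.
    """

    records: Dict[Tuple[str, str], EmbeddingRecord] = field(default_factory=dict)

    def write(self, entity_id: str, vector: List[float],
              model_version: str, updated_at: datetime) -> None:
        key = (entity_id, model_version)
        current = self.records.get(key)
        # Keep only the freshest embedding; ignore late-arriving older writes.
        if current is None or updated_at >= current.updated_at:
            self.records[key] = EmbeddingRecord(vector, model_version, updated_at)

    def get_online(self, entity_id: str, model_version: str) -> Optional[List[float]]:
        # Single-entity lookup, as used at query time.
        record = self.records.get((entity_id, model_version))
        return None if record is None else record.vector

    def get_batch(self, model_version: str) -> Dict[str, List[float]]:
        # Batch consumers (e.g. a job that rebuilds the vector index) see the same values.
        return {eid: rec.vector
                for (eid, version), rec in self.records.items()
                if version == model_version}

    def stale_entities(self, model_version: str, now: datetime,
                       max_age: timedelta) -> List[str]:
        # Entities whose embedding is older than max_age and should be refreshed.
        return sorted(eid
                      for (eid, version), rec in self.records.items()
                      if version == model_version and now - rec.updated_at > max_age)


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
store = ToyEmbeddingStore()
store.write("doc-1", [0.1, 0.2], "model-v1", t0)
store.write("doc-1", [0.3, 0.4], "model-v1", t0 + timedelta(hours=1))  # newer write wins
store.write("doc-1", [0.9, 0.9], "model-v1", t0 - timedelta(hours=1))  # older write ignored
store.write("doc-2", [0.5, 0.6], "model-v1", t0)
store.write("doc-1", [0.7, 0.8, 0.9], "model-v2", t0)  # new model stored side by side

print(store.get_online("doc-1", "model-v1"))  # → [0.3, 0.4]
print(store.stale_entities("model-v1", now=t0 + timedelta(hours=25),
                           max_age=timedelta(hours=24)))  # → ['doc-2']
```

Keying on the model version lets a new embedding model be backfilled next to the current one, so the search index can be switched over only once the new vectors are complete.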

Combining a feature store with a similarity search service leads to more accurate and reliable retrieval within your AI/ML applications. In this article, we will build a question-answering application to demonstrate how the Feast feature store can be used alongside Pinecone's vector search service.