Radiance Surfaces: Optimizing Surface Representations with a 5D Radiance Field Loss | RGL

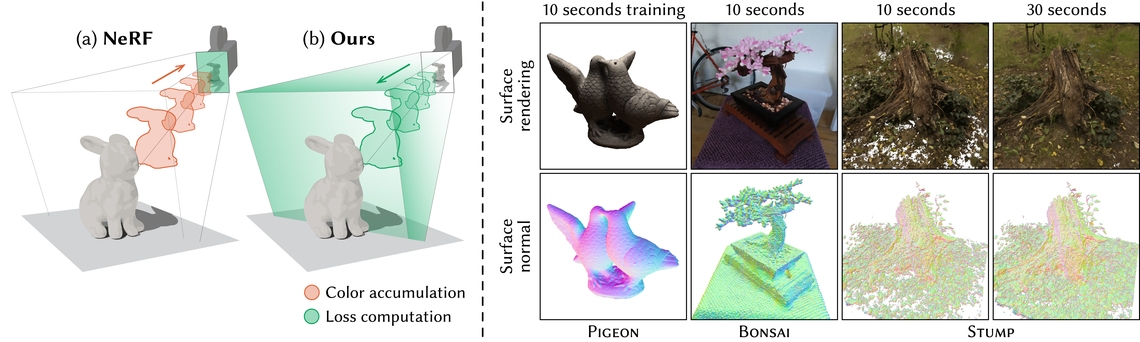

Our method reconstructs surfaces with the speed and robustness of NeRF-style methods. Left: in contrast to volume-based methods that minimize 2D image losses (a), we adopt a spatio-directional radiance field loss formulation (b). At each step, our method considers a distribution of optically independent surfaces and increases the confidence of candidates that agree with the reference imagery. Right: a meaningful surface can be extracted at any iteration during optimization.

We present a fast and simple technique to convert images into a scene representation based on radiance surfaces. Building on existing radiance volume reconstruction algorithms, we introduce a subtle yet impactful modification of the loss function that requires changing only a few lines of code: instead of integrating the radiance field along rays and supervising the resulting images, we project the training images into the scene to directly supervise the spatio-directional radiance field.
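The change is described above only in words. The following dependency-free Python sketch contrasts the two loss styles for a single ray under one assumed reading: the reference pixel color is compared against each sample's own radiance, and the per-sample errors are weighted by the standard volume-rendering termination probabilities w_i = T_i * alpha_i. The function names (`termination_weights`, `image_loss`, `radiance_field_loss`) are hypothetical and this is not the authors' released code; it only illustrates why such a loss swap can touch just a few lines.

```python
import math
from typing import List, Sequence

# One RGB triple, e.g. (r, g, b); plain sequences keep the sketch dependency-free.
Color = Sequence[float]


def termination_weights(sigmas: Sequence[float], deltas: Sequence[float]) -> List[float]:
    """Volume-rendering weights w_i = T_i * alpha_i for the samples along one ray."""
    weights = []
    transmittance = 1.0  # T_i: fraction of light reaching sample i unoccluded
    for sigma, delta in zip(sigmas, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weights.append(transmittance * alpha)   # probability the ray terminates here
        transmittance *= 1.0 - alpha
    return weights


def squared_error(a: Color, b: Color) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def image_loss(sigmas, deltas, colors: Sequence[Color], target: Color) -> float:
    """(a) Volume-style loss: integrate radiance along the ray, then compare one pixel."""
    w = termination_weights(sigmas, deltas)
    pixel = [sum(wi * c[k] for wi, c in zip(w, colors)) for k in range(len(target))]
    return squared_error(pixel, target)


def radiance_field_loss(sigmas, deltas, colors: Sequence[Color], target: Color) -> float:
    """(b) Hypothetical radiance-field loss: the reference pixel is projected onto
    every sample, and each sample's own color error is weighted by its
    probability of being the visible surface."""
    w = termination_weights(sigmas, deltas)
    return sum(wi * squared_error(c, target) for wi, c in zip(w, colors))


# Usage: a red and a blue sample, each with weight 0.5, seen against a purple pixel.
sigmas = [math.log(2.0), math.inf]  # alpha = 0.5, then a fully opaque sample
deltas = [1.0, 1.0]
colors = [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
target = (0.5, 0.0, 0.5)
print(image_loss(sigmas, deltas, colors, target))           # ~0.0: the blend matches
print(radiance_field_loss(sigmas, deltas, colors, target))  # ~0.5: neither surface does
```

In this toy case the image loss is satisfied by a semi-transparent blend of two wrong colors, while the per-sample loss is not, because neither candidate surface agrees with the reference on its own. When the weights along a ray sum to one, the per-sample loss equals the image loss plus the weighted color variance sum_i w_i * |c_i - c_mean|^2, which is the term that penalizes such blends.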