Stochastic Lifestyle

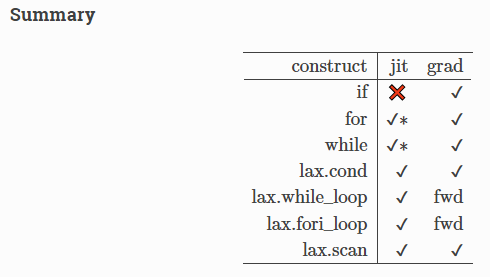

In some previous blog posts we described in details how one can generalize automatic differentiation to give automatically stability enhancements and all sorts of other niceties by incorporating graph transformations into code generation. However, one of the things which we didn't go into too much is the limitation of these types of algorithms. This limitation is what we have termed "quasi-static" which is the property that an algorithm can be reinterpreted as some static algorithm. It turns out that for very fundamental reasons, this is the same limitation that some major machine learning frameworks impose on the code that they can fully optimize, such as Jax or Tensorflow. This led us to the question: are there algorithms which are not optimizable within this mindset, and why? The answer is now published at ICML 2021, so lets dig into this higher level concept.

First of all, lets come up with a concrete idea of what a quasi-static algorithm is. It's the space of algorithms which in some way can be re-expressed as a static algorithm. Think of a "static algorithm" as one which has a simple mathematical description that does not require a full computer description, i.e. no loops, rewriting to memory, etc. As an example, let's take a look at an example from the Jax documentation. The following is something that the Jax JIT works on: