Buckets of Parquet Files are Awful

I understand why people ship data this way: all of the big data tools know how to talk to S3 and dump data in Parquet format, so it's pretty easy to set up as a programmer. "Customers can ingest that data however they want!"

I am here to say that this sucks for anyone who doesn't have a team dedicated to writing scripts to babysit file transfers. Please stop.

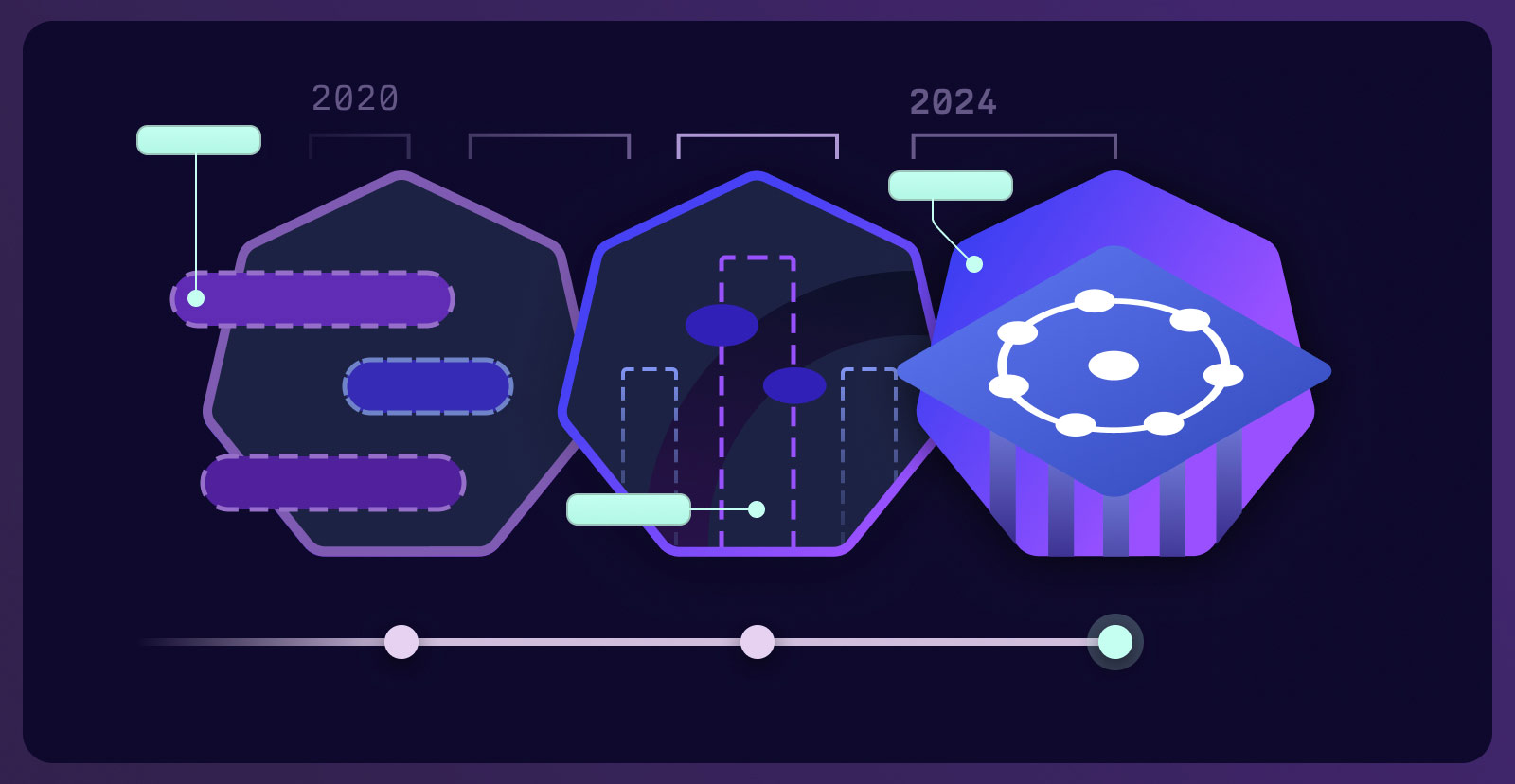

Sometimes my database knows how to speak Parquet but I still need to process it in chunks. Sometimes I can coax the DB into a larger import. There are a hundred optimizations that can be made depending on the tech, but the gist of the solution is the same:
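For a sense of what "process it in chunks" means in practice, here is a minimal sketch using only the Python standard library. Everything in it is an assumption made for illustration: the `events` table, the `(id, payload)` row shape, the helper names `chunked` and `load_in_chunks`, and SQLite standing in for whatever database you actually run. Reading the rows out of the Parquet files is left out entirely; the rows are assumed to arrive as a plain iterable.

```python
import sqlite3
from itertools import islice
from typing import Iterable, Iterator, List, Tuple

# Hypothetical row shape for illustration: (id, payload).
Row = Tuple[int, str]


def chunked(rows: Iterable[Row], size: int) -> Iterator[List[Row]]:
    """Yield successive lists of at most `size` rows from any iterable."""
    it = iter(rows)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def load_in_chunks(
    conn: sqlite3.Connection, rows: Iterable[Row], chunk_size: int = 1000
) -> int:
    """Insert rows one chunk at a time and return how many were written.

    The `events` table is an assumed schema, not anything from a real export.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS events (id INTEGER PRIMARY KEY, payload TEXT)"
    )
    total = 0
    for chunk in chunked(rows, chunk_size):
        # One transaction per chunk: a failure only rolls back that chunk,
        # and memory use stays bounded by chunk_size.
        with conn:
            conn.executemany(
                "INSERT INTO events (id, payload) VALUES (?, ?)", chunk
            )
        total += len(chunk)
    return total


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    # A generator stands in for rows streamed out of the exported files.
    rows = ((i, f"row-{i}") for i in range(2500))
    print(load_in_chunks(conn, rows, chunk_size=1000))
```

Even this toy version has to pick a chunk size, decide on transaction boundaries, and work out what happens when a chunk fails halfway through, which is exactly the babysitting I'm complaining about.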

I do not care about data pipelines, Airflow, Spark, row groups, Arrow, Kafka, pushdown filtering, or any of the other distractions from building.

I want to write software that does stuff, and I want to be able to handle large amounts of data and have the tools just do the right thing.

All of the other solutions are too fucking complicated and just don't work that well. (Listening to the Postgres wire protocol for replication? Way too complicated and too much setup. Tools that integrate poorly with 1,000 different products? Come on.)