Giving robots superhuman vision using radio signals

In the race to develop robust perception systems for robots, one persistent challenge has been operating in bad weather and harsh conditions. For example, traditional light-based vision sensors such as cameras or LiDAR (Light Detection And Ranging) fail in heavy smoke and fog.

However, nature has shown that vision doesn't have to be constrained by light's limitations -- many organisms have evolved ways to perceive their environment without relying on light. Bats navigate using the echoes of sound waves, while sharks hunt by sensing electrical fields from their prey's movements.

Radio waves, whose wavelengths are orders of magnitude longer than those of visible light, can better penetrate smoke and fog, and can even see through certain materials -- all capabilities beyond human vision. Yet robots have traditionally relied on a limited toolbox: they use either cameras and LiDAR, which provide detailed images but fail in challenging conditions, or traditional radar, which can see through walls and other occlusions but produces crude, low-resolution images.

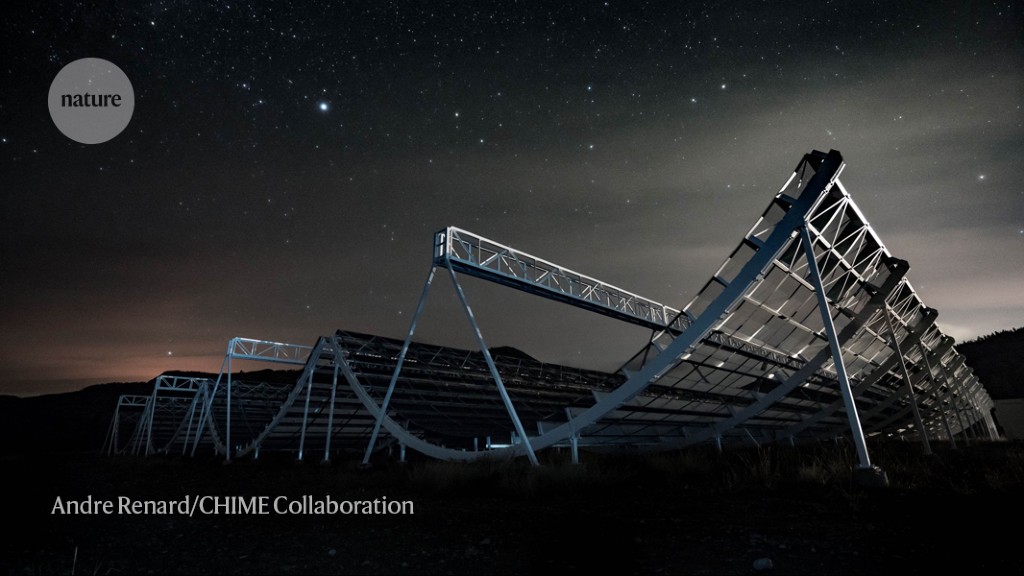

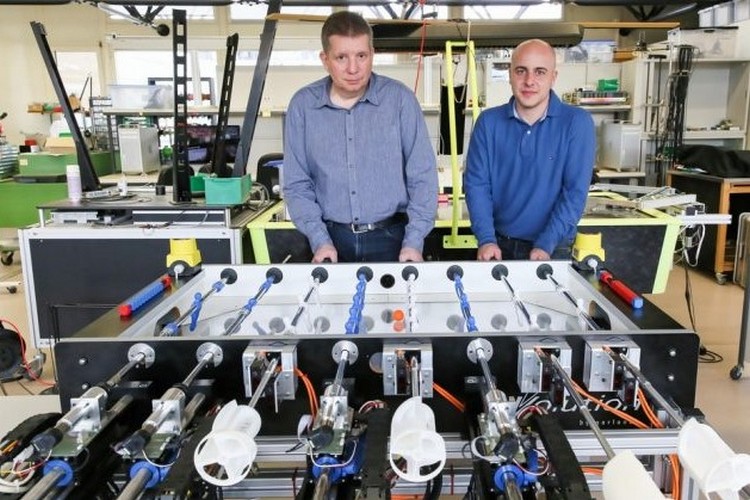

Now, researchers from the University of Pennsylvania School of Engineering and Applied Science (Penn Engineering) have developed PanoRadar, a new tool to give robots superhuman vision by transforming simple radio waves into detailed, 3D views of the environment.