Simon Willison’s Weblog

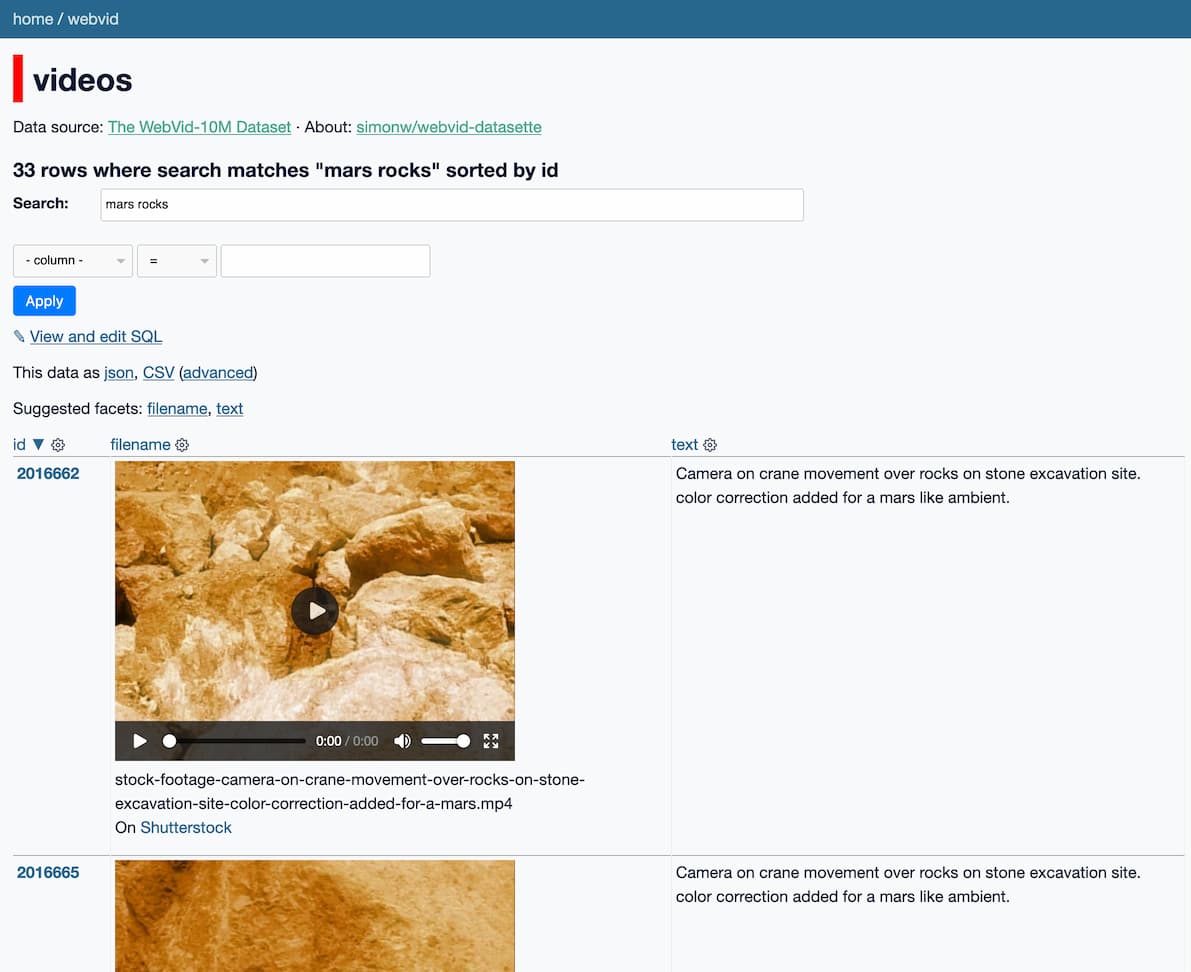

Make-A-Video is a new “state-of-the-art AI system that generates videos from text” from Meta AI. It looks incredible: it really is DALL-E / Stable Diffusion for video. And it appears to have been trained on 10 million video preview clips scraped from Shutterstock.

This is similar to the system I built with Andy Baio a few weeks ago to explore the LAION data used to train Stable Diffusion.

Datasets. To train the image models, we use a 2.3B subset of the dataset from (Schuhmann et al.) where the text is English. We filter out sample pairs with NSFW images, toxic words in the text, or images with a watermark probability larger than 0.5.

We use WebVid-10M (Bain et al., 2021) and a 10M subset from HD-VILA-100M (Xue et al., 2022) to train our video generation models. Note that only the videos (no aligned text) are used.

The decoder $\mathrm{D}^t$ and the interpolation model is trained on WebVid-10M. $\mathrm{SR}_l^t$ is trained on both WebVid-10M and HD-VILA-10M. While prior work (Hong et al., 2022; Ho et al., 2022) have collected private text-video pairs for T2V generation, we use only public datasets (and no paired text for videos). We conduct automatic evaluation on UCF-101 (Soomro et al., 2012) and MSR-VTT (Xu et al., 2016) in a zero-shot setting.
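The image-data filter described in the Datasets paragraph (English text only, no NSFW images, no toxic words, watermark probability no larger than 0.5) is simple enough to sketch in a few lines of Python. This is only my rough illustration of those stated rules, not the paper's code: the `Sample` record, its field names, the `keep` function and the `TOXIC_WORDS` list are all hypothetical placeholders, since the paper doesn't describe its schema, its word list or its tooling.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One image/text pair. Field names are assumptions, not the paper's schema."""
    url: str
    text: str
    language: str        # assumed language tag, e.g. "en"
    nsfw: bool           # assumed flag from some NSFW classifier
    p_watermark: float   # assumed watermark probability in [0, 1]


# Placeholder list; the paper does not publish the toxic words it filters on.
TOXIC_WORDS = {"badword"}

# The paper drops images with a watermark probability larger than 0.5.
WATERMARK_THRESHOLD = 0.5


def keep(sample: Sample) -> bool:
    """Return True if the pair passes every filter named in the paragraph."""
    if sample.language != "en":
        return False
    if sample.nsfw:
        return False
    # Naive whitespace tokenisation, purely for illustration.
    if set(sample.text.lower().split()) & TOXIC_WORDS:
        return False
    return sample.p_watermark <= WATERMARK_THRESHOLD


samples = [
    Sample("https://example.com/1.jpg", "a cat on a sofa", "en", False, 0.1),
    Sample("https://example.com/2.jpg", "stock photo of a beach", "en", False, 0.9),
    Sample("https://example.com/3.jpg", "eine Katze", "de", False, 0.0),
]
filtered = [s for s in samples if keep(s)]
```

Note the watermark rule in particular: anything scoring above 0.5 is dropped from the image training set, which is why only the first of the three example records survives.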