Chinese company trained GPT-4 rival with just 2,000 GPUs — 01.ai spent $3M compared to OpenAI's $80M to $100M

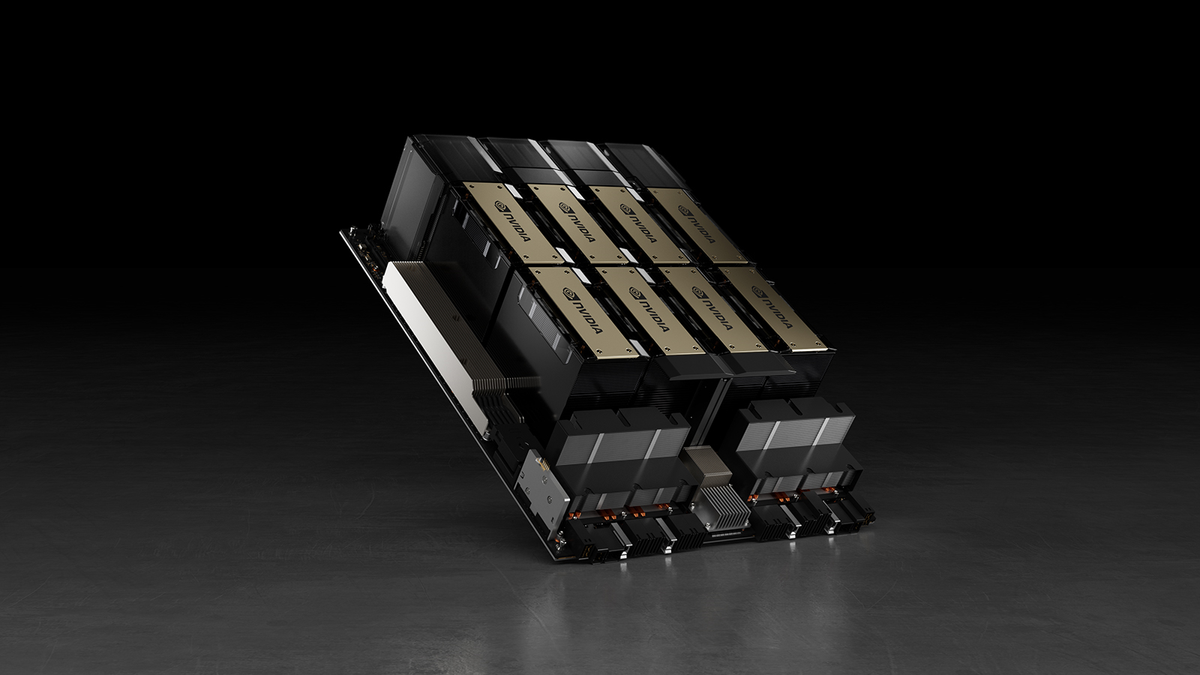

Because Chinese entities do not have access to tens of thousands of advanced AI GPUs from companies like Nvidia, Chinese companies must innovate to train their advanced AI models. Kai-Fu Lee, the founder and head of 01.ai, said this week that his company trained one of its advanced AI models on 2,000 GPUs for just $3 million.

“The thing that shocks my friends in the Silicon Valley is not just our performance, but that we trained the model with only $3 million and GPT-4 was trained with $80 to $100 million,” said Kai-Fu Lee (via @tsarnick). “GPT-5 is rumored to be trained with about a billion dollars. […] We believe in scaling law, but when you do excellent detailed engineering, it is not the case. […] As a company in China, first, we have limited access to GPUs due to the U.S. regulations [and a valuation disadvantage compared to American AI companies].”

By Kai-Fu Lee's account, 01.ai trained its high-performing model for just $3 million, whereas OpenAI spent $80 million to $100 million to train GPT-4 and is rumored to be spending about $1 billion on GPT-5. A chart on the company's website shows 01.ai's Yi-Lightning in sixth place in model performance as measured by LMSYS at UC Berkeley.
