OpenAI's o1 probably does more than just elaborate step-by-step prompting

OpenAI claims to have found a way to scale AI capabilities by scaling inference-time compute. By using more computational resources and allowing longer response times, o1 is expected to deliver better results. This would open up a new avenue for AI scaling.

While the model was trained from scratch to use the popular step-by-step reasoning method, its improved performance is likely due to additional factors.

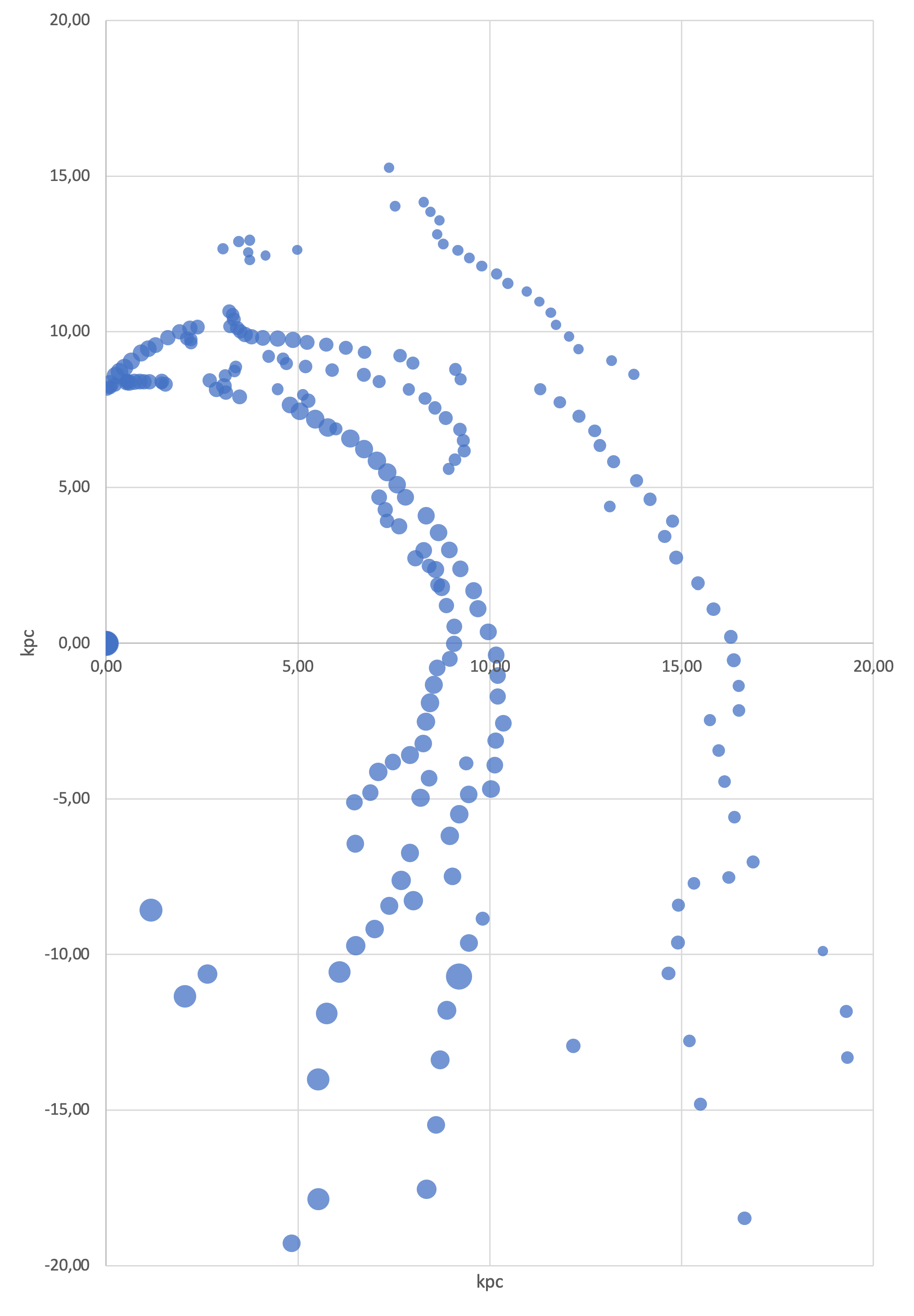

Researchers at Epoch AI recently attempted to match the performance of o1-preview on a challenging scientific multiple-choice benchmark called GPQA (Graduate-Level Google-Proof Q&A Benchmark).

They used GPT-4o with two prompting techniques, Revisions and Majority Voting, to generate a large number of tokens, comparable to the volume of o1's "thought process".
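
To make the Majority Voting idea concrete, here is a minimal Python sketch. It is not Epoch AI's code: the `sample_answer` callable is a hypothetical stand-in for one model call that returns a multiple-choice letter, and the tie-breaking rule (first answer seen wins) is an assumption made for illustration.

```python
from collections import Counter
from typing import Callable, Sequence


def majority_vote(answers: Sequence[str]) -> str:
    """Return the most frequent answer; ties go to the answer seen first."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    # Counter.most_common orders equal counts by first occurrence.
    return Counter(answers).most_common(1)[0][0]


def answer_with_voting(
    question: str,
    sample_answer: Callable[[str], str],  # hypothetical: one model call -> "A".."D"
    n_samples: int = 5,
) -> str:
    """Sample the model n_samples times and return the majority answer."""
    answers = [sample_answer(question) for _ in range(n_samples)]
    return majority_vote(answers)


if __name__ == "__main__":
    # Stub sampler with canned answers in place of a real model call.
    canned = iter(["B", "C", "B", "A", "B"])
    print(answer_with_voting("Which option is correct?", lambda q: next(canned)))
```

Each extra sample costs another full response, so the total token count grows with `n_samples`. That is the lever the researchers could turn to spend more tokens per question.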

The results showed that while generating more tokens led to slight improvements, no token budget tested brought GPT-4o close to o1-preview's performance. Even at high token counts, the accuracy of the GPT-4o variants remained significantly lower than o1-preview's.