Meta gives Llama 3 vision, now if only it had a brain

Hands on Meta has been influential in driving the development of open language models with its Llama family, but up until now, the only way to interact with them has been through text.

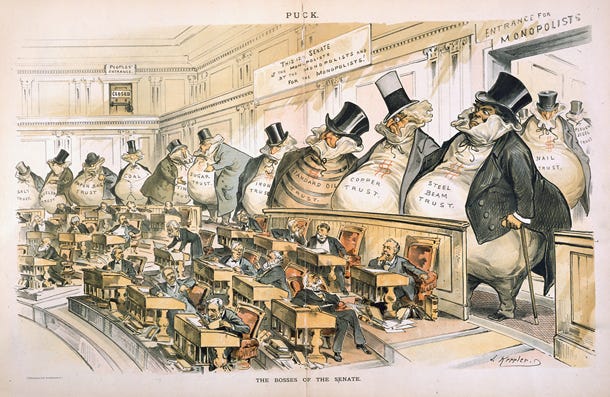

According to Meta, these models can now use a combination of images and text prompts to "deeply understand and reason on the combination." For example, the vision models could be used to generate appropriate keywords based on the contents of an image, chart, or graphics, or extract information from a PowerPoint slide.

This openly available model can be run locally, not just in the cloud, and you can ask it not only what's in a picture but also pose questions or make requests about that content.
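To make the idea of a combined image and text prompt concrete, here is a minimal sketch of how such a request might be packaged. It assumes the model sits behind a server that accepts the widely used OpenAI-style chat message layout, with the image embedded as a base64 data URL. That assumption, the function names, and the placeholder model name are ours for illustration and are not taken from Meta's documentation.

```python
import base64
import json


def build_vision_message(image_bytes: bytes, question: str,
                         mime_type: str = "image/png") -> dict:
    """Package one image and one text question as a single user message.

    Assumption: the serving layer accepts OpenAI-style "content parts",
    with the image passed inline as a base64 data URL.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


def build_request(model_name: str, message: dict, max_tokens: int = 256) -> str:
    """Serialize a chat request body; model_name is a hypothetical placeholder."""
    payload = {
        "model": model_name,
        "messages": [message],
        "max_tokens": max_tokens,
    }
    return json.dumps(payload)
```

In use, you would read an image file's bytes, pass them to `build_vision_message` along with a question such as "What is in this picture?", and send the string returned by `build_request` to whatever endpoint your local or hosted server exposes.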

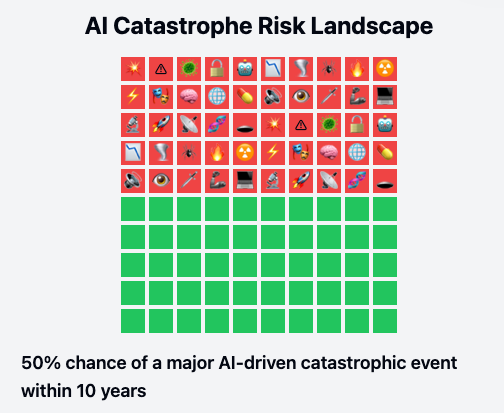

That said, our testing found that, just like the Scarecrow in The Wizard of Oz, what this model could really use is a brain.

Meta's vision models are available in 11-billion and 90-billion-parameter variants, and have been trained on a large corpus of image and text pairs.