Nvidia's MLPerf submission shows B200 offers up to 2.2x training performance of H100

Analysis Nvidia offered the first look at how its upcoming Blackwell accelerators stack up against the venerable H100 in real-world training workloads, claiming up to 2.2x higher performance.

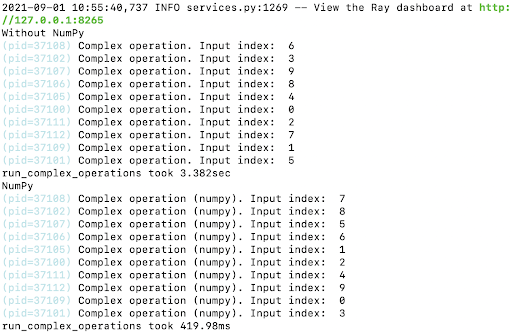

The benchmarks, released as part of this week's MLPerf results, are in line with what we expected from Blackwell at this stage. The DGX B200 systems, which power Nvidia's Nyx supercomputer, boast about 2.27x the peak floating-point performance of last gen's H100 systems across FP8, FP16, BF16, and TF32 precisions.

And this is borne out in the results. Against the H100, the B200 managed 2.2x higher performance when fine-tuning Llama 2 70B and twice the performance when pre-training GPT-3 175B.
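To put those numbers side by side, the back-of-envelope sketch below divides each measured speedup by the roughly 2.27x peak-FLOPS ratio quoted above. The result shows how much of the theoretical compute uplift turned up in the benchmarks. It assumes a single, uniform 2.27x peak ratio for both workloads and ignores memory bandwidth, interconnect, and software effects. The helper name `fraction_of_peak_uplift` is hypothetical and used only for illustration.

```python
# Figures taken from the MLPerf comparison above (B200 vs H100).
# Assumption: one uniform peak-FLOPS ratio applies to both workloads.
PEAK_FLOPS_RATIO = 2.27

measured_speedups = {
    "Llama 2 70B fine-tuning": 2.2,
    "GPT-3 175B pre-training": 2.0,
}


def fraction_of_peak_uplift(measured: float, peak: float = PEAK_FLOPS_RATIO) -> float:
    """Hypothetical helper: share of the theoretical compute uplift seen in practice."""
    return measured / peak


for workload, speedup in measured_speedups.items():
    share = fraction_of_peak_uplift(speedup)
    # Prints the measured speedup and what fraction of the peak ratio it represents.
    print(f"{workload}: {speedup:.1f}x measured, {share:.0%} of the {PEAK_FLOPS_RATIO}x peak ratio")
```

By this crude measure, the Llama 2 fine-tuning run captures about 97 percent of the peak uplift and the GPT-3 pre-training run about 88 percent. Real training throughput depends on far more than peak FLOPS, so treat these as rough indicators only.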

However, raw FLOPS aren't the whole story. According to Nvidia, Blackwell's substantially higher memory bandwidth, up to 8 TBps on the flagship parts, also played a role.

"Taking advantage of higher-bandwidth HBM3e memory, just 64 Blackwell GPUs were run in the GPT-3 LLM benchmark without compromising per-GPU performance. The same benchmark run using Hopper needed 256 GPUs to achieve the same performance," the acceleration champ explained in a blog post.