
Report: Israel used AI to identify bombing targets in Gaza

By Gaby Del Valle, a policy reporter. Her past work has focused on immigration politics, border surveillance technologies, and the rise of the New Right.

Israel’s military has been using artificial intelligence to help choose its bombing targets in Gaza, sacrificing accuracy in favor of speed and killing thousands of civilians in the process, according to an investigation by Israel-based publications +972 Magazine and Local Call.

The system, called Lavender, was developed in the aftermath of Hamas’ October 7th attacks, the report claims. At its peak, Lavender marked 37,000 Palestinians in Gaza as suspected “Hamas militants” and authorized their assassinations.

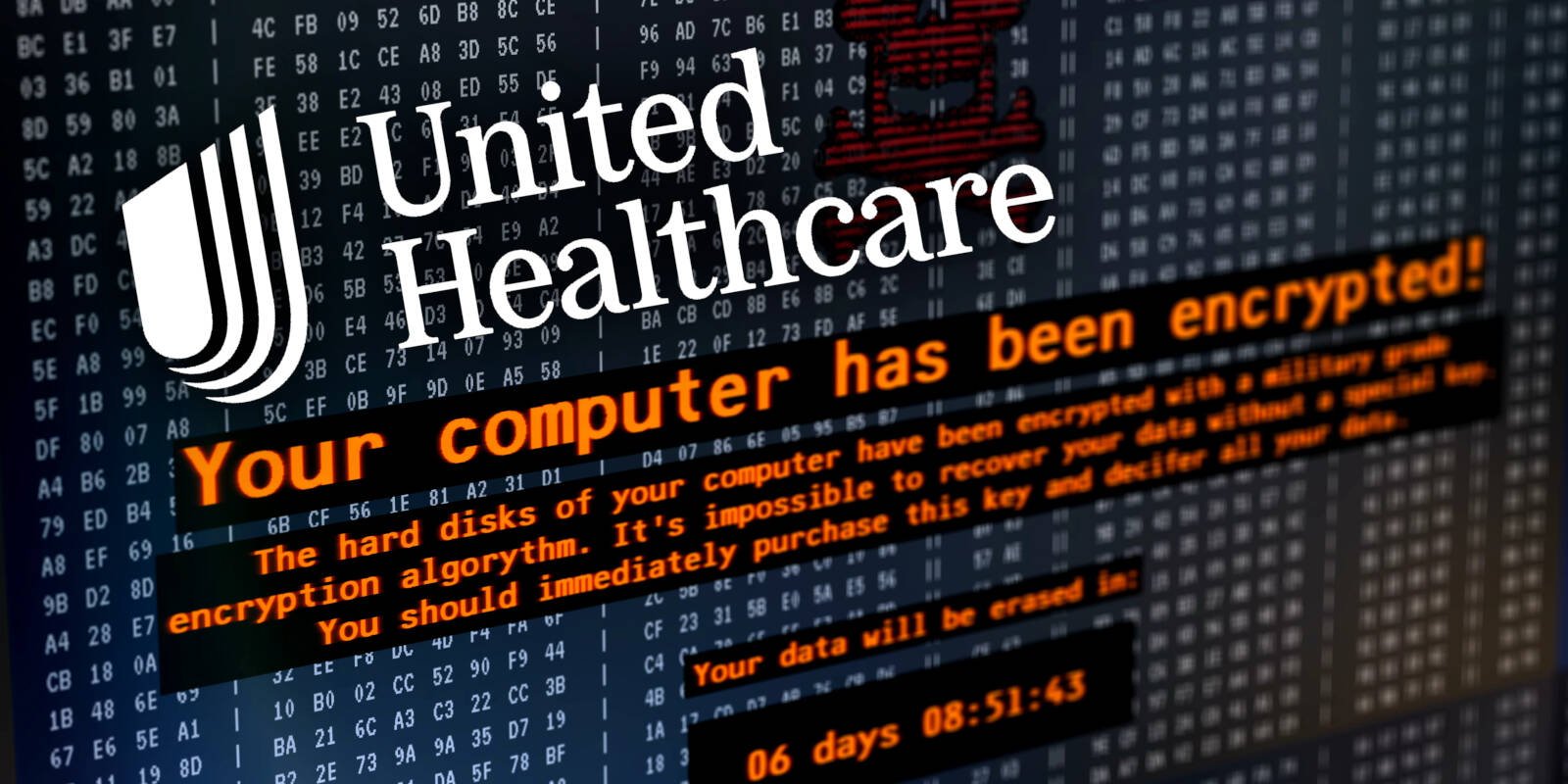

Israel’s military denied the existence of such a kill list in a statement to +972 and Local Call. A spokesperson told CNN that AI was not being used to identify suspected terrorists but did not dispute the existence of the Lavender system, which the spokesperson described as “merely tools for analysts in the target identification process.” Analysts “must conduct independent examinations, in which they verify that the identified targets meet the relevant definitions in accordance with international law and additional restrictions stipulated in IDF directives,” the spokesperson told CNN. The Israel Defense Forces did not immediately respond to The Verge’s request for comment.

In interviews with +972 and Local Call, however, Israeli intelligence officers said they weren’t required to conduct independent examinations of the Lavender targets before bombing them but instead effectively served as “a ‘rubber stamp’ for the machine’s decisions.” In some instances, officers’ only role in the process was determining whether a target was male.