AI Is Like … Nuclear Weapons?

The concern, as Edward Teller saw it, was quite literally the end of the world. He had run the calculations, and there was a real possibility, he told his Manhattan Project colleagues in 1942, that when they detonated the world’s first nuclear bomb, the blast would set off a chain reaction. The atmosphere would ignite. All life on Earth would be incinerated. Some of Teller’s colleagues dismissed the idea, but others didn’t. If there were even a slight possibility of atmospheric ignition, said Arthur Compton, the director of a Manhattan Project lab in Chicago, all work on the bomb should halt. “Better to accept the slavery of the Nazi,” he later wrote, “than to run a chance of drawing the final curtain on mankind.”

I offer this story as an analogy for—or perhaps a contrast to—our present AI moment. In just a few months, the novelty of ChatGPT has given way to utter mania. Suddenly, AI is everywhere. Is this the beginning of a new misinformation crisis? A new intellectual-property crisis? The end of the college essay? Of white-collar work? Some worry, as Compton did 80 years ago, for the very future of humanity, and have advocated pausing or slowing down AI development; others say it’s already too late.

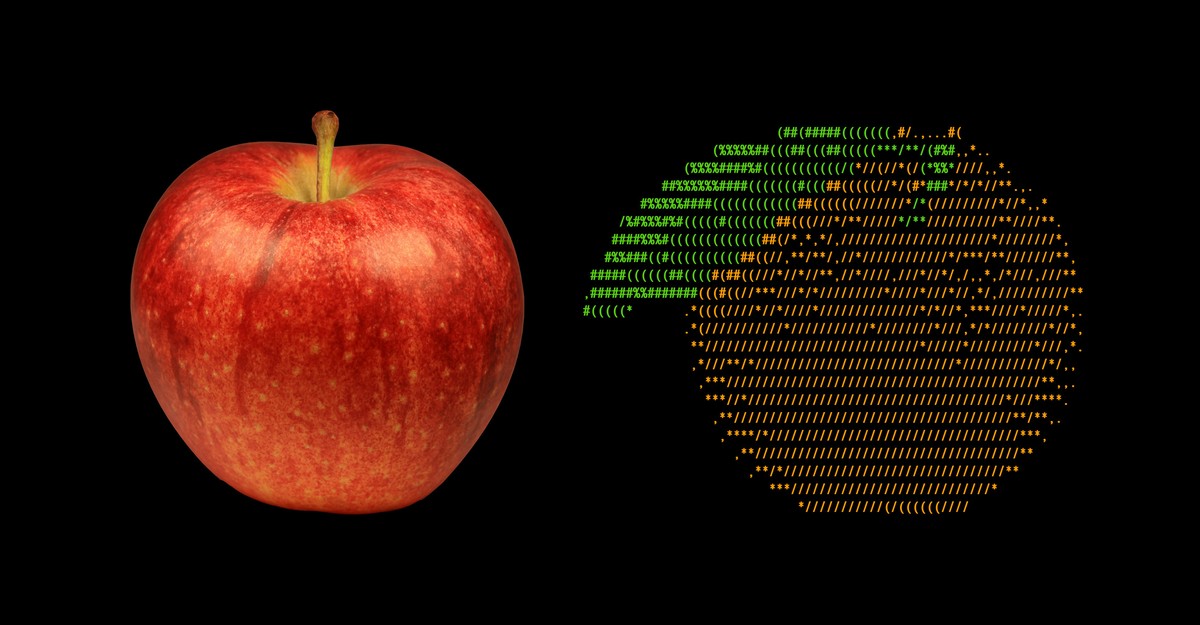

In the face of such excitement and uncertainty and fear, the best one can do is try to find a good analogy—some way to make this unfamiliar new technology a little more familiar. AI is fire. AI is steroids. AI is an alien toddler. (When I asked for an analogy of its own, GPT-4 suggested Pandora’s box—not terribly reassuring.) Some of these analogies are, to put it mildly, better than others. A few of them are even useful.