She didn’t get an apartment because of an AI-generated score – and sued to help others avoid the same fate

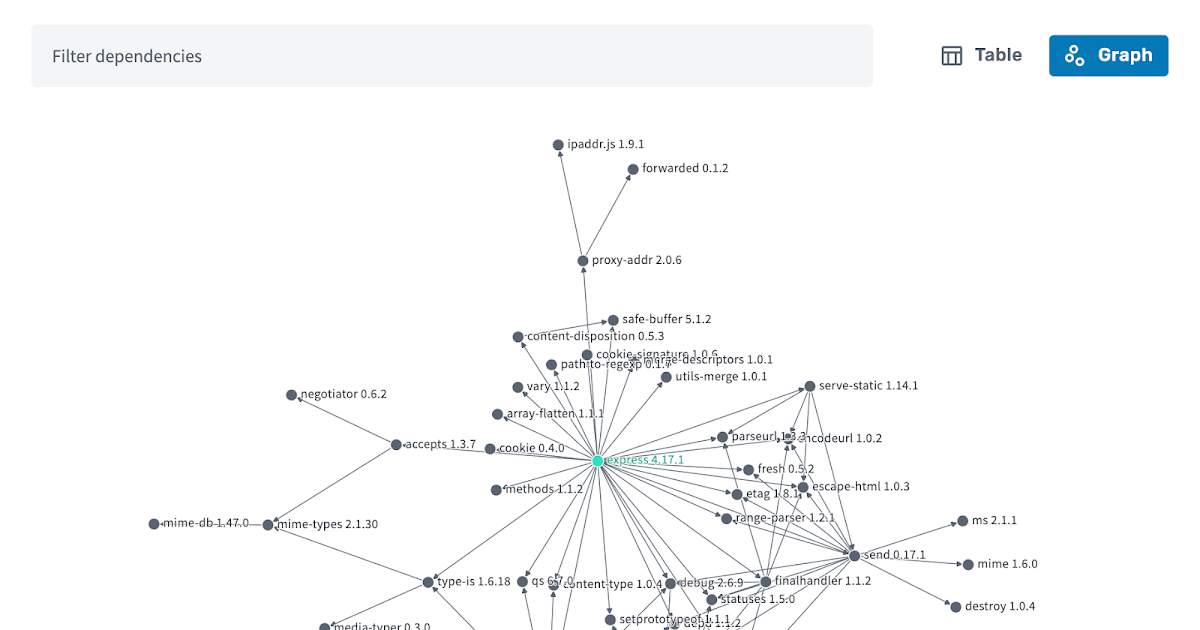

Three hundred twenty-four. That was the score Mary Louis was given by an AI-powered tenant screening tool. The software, SafeRent, didn’t explain in its 11-page report how the score was calculated or how it weighed various factors. It didn’t say what the score actually signified. It just displayed Louis’s number and determined it was too low. In a box next to the result, the report read: “Score recommendation: DECLINE”.

Louis, who works as a security guard, had applied for an apartment in an eastern Massachusetts suburb. At the time she toured the unit, the management company said she shouldn’t have a problem having her application accepted. Though she had a low credit score and some credit card debt, she had a stellar reference from her landlord of 17 years, who said she consistently paid her rent on time. She would also be using a voucher for low-income renters, guaranteeing the management company would receive at least some portion of the monthly rent in government payments. Her son, also named on the voucher, had a high credit score, indicating he could serve as a backstop against missed payments.

But in May 2021, more than two months after she applied for the apartment, the management company emailed Louis to let her know that a computer program had rejected her application. She needed to have a score of at least 443 for her application to be accepted. There was no further explanation and no way to appeal the decision.