Prompt engineering: building prompts from business cases

As you know, one of the features of my news reader is automatic tag generation using LLMs. That's why I periodically do prompt engineering: I want tags to be better and monthly bills to be lower.

So, I fine-tuned the prompts to the point where everything seemed to work, but I still had a nagging feeling that something was off: correct tags were detected well, but alongside them the model produced many useless tags, and sometimes even completely wrong ones.

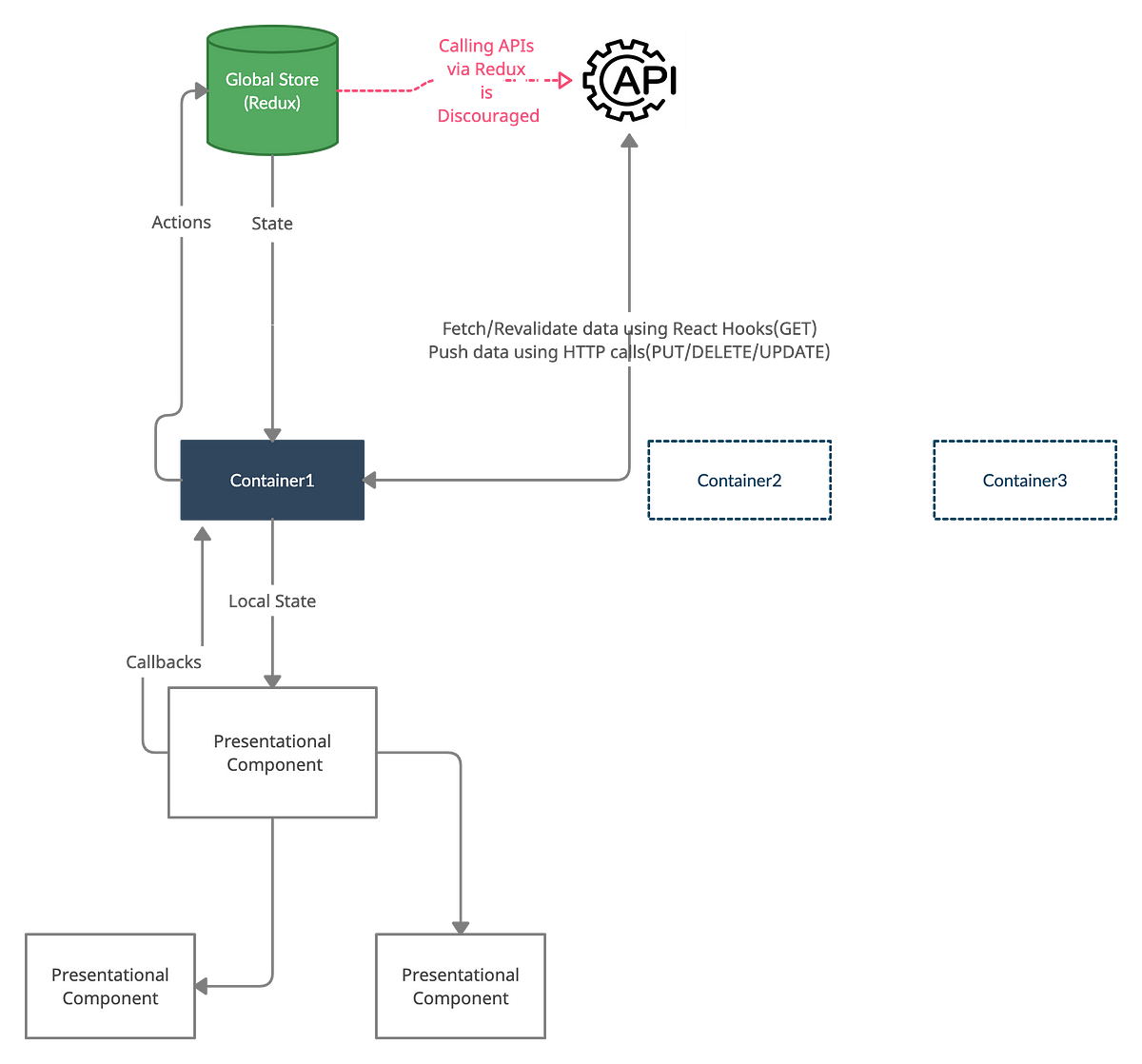

Options 1 and 2 were out of the question due to lack of time and money. Also, my current strategy is to rely exclusively on ready-made AI solutions since keeping up with the industry alone is impossible. So, I had no choice but to go with the third option.

Progress was slow, but after a recent post about generative knowledge bases, something clicked in my head and the problem turned inside out. Over the course of a morning, I drafted a new prompt that has been performing significantly better so far.

Both problems hurt the news sorting rules. In the first case, unnecessary rules were triggered; in the second, necessary ones weren't. The result was the same: lower-quality news sorting, and therefore a worse user experience.
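To make the failure mode concrete, here is a minimal sketch of how tag errors turn into rule errors. It assumes, hypothetically, that a sorting rule fires when a news item carries all of the rule's required tags; the names `Rule`, `fired_rules`, and `rule_errors` are illustrative and are not the news reader's actual implementation. A wrong extra tag makes an unwanted rule fire, and a missing tag keeps a needed rule from firing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical sorting rule: fires when an item has all required tags."""
    name: str
    required_tags: frozenset

    def matches(self, tags: set) -> bool:
        # Subset check: every required tag must be present on the item.
        return self.required_tags <= tags


def fired_rules(rules: list, tags: set) -> set:
    """Names of the rules that fire for a given set of tags."""
    return {rule.name for rule in rules if rule.matches(tags)}


def rule_errors(rules: list, predicted_tags: set, true_tags: set):
    """Compare rules fired by LLM tags against rules the correct tags would fire.

    Returns (unexpected, missed):
      unexpected - rules triggered only because of wrong or useless tags
      missed     - rules that should have fired but did not
    """
    fired = fired_rules(rules, predicted_tags)
    expected = fired_rules(rules, true_tags)
    return fired - expected, expected - fired


rules = [
    Rule("hide-sports", frozenset({"sports"})),
    Rule("highlight-ai-research", frozenset({"ai", "research"})),
]
true_tags = {"ai", "research"}
predicted_tags = {"ai", "sports"}  # "sports" is wrong, "research" is missing

unexpected, missed = rule_errors(rules, predicted_tags, true_tags)
print(unexpected)  # {'hide-sports'}
print(missed)      # {'highlight-ai-research'}
```

Both kinds of tag errors degrade sorting in the same way from the user's point of view, which is why a prompt that only gets the correct tags right is not enough.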
