Distilling Knowledge in Neural Networks

I absolutely agree! However, deploying an ensemble of heavyweight models is not always feasible. Sometimes even a single model is so large (GPT-3, for example) that deploying it in a resource-constrained environment is not possible. This is why we have been going over model optimization recipes such as quantization and pruning. This report is the last one in that series, and it covers a compelling model optimization technique: knowledge distillation.

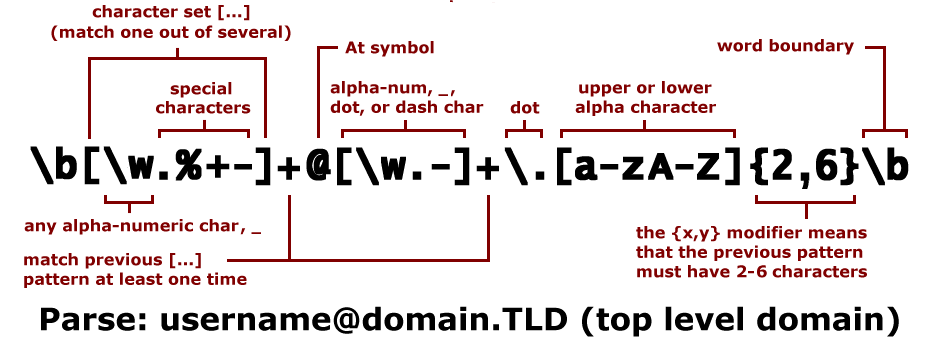

When working on a classification problem, it is very typical to use softmax as the last activation unit in your neural network. Why is that? Because a softmax function takes a set of logits and spits out a probability distribution over the discrete classes your network is being trained on. Figure 1 presents an example of this.
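To make the logits-to-probabilities step concrete, here is a minimal sketch in plain Python. The `softmax` helper and the two logit values are illustrative assumptions; they are not taken from the network in Figure 1.

```python
import math

def softmax(logits):
    """Map a list of logits to probabilities that sum to 1."""
    # Subtracting the largest logit keeps exp() from overflowing;
    # it does not change the resulting probabilities.
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for two classes: "one" and "seven".
logits = [4.0, 1.0]
probs = softmax(logits)
print(probs)
```

With these made-up logits, the first class gets roughly 0.95 of the probability mass and the second roughly 0.05, so the smaller logit still leaves a visible trace in the output.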

In Figure 1, our imaginary neural network is highly confident that the given image is a $1$. However, it also thinks there is a slight chance it could be a $7$. That is quite reasonable, isn’t it? The given image does have subtle seven-ish characteristics. This information would not have been available if we were only dealing with hard one-hot encoded labels like [1, 0] (where 1 and 0 are the probabilities of the image being a one and a seven, respectively).
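The difference between the two kinds of labels can be sketched in a few lines of Python. The soft probabilities below are made-up values chosen to mimic the situation described above, and `to_one_hot` is a hypothetical helper written for this illustration.

```python
def to_one_hot(probs):
    """Keep only the most likely class and discard everything else."""
    top = max(range(len(probs)), key=lambda i: probs[i])
    return [1 if i == top else 0 for i in range(len(probs))]

# Hypothetical soft output: mostly "one", with a slight chance of "seven".
soft_label = [0.95, 0.05]
hard_label = to_one_hot(soft_label)

print(soft_label)  # retains the hint that the digit looks a bit like a seven
print(hard_label)  # the same prediction once that hint is thrown away
```

Collapsing the soft output to a one-hot vector keeps the predicted class but loses the relative similarity between classes, which is exactly the extra information discussed above.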