How Systems Complexity Reduces Uptime

The pace of change in IT is unlike any other discipline. Even dog years are long by comparison. It’s an environment that makes us so primed for what comes next that we often don’t pause to ask why. This is very much the case with cloud computing, where cloud is the sine qua non of any CIO’s strategy and leads to vague approaches such as ‘Cloud First’.

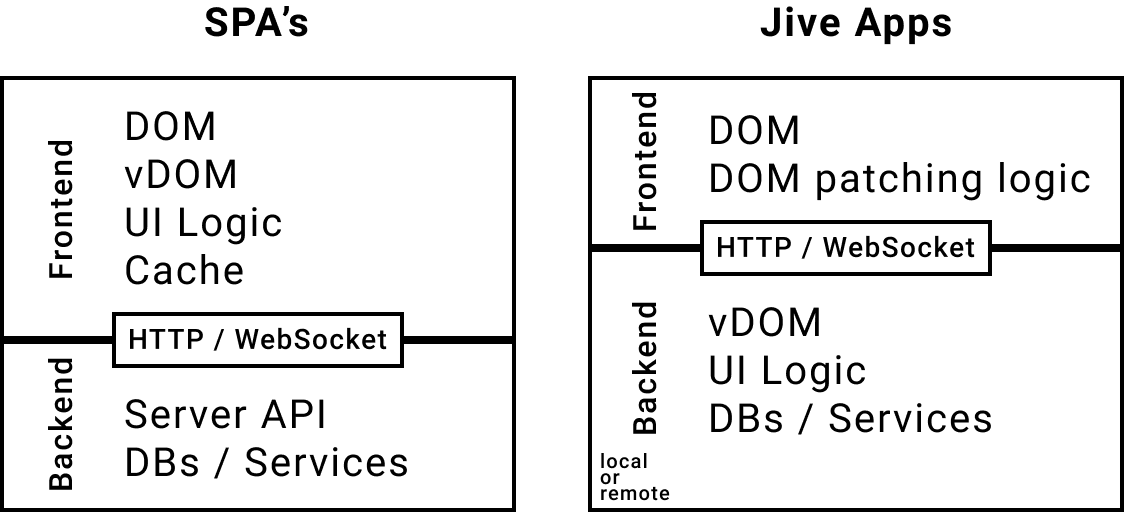

This phenomenon also applies to application architecture, where microservices and composability are all the rage. The underlying rationale makes sense: break complex applications into function-specific components and assemble the pieces you need. After all, this is what software engineers do; they create and implement libraries of functionality that can be assembled in almost limitless ways. The result is an integrated collection of components that is more elegant than the monolithic application it replaces.

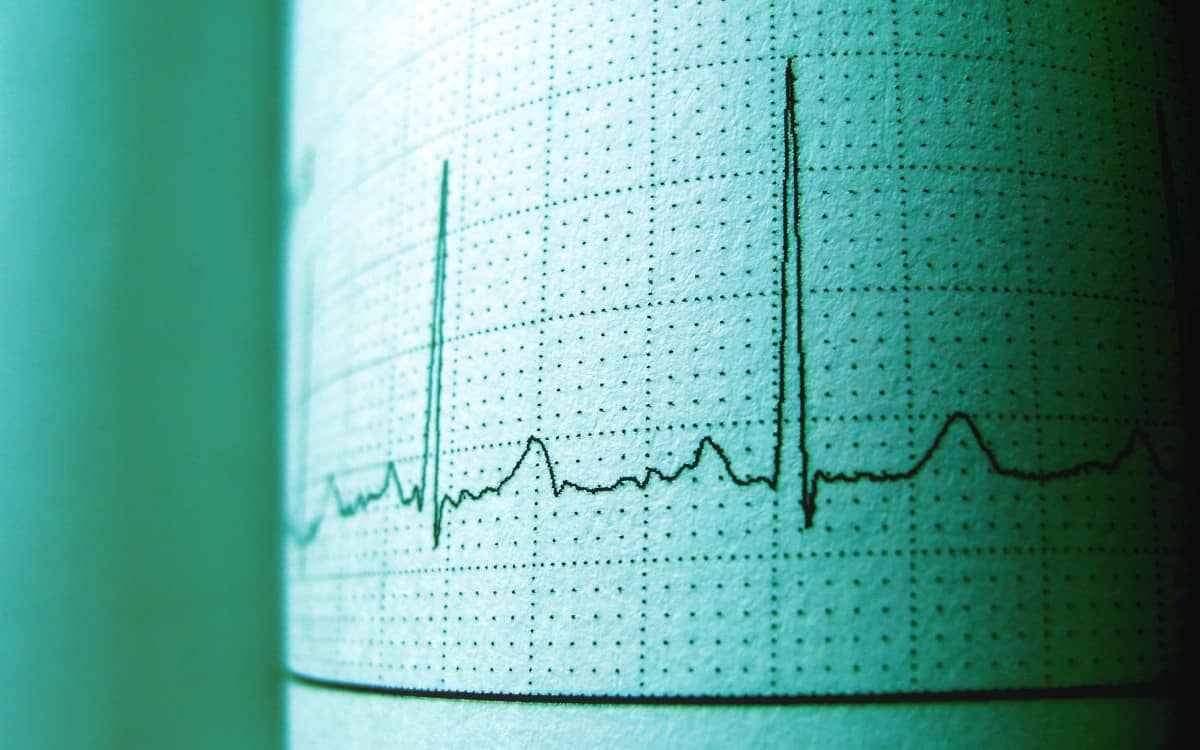

Technical elegance, though, isn’t always better. To illustrate why, we need to turn to probability, and not the complex stuff like Bayesian decision theory or even Poisson distributions. I’m talking about availability, which in IT means the percentage of time a system is able to perform its function. A system that is 99.9% (‘three-nines’) available can perform its function all but 0.1 per cent of the time. Pretty good, right? Of course, the answer to that question is: it depends. Some applications are fine with three-nines. For others, four-nines, five-nines, and even higher are more appropriate. How would you feel, for instance, about getting on an airplane if there were a 1-in-1000 chance of “downtime”?
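To make those percentages more tangible, here is a minimal sketch that converts an availability figure into expected downtime per year. The function name `downtime_hours_per_year` and the 365-day year are assumptions made for illustration; they are not part of any standard or library.

```python
# Hours in a non-leap year; an assumption chosen for this illustration.
HOURS_PER_YEAR = 365 * 24


def downtime_hours_per_year(availability_pct: float) -> float:
    """Return the expected hours per year a system is unavailable."""
    if not 0.0 <= availability_pct <= 100.0:
        raise ValueError("availability must be between 0 and 100 per cent")
    # The unavailable fraction is whatever is left over after availability.
    return (1.0 - availability_pct / 100.0) * HOURS_PER_YEAR


# Compare the 'nines' mentioned in the text.
for label, pct in [("three-nines", 99.9), ("four-nines", 99.99), ("five-nines", 99.999)]:
    hours = downtime_hours_per_year(pct)
    print(f"{label} ({pct}%): {hours:.2f} hours, or {hours * 60:.1f} minutes, per year")
```

Under these assumptions, three-nines works out to roughly 8.76 hours of downtime a year, while five-nines leaves only a little over five minutes.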