Taming "Lazy" ChatGPT: Strategies for Comprehensive and Reliable Outputs

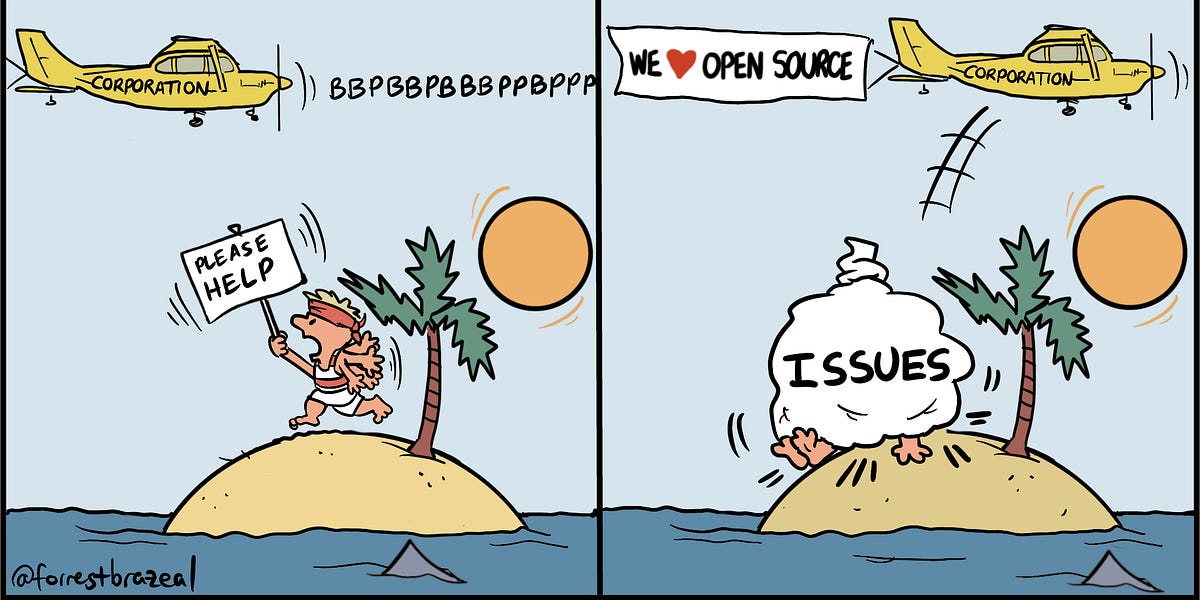

You're a developer or technical professional who relies on AI language models like GPT-4 to assist with coding tasks, research, and analysis. But lately, you've been noticing a frustrating trend: the AI seems to be getting lazier and less reliable. It provides truncated code snippets with placeholders instead of complete implementations. It omits crucial details or forgets entire sections of the examples you provide. It confuses functions and parameters, invents nonexistent properties, and makes silly mistakes that leave you spending more time fixing its output than you would have spent doing the work yourself.

You've tried everything: tweaking the temperature and top-p sampling settings, writing verbose prompts with explicit instructions, even lecturing the AI sternly. But no matter what you do, it keeps dropping the ball, leaving you with half-baked, inconsistent responses that require heavy reworking. The whole point of leveraging AI was to save time and reduce your workload, but now you're spending more effort babysitting and course-correcting the model than you save by using it.

The inconsistency is maddening. One moment the AI will blow you away with a brilliant, thorough implementation. The next, it will choke on a seemingly simpler task, producing an incomplete, low-effort output filled with head-scratching omissions and errors. You've started questioning whether the latest AI models are actually a step backward in coding reliability.