Reasoning through arguments against taking AI safety seriously

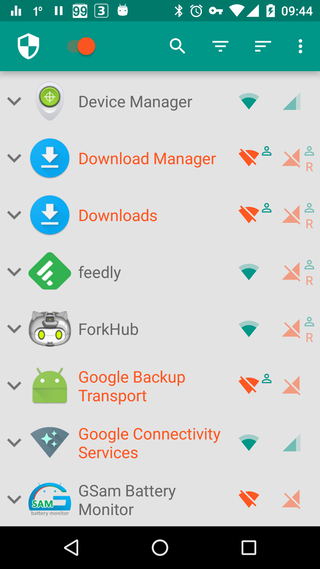

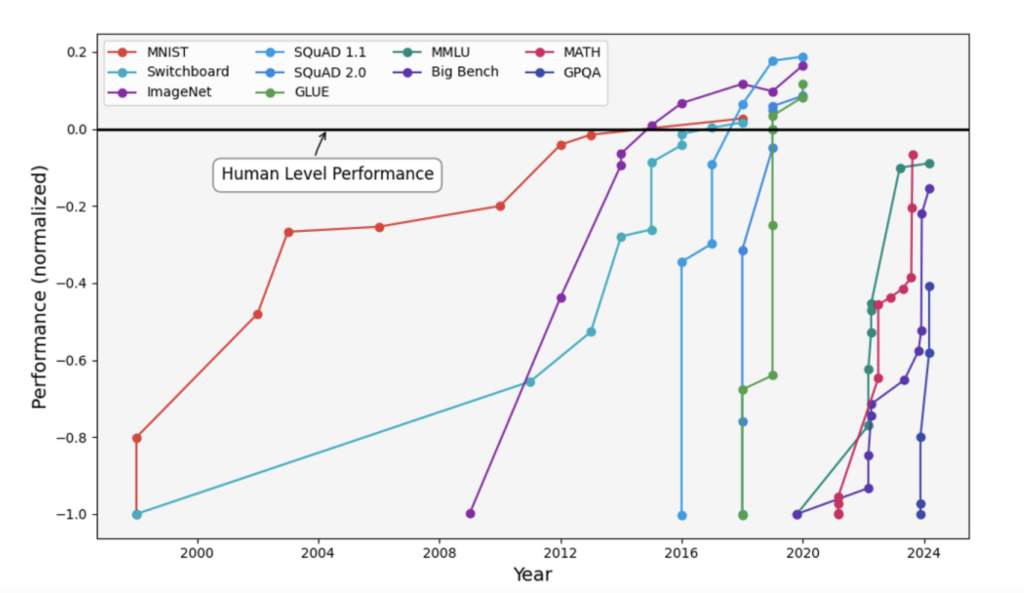

About a year ago, a few months after I publicly took a stand with many other peers to warn the public of the dangers related to the unprecedented capabilities of powerful AI systems, I published a blog post titled “FAQ on Catastrophic AI Risks” as a follow-up to my earlier post about rogue AIs, in which I began discussing why AI safety should be taken seriously. In the meantime, I participated in numerous debates, including many with my friend Yann LeCun, whose views on some of these issues are very different from mine. I also learned a lot more about AI safety, about how different groups of people think about this question, about the diversity of views on regulation, and about the efforts of powerful lobbies against it. The issue is so hotly debated because the stakes are enormous: according to some estimates, quadrillions of dollars of net present value are up for grabs, not to mention political power great enough to significantly disrupt the current world order. I also published a paper on multilateral governance of AGI labs and spent a lot of time thinking about catastrophic AI risks and their mitigation, on the technical side as well as on the governance and political side. For the last seven months, I have been chairing the International Scientific Report on the Safety of Advanced AI (“the report”, below), which involves a panel of representatives from 30 countries plus the EU and UN, and over 70 international experts, to synthesize the state of the science in AI safety and which illustrates the broad diversity of views about AI risks and trends. Today, after an intense year of wrestling with these critical issues, I would like to revisit arguments made about the potential for catastrophic risks associated with AI systems anticipated in the future, and share my latest thinking.

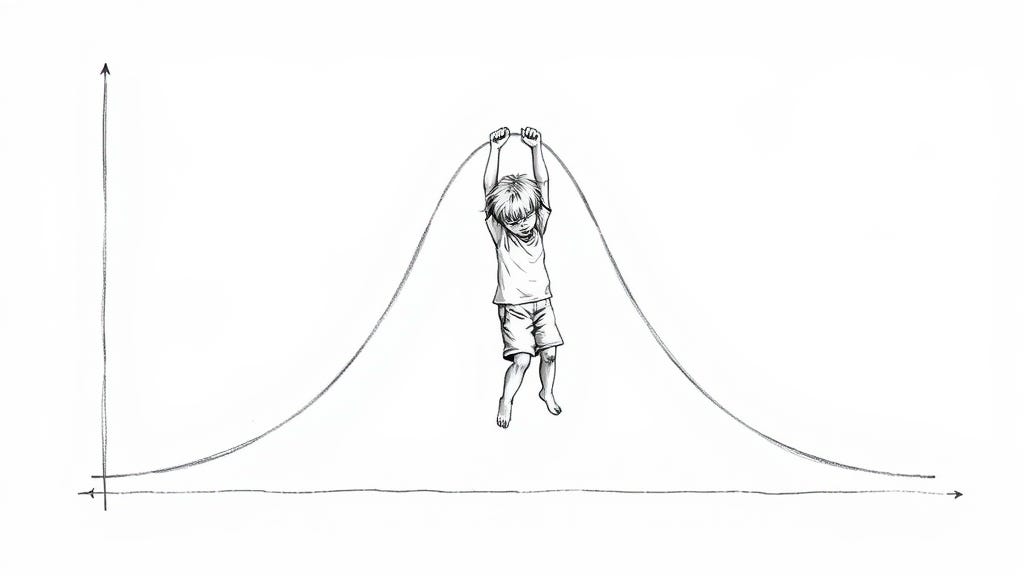

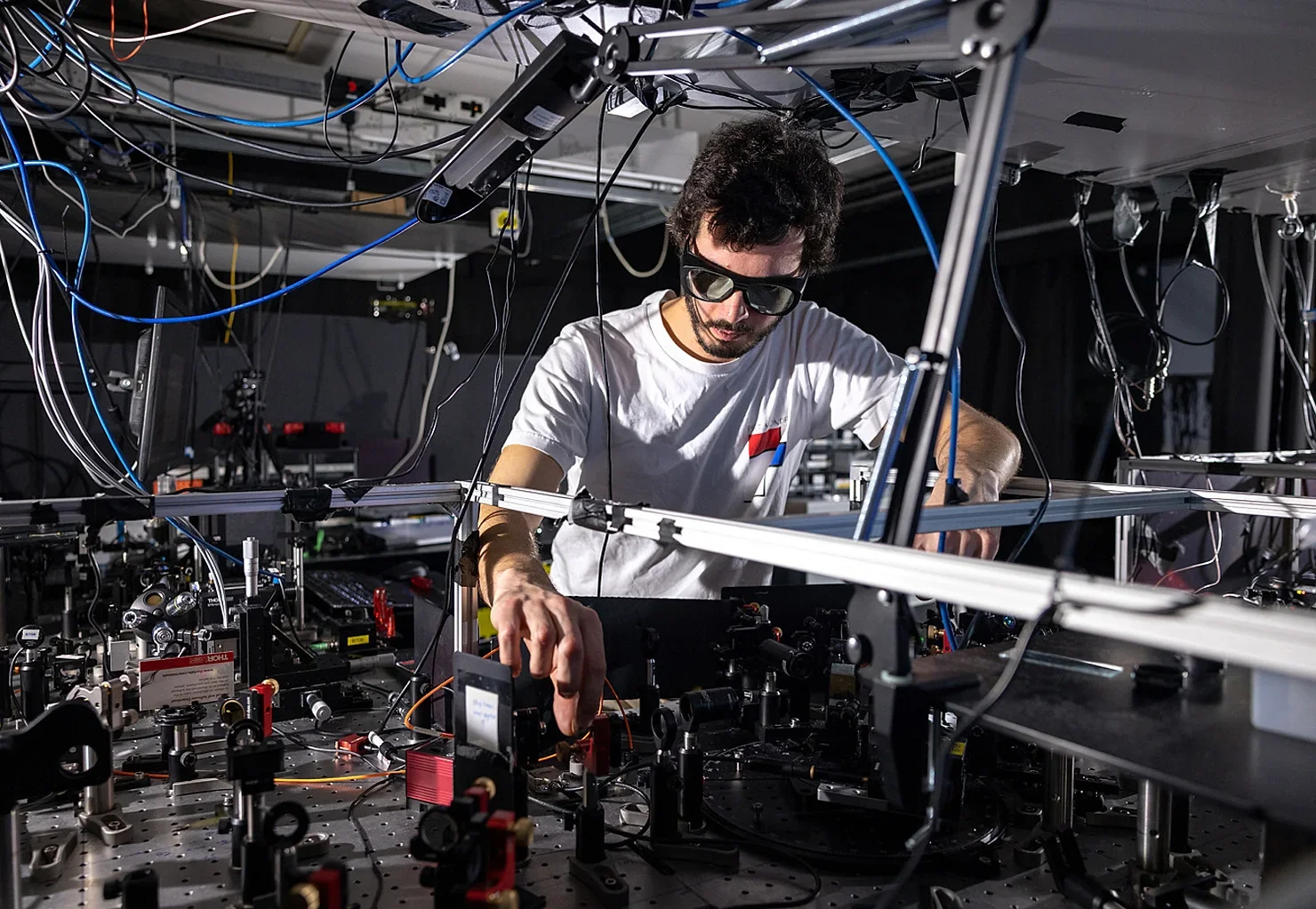

There are many risks associated with the race among several private companies and other entities towards human-level AI (a.k.a. AGI) and beyond (a.k.a. ASI, for Artificial Superintelligence). Please see “the report” for a broad coverage of risks ranging from current human rights issues to threats to privacy, democracy and copyright, concerns about the concentration of economic and political power, and, of course, dangerous misuse. Although experts may disagree on the probability of various outcomes, we can generally agree that some major risks, like the extinction of humanity, would be so catastrophic if they happened that they require special attention, if only to make sure that their probability is infinitesimal. Other risks, like severe threats to democracies and human rights, also deserve much more attention than they are currently getting. The most important thing to realize, through all the noise of discussions and debates, is a very simple and indisputable fact: while we are racing towards AGI or even ASI, nobody currently knows how such an AGI or ASI could be made to behave morally, or at least to behave as intended by its developers and not turn against humans. It may be difficult to imagine, but just picture this scenario for one moment:
