Reducing Python String Memory Use in Apache Arrow 0.12

Python users who upgrade to recently released pyarrow 0.12 may find that their applications use significantly less memory when converting Arrow string data to pandas format. This includes using pyarrow.parquet.read_table and pandas.read_parquet. This article details some of what is going on under the hood, and why Python applications dealing with large amounts of strings are prone to memory use problems.

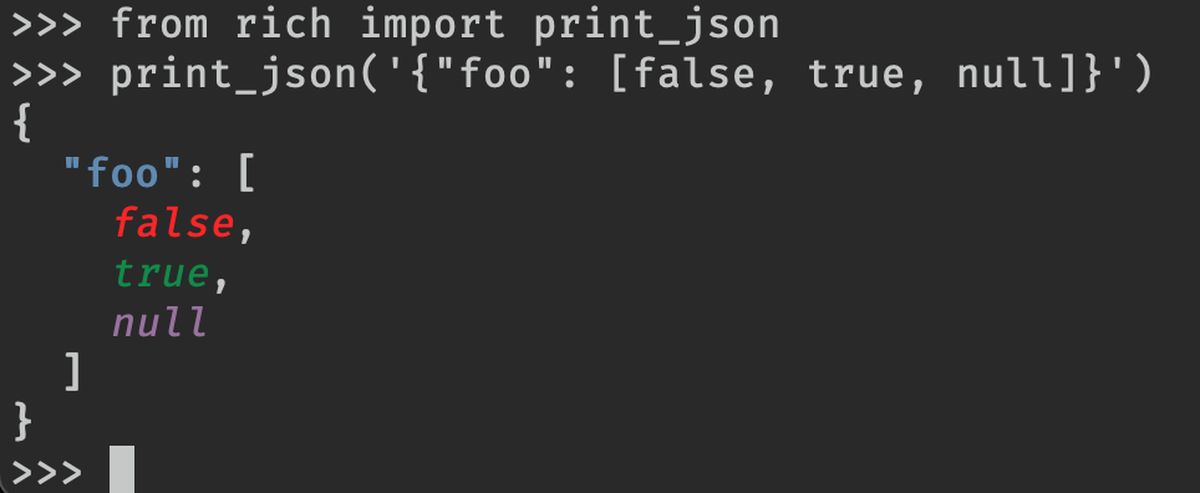

Let’s start with some possibly surprising facts. I’m going to create an empty bytes object and an empty str (unicode) object in Python 3.7:

The sys.getsizeof function accurately reports the number of bytes used by built-in Python objects. You might be surprised to find that:

Since strings in Python are nul-terminated, we can infer that a bytes object has 32 bytes of overhead while unicode has 48 bytes. One must also account for PyObject* pointer references to the objects, so the actual overhead is 40 and 56 bytes, respectively. With large strings and text, this overhead may not matter much, but when you have a lot of small strings, such as those arising from reading a CSV or Apache Parquet file, they can take up an unexpected amount of memory. pandas represents strings in NumPy arrays of PyObject* pointers, so the total memory used by a unique unicode string is

On disk this file would take approximately 10MB. Read into memory, however, it could take up over 60MB, as a 10 character string object takes up 67 bytes in a pandas.Series.