AlphaGo Moment for Model Architecture Discovery - ArXivIQ

Authors: Yixiu Liu, Yang Nan, Weixian Xu, Xiangkun Hu, Lyumanshan Ye, Zhen Qin, Pengfei Liu

Paper: https://arxiv.org/abs/2507.18074

Code: https://github.com/GAIR-NLP/ASI-Arch

Model: https://gair-nlp.github.io/ASI-Arch

Researchers have developed ASI-ARCH, a fully autonomous AI system that the authors present as the first demonstration of "Artificial Superintelligence for AI research" (ASI4AI). The system goes beyond traditional Neural Architecture Search (NAS) by letting AI conduct end-to-end scientific research: it autonomously hypothesizes novel architectural concepts, implements them as code, and validates them empirically through experimentation.
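The hypothesize, implement, validate cycle described above can be pictured as a simple loop. The Python below is only a toy sketch under stated assumptions: `Candidate`, `hypothesize`, `implement`, `validate`, and `research_loop` are hypothetical names, the "architecture" is reduced to a single integer width, and the search strategy is plain greedy hill climbing on a made-up objective. It is not ASI-ARCH's implementation, which writes real architecture code and validates it through actual experiments.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


# Hypothetical record for one research attempt (not an ASI-ARCH type).
@dataclass
class Candidate:
    hypothesis: str          # toy natural-language description of the idea
    params: dict             # toy "design" the idea maps to
    score: float = float("nan")


def hypothesize(rng: random.Random, archive: List[Candidate]) -> Candidate:
    """Propose a new idea by mutating the best design found so far (greedy hill climbing)."""
    if archive:
        parent = max(archive, key=lambda c: c.score)
        width = max(1, parent.params["width"] + rng.choice([-8, 8]))
    else:
        width = rng.choice([16, 32, 64])
    return Candidate(f"try width={width}", {"width": width})


def implement(c: Candidate) -> Callable[[], float]:
    """Turn the idea into something executable (here: a toy objective)."""
    w = c.params["width"]
    return lambda: -abs(w - 48) / 48.0  # assumption: 48 is the hidden optimum


def validate(run: Callable[[], float]) -> float:
    """Run the 'experiment' and return a scalar quality score (higher is better)."""
    return run()


def research_loop(steps: int, seed: int = 0) -> List[Candidate]:
    """Repeat hypothesize -> implement -> validate, keeping every result in an archive."""
    rng = random.Random(seed)
    archive: List[Candidate] = []
    for _ in range(steps):
        cand = hypothesize(rng, archive)
        cand.score = validate(implement(cand))
        archive.append(cand)
    return archive


archive = research_loop(20)
best = max(archive, key=lambda c: c.score)
print(best.hypothesis, round(best.score, 3))
```

The archive matters in this sketch because each new hypothesis is conditioned on past results; swapping the greedy proposer for a smarter one changes `hypothesize` only.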

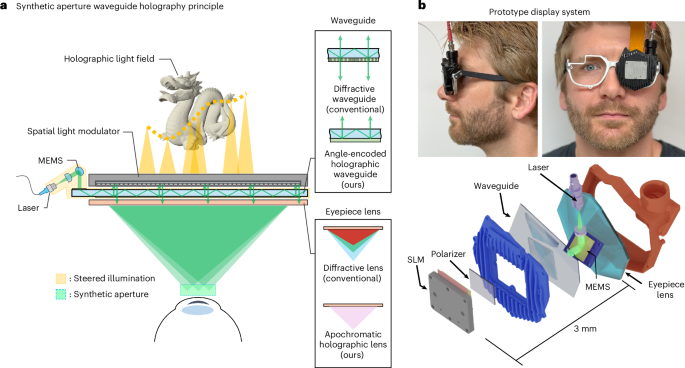

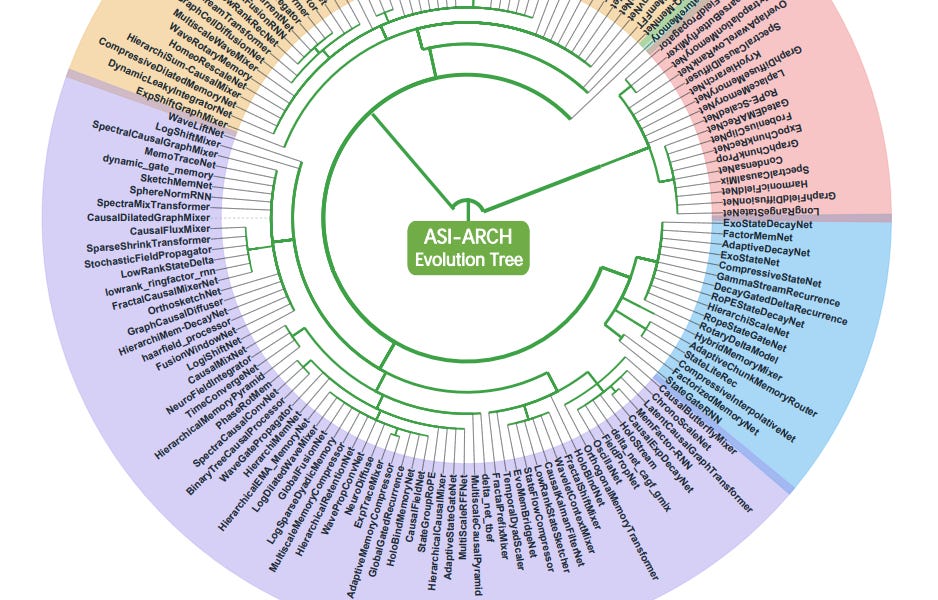

Over 20,000 GPU hours, ASI-ARCH conducted 1,773 autonomous experiments and discovered 106 novel, state-of-the-art (SOTA) linear attention architectures that outperform human-designed baselines such as Mamba2. The headline result is what the authors call the first empirical scaling law for scientific discovery (Figure 1): a strong linear relationship between the computational budget and the number of SOTA architectures found. This suggests that AI research, previously constrained by human cognitive capacity, could become a computation-scalable process, a potential paradigm shift in how AI itself is advanced.
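The reported numbers imply a few simple ratios, computed in the sketch below. It also includes a plain ordinary least-squares helper (`fit_line`, a hypothetical name) that could fit a line to (compute, cumulative SOTA count) pairs if one read them off Figure 1. Because the budget is stated as "over 20,000" GPU hours, treat the per-experiment cost as a lower bound and the discoveries per 1,000 GPU hours as an upper bound; these are crude averages, not the paper's fitted scaling law.

```python
# Figures reported in the summary above.
GPU_HOURS = 20_000   # stated as "over 20,000", so this is a lower bound
EXPERIMENTS = 1_773
SOTA_FOUND = 106

hours_per_experiment = GPU_HOURS / EXPERIMENTS          # lower bound on cost per run
sota_hit_rate = SOTA_FOUND / EXPERIMENTS                # share of runs that set a new SOTA
sota_per_1k_gpu_hours = SOTA_FOUND / GPU_HOURS * 1000   # upper bound on discovery rate


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


print(f"{hours_per_experiment:.1f} GPU hours per experiment")   # 11.3
print(f"{sota_hit_rate:.1%} of experiments were SOTA")          # 6.0%
print(f"{sota_per_1k_gpu_hours:.1f} SOTA per 1,000 GPU hours")  # 5.3
```

A linear fit with a free intercept is used here rather than a simple ratio because a scaling law of the form the text describes need not pass through the origin.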

While the capabilities of AI systems have grown exponentially, the pace of AI research itself has remained stubbornly linear, limited by human creativity, intuition, and bandwidth. The paper tackles this bottleneck head-on in neural architecture discovery, a critical domain in which each major leap in AI, from CNNs (LeCun et al., 1995) to Transformers (Vaswani et al., 2017), has been driven by an architectural breakthrough. The authors introduce ASI-ARCH, a system designed not just to optimize within known paradigms but to innovate autonomously, opening a path for AI to accelerate its own evolution.