How I run LLMs locally - Abishek Muthian

Before I begin, I would like to credit the thousands or millions of unknown artists, coders and writers on whose work Large Language Models (LLMs) are trained, often without due credit or compensation.

I have a laptop running Linux with a Core i9 CPU (32 threads), a 4090 GPU (16 GB VRAM) and 96 GB of RAM. Models that fit within the VRAM generate more tokens/second; larger models are partly offloaded to system RAM (dGPU offloading), which lowers tokens/second. I will talk about models in a section below.

It's not necessary to have such a beefy computer to run LLMs locally; smaller models run fine on older GPUs or on a CPU, albeit slowly and with more hallucinations.
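To make the "fits within the VRAM" point a bit more concrete, here is a rough back-of-the-envelope sketch. It assumes the weights dominate memory use (parameter count times bits per weight) and adds a flat 20% allowance for context cache and runtime buffers. That allowance and the function names are my own illustrative assumptions, not measured values, so treat the result as a ballpark only.

```python
GIB = 1024 ** 3  # bytes in one GiB


def estimate_model_gib(params_billion: float, bits_per_weight: float,
                       overhead: float = 1.2) -> float:
    """Rough memory estimate for a model, in GiB.

    overhead is an assumed fudge factor for context cache and buffers;
    real usage depends on the runtime and the context length.
    """
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / GIB


def fits_in_vram(params_billion: float, bits_per_weight: float,
                 vram_gib: float, overhead: float = 1.2) -> bool:
    """True if the rough estimate is within the given VRAM budget."""
    return estimate_model_gib(params_billion, bits_per_weight, overhead) <= vram_gib
```

With these assumptions, a 7B model quantized to 4 bits lands well under 16 GiB, while a 70B model at 4 bits does not and would spill over into system RAM.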

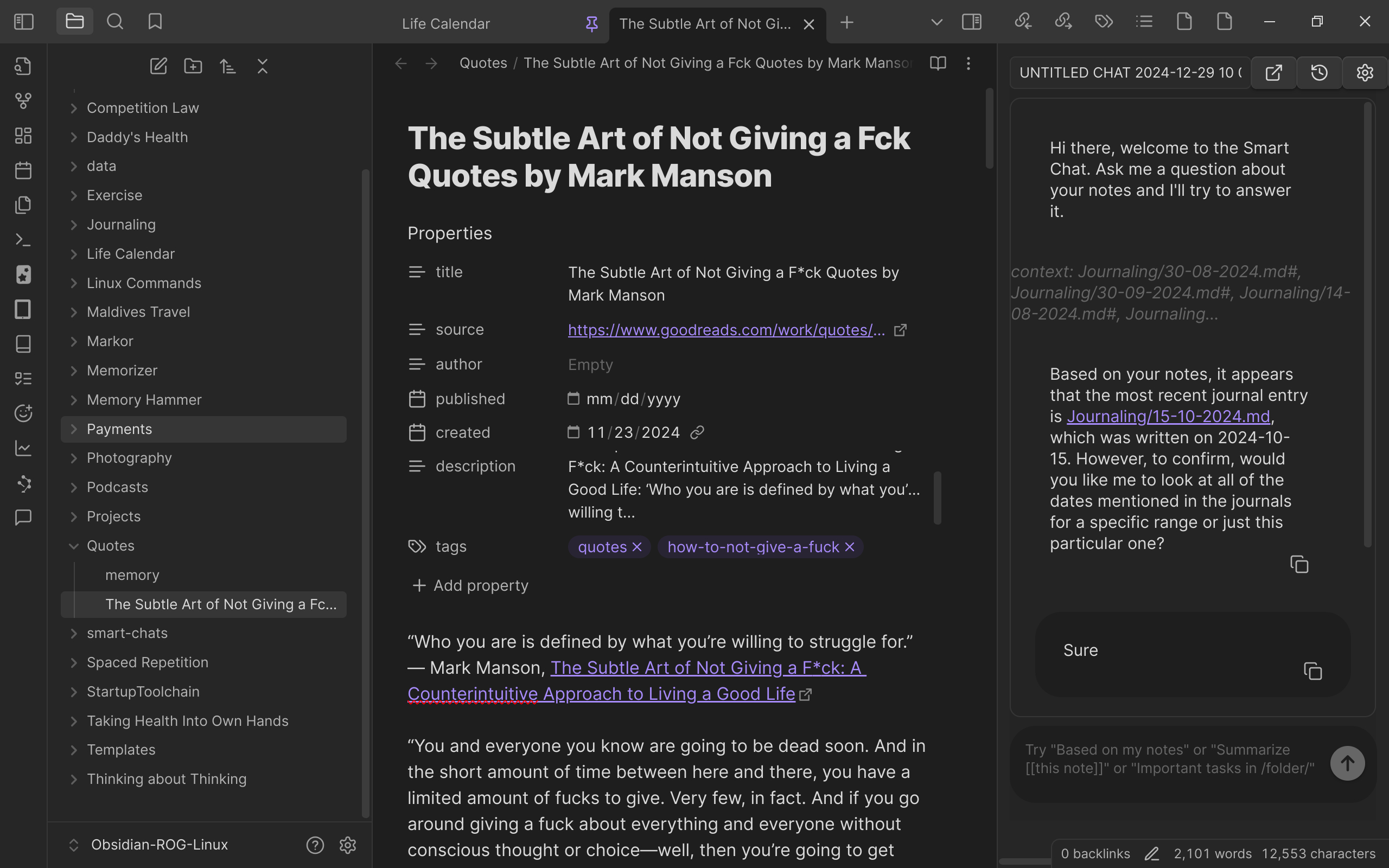

Open WebUI[6] is a frontend that offers a familiar chat interface for text and image input; it communicates with the Ollama back-end and streams the output back to the user.
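To illustrate what "streams the output back" means, here is a minimal sketch of a client reading a streamed reply from Ollama's HTTP API. This is not Open WebUI's own code. It assumes Ollama is listening on its default address (`http://localhost:11434`) and uses the `/api/generate` endpoint, which returns one JSON object per line with a `response` fragment and a `done` flag. The helper names are hypothetical.

```python
import json
import urllib.request
from typing import Iterable, Iterator

# Assumed default Ollama address; change it if your setup differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def iter_response_text(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from newline-delimited JSON chunks."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        if chunk.get("response"):
            yield chunk["response"]
        if chunk.get("done"):
            break  # the final chunk marks the end of the stream


def stream_generate(model: str, prompt: str, url: str = OLLAMA_URL) -> Iterator[str]:
    """POST a prompt to Ollama and yield the reply as it arrives."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": True}).encode("utf-8")
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        # The HTTP response object iterates line by line, one JSON chunk each.
        yield from iter_response_text(response)
```

Looping over `stream_generate("<model-name>", "Why is the sky blue?")` and printing each fragment with `end=""` and `flush=True` gives the familiar word-by-word output; the model name is a placeholder for whichever model you have pulled.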

llamafile[7] is a single executable file that bundles an LLM. It’s probably the easiest way to get started with local LLMs, but I’ve had issues with dGPU offloading in llamafile[8].