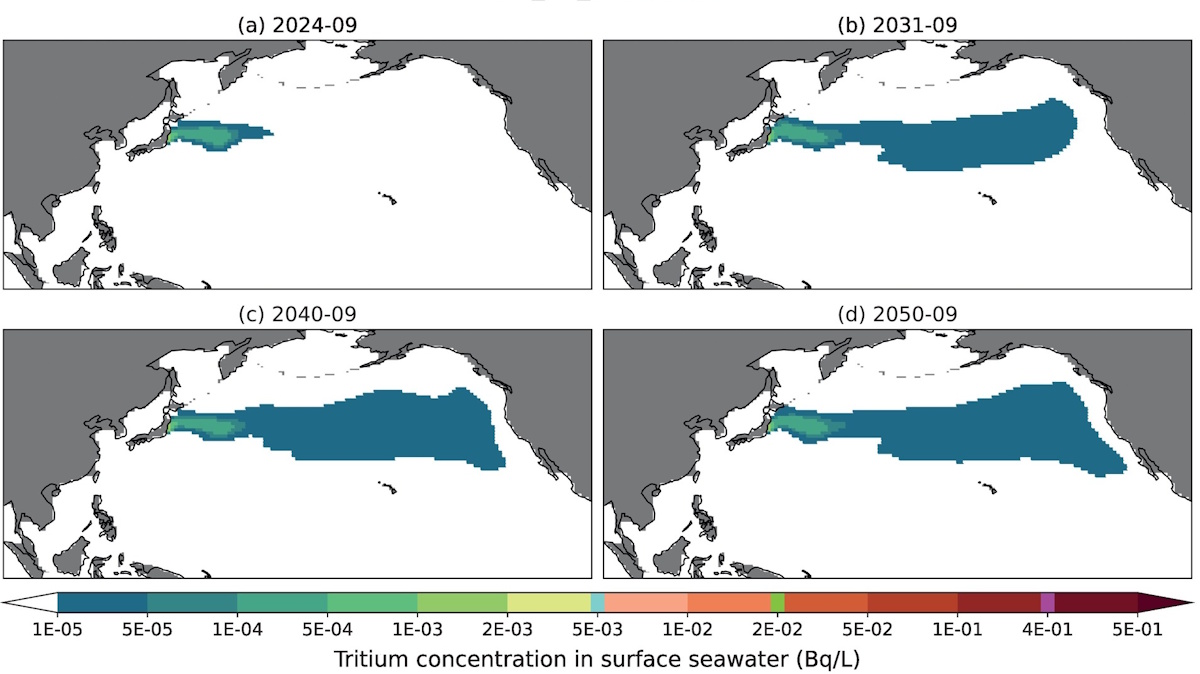

Iceberg I/O performance comparison at scale (Bodo vs PyIceberg, Spark, Daft)

Efficient, scalable Iceberg I/O is crucial for Python data workloads, yet scaling Python to large datasets has traditionally involved significant trade-offs. PyIceberg offers a Pythonic experience but struggles to scale beyond a single node. Spark provides the necessary scalability but lacks native Python ergonomics. More recently, tools like Daft have tried to bridge this gap, but they introduce new APIs instead of Pandas, and their scaling and performance characteristics are not yet clear.

The Bodo DataFrame library addresses these trade-offs by acting as a drop-in replacement for Pandas, scaling native Python code across multiple cores and nodes using high-performance computing (HPC) techniques. This approach requires no JVM dependencies and no changes in syntax, so code stays both efficient and ergonomic.

In this post, we benchmark Iceberg I/O performance across several Python-compatible engines (Bodo, Spark, PyIceberg, and Daft), focusing on reading and writing large Iceberg tables stored in Amazon S3 Tables. Our findings show that Bodo outperforms Spark by up to 3x, while PyIceberg and Daft could not complete the benchmark.