The Cache is Full

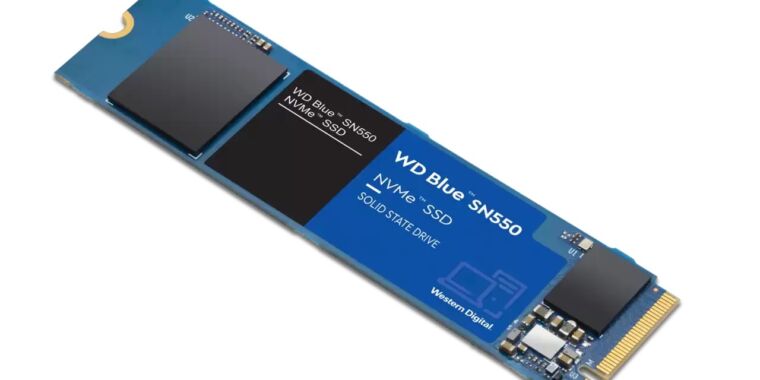

In computing, caching is all over the place. It is found in hardware (CPUs and GPUs), in operating systems' virtual memory, in the buffer pool managers inside databases, on the Web (Content Delivery Networks, browsers, DNS servers, ...), and also inside the applications we build. In this post, we take an abstract look at what a cache is, at the replacement problem that arises from the nature of a cache, and at some algorithms for implementing cache replacement policies.

Regardless of the context in which caching is applied, the sole reason for its existence is to make a system perform better than it would without the cache. A system works "fine" without caching; applying the technique simply makes it perform better. And, in the context of caching, performing better means accessing the needed data faster.

To achieve this performance gain, part of the data is replicated to a second storage: the cache. Instead of accessing the data through the primary storage, the system accesses it through the cache, and access through the cache is supposed to be faster. The gain from accessing this different storage may arise for multiple reasons. It can be technological: for example, the data in the cache is in memory while the primary storage uses a disk. It can come from avoiding overheads: for example, the primary storage can be a relational database, and you add a cache to avoid the overhead of connecting to the database, parsing the SQL query, accessing the disk, and transforming the results into the format that suits the client application. It can also be geographical, as in the case of Content Delivery Networks, which keep copies of the data closer to the clients that request it.
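The idea of accessing data through the cache rather than through the primary storage can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `SlowStore` is a hypothetical stand-in for the primary storage (a database, a disk, a remote server), and `ReadThroughCache` is a hypothetical name for a cache that keeps a copy of every value it has fetched.

```python
class SlowStore:
    """Hypothetical primary storage; counts how many times it is read."""

    def __init__(self, data):
        self._data = dict(data)
        self.reads = 0

    def get(self, key):
        self.reads += 1  # each call stands for an expensive access
        return self._data[key]


class ReadThroughCache:
    """Serves repeated reads from a local copy instead of the primary storage."""

    def __init__(self, store):
        self._store = store
        self._entries = {}  # replicated part of the data
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._entries:
            self.hits += 1  # fast path: the data is already replicated
            return self._entries[key]
        self.misses += 1  # slow path: fetch from the primary storage
        value = self._store.get(key)
        self._entries[key] = value  # keep a copy for later accesses
        return value


store = SlowStore({"user:1": "Ada", "user:2": "Linus"})
cache = ReadThroughCache(store)
for key in ["user:1", "user:1", "user:2", "user:1"]:
    cache.get(key)

print(cache.hits, cache.misses, store.reads)  # prints: 2 2 2
```

Four reads reach the cache, but only two reach the primary storage. Note that this sketch keeps every entry forever; it assumes unlimited space, so it never has to decide what to discard, which is exactly the decision a replacement policy has to make.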