
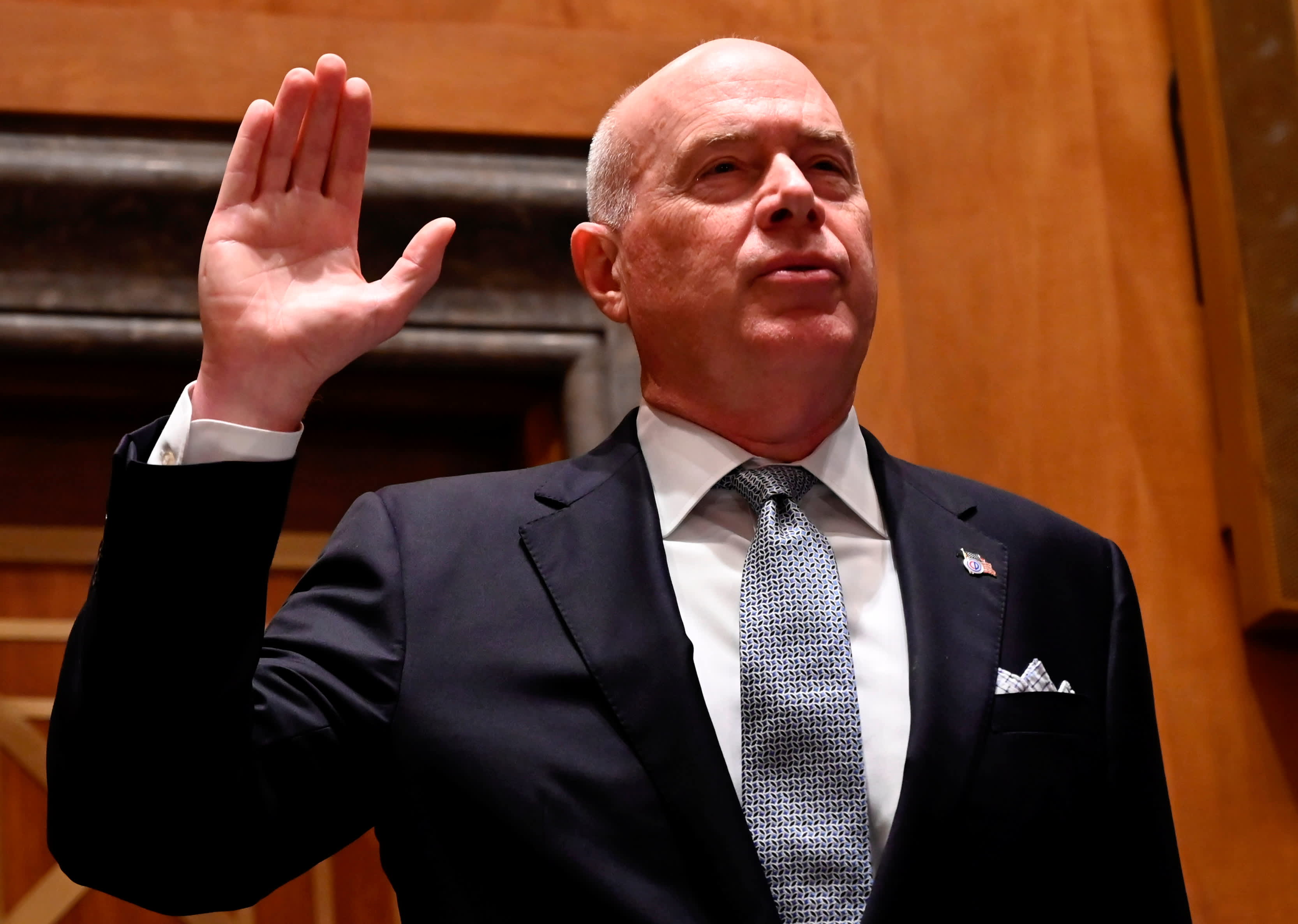

Lethal Injection: How We Hacked Microsoft's Healthcare Chat Bot

We have discovered multiple security vulnerabilities in the Azure Health Bot service, a patient-facing chatbot that handles medical information. The vulnerabilities, if exploited, could allow access to sensitive infrastructure and confidential medical data.

All of the vulnerabilities were fixed quickly after we reported them to Microsoft, and Microsoft has not detected any sign that they were abused. We want to thank the people at Microsoft for their cooperation in remediating these issues: Dhawal, Kirupa, Gaurav, Madeline, and the engineering team behind the service.

The first vulnerability allowed access to authentication credentials belonging to customers. As the research continued, we found further vulnerabilities that allowed us to take control of one of the service's backend servers. That server is shared across multiple customers and has access to several databases containing information that belongs to multiple tenants.

The research began at the Azure Health Bot management portal. Skimming through the available features, we saw that a bot can be connected to remote data sources and given authentication details for them.
