Cloud Provider Spot Navigation

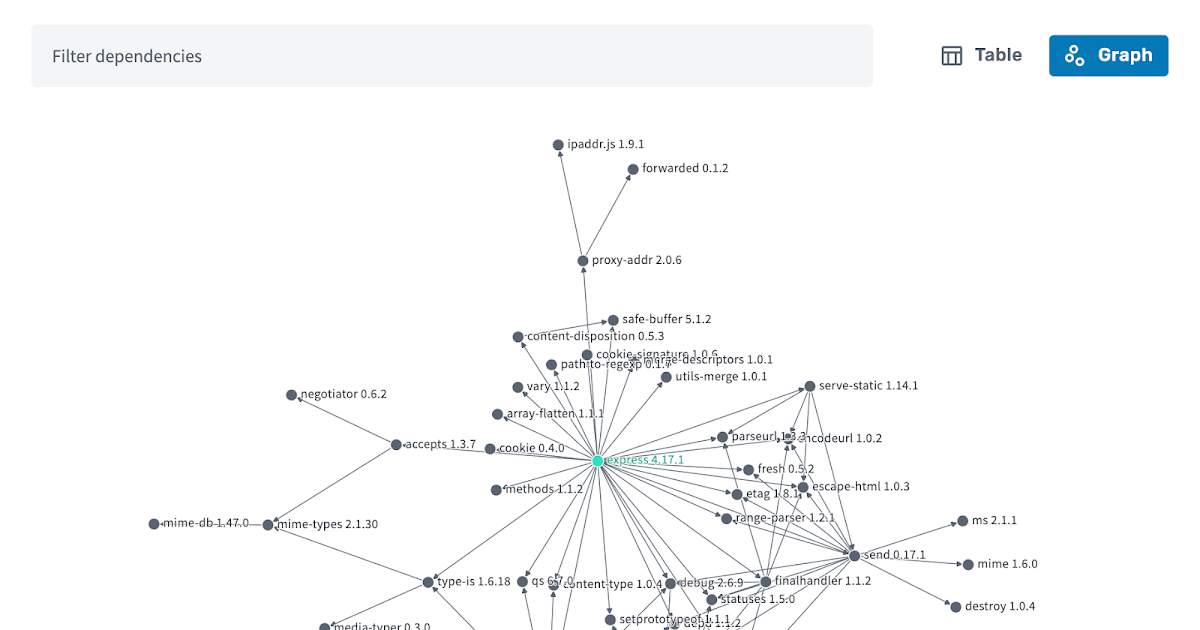

Open Scheduler identifies the cheapest spot GPU offerings from the largest cloud providers and rents them on your behalf, keeping compute surcharges out of the equation.
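To make the idea of "cheapest spot offering" concrete, here is a minimal sketch of how offers could be compared by price per GPU. It is not Open Scheduler's actual implementation: the `SpotOffer` type, the `cheapest_offer` helper, and every provider name, region, and price below are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class SpotOffer:
    """One spot VM offering (hypothetical shape, for illustration only)."""
    provider: str
    region: str
    gpu: str
    gpu_count: int
    hourly_usd: float


def cheapest_offer(offers: Iterable[SpotOffer], gpu: str,
                   min_gpus: int = 1) -> Optional[SpotOffer]:
    """Return the offer with the lowest price per GPU, or None if nothing matches."""
    candidates = [o for o in offers if o.gpu == gpu and o.gpu_count >= min_gpus]
    if not candidates:
        return None
    # Compare on price per GPU so that differently sized VMs are comparable.
    return min(candidates, key=lambda o: o.hourly_usd / o.gpu_count)


# Placeholder data; these are NOT real providers or prices.
OFFERS = [
    SpotOffer("provider-a", "region-1", "A100", 8, 12.00),
    SpotOffer("provider-b", "region-2", "A100", 4, 5.20),
    SpotOffer("provider-c", "region-3", "H100", 8, 24.00),
    SpotOffer("provider-a", "region-4", "A100", 1, 1.40),
]

best = cheapest_offer(OFFERS, gpu="A100")
```

Ranking by price per GPU rather than by raw VM price is a design choice of this sketch; a real scheduler would likely also weigh interruption rates and available quota.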

Effortlessly manage and scale your distributed inference clusters. Open Scheduler automatically load-balances your clusters and provides secure, streamlined entry points so they can scale smoothly.

Models often demand specific GPU setups, and a poorly chosen setup is either needlessly expensive or too small for good performance. Identifying the right requirements can be a tedious task. With our platform, you can bring your own configurations or use our carefully curated and tested setups. Start running fine-tuned models or new releases without hassle.

Managing which subscriptions or projects are allowed to rent which VMs in which regions of the world can be hard. Gain transparency by scanning the current quotas of your projects.
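As an illustration of what a quota scan can answer, the sketch below lists the project and region pairs that still have enough headroom for a given VM family. The `Quota` record, the `regions_with_headroom` helper, and all sample values are assumptions made for this example; real quota data would come from each cloud provider's own API.

```python
from typing import Iterable, List, NamedTuple, Tuple


class Quota(NamedTuple):
    """A quota entry for one project, region, and VM family (hypothetical shape)."""
    project: str
    region: str
    vm_family: str
    limit: int
    used: int


def regions_with_headroom(quotas: Iterable[Quota], vm_family: str,
                          needed: int) -> List[Tuple[str, str, int]]:
    """Return (project, region, free) entries that can still fit `needed` units."""
    return sorted(
        (q.project, q.region, q.limit - q.used)
        for q in quotas
        if q.vm_family == vm_family and q.limit - q.used >= needed
    )


# Placeholder quota data, not taken from any real account.
QUOTAS = [
    Quota("project-x", "region-1", "gpu-a100", limit=8, used=8),
    Quota("project-x", "region-2", "gpu-a100", limit=16, used=4),
    Quota("project-y", "region-1", "gpu-a100", limit=4, used=0),
    Quota("project-y", "region-3", "gpu-h100", limit=8, used=0),
]

usable = regions_with_headroom(QUOTAS, vm_family="gpu-a100", needed=4)
```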

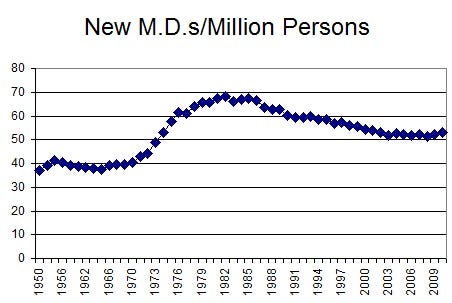

Spin up on-demand inference clusters, make efficient use of the rented compute, and bring down inference pricing yourself. Open Scheduler helps you keep track of spending and, most importantly, token throughput and inference rates.
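The link between spending and token throughput is simple arithmetic: the cost per token is the hourly price divided by the tokens generated per hour. The helper below is a minimal sketch of that calculation; the function name and the example figures are hypothetical and do not come from Open Scheduler.

```python
def usd_per_million_tokens(hourly_usd: float, tokens_per_second: float) -> float:
    """Effective price of one million generated tokens on a rented cluster."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_usd / tokens_per_hour * 1_000_000


# Hypothetical example: a cluster costing 3.60 USD/hour that sustains
# 2,000 tokens/second works out to roughly 0.50 USD per million tokens.
price = usd_per_million_tokens(hourly_usd=3.60, tokens_per_second=2000)
```

Doubling the sustained throughput at the same hourly price halves the price per token, which is why utilization matters as much as the rental rate.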