Building an open data pipeline in 2024 - by Dan Goldin

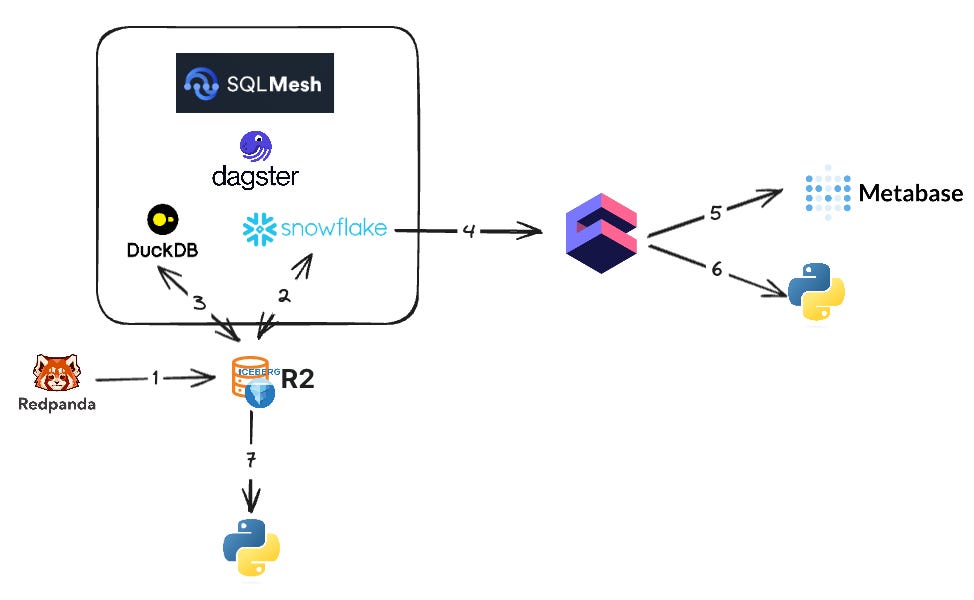

In a previous post on the commoditization of SQL, I touched on the idea of building a data stack that takes advantage of it. One key element of this architecture is using Iceberg as the core data storage layer, which leaves you free to choose the most suitable compute environment for each use case. For instance, if you’re powering BI reporting, you’ll often want to prioritize speed so your customers can quickly get the data they need. Conversely, cost may be the biggest factor if you’re running a batch data pipeline job. And if you have external data customers, you may want to prioritize availability over everything else. While there is no one-size-fits-all solution, understanding your use cases and requirements lets you tailor your approach accordingly.
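To make the idea a bit more concrete, here is a minimal Python sketch of one shared storage layer with the compute choice driven by what each workload cares about most. Everything in it is an assumption for illustration: the `Priority` enum, the `Workload` class, the `pick_engine` helper, and the engine labels are hypothetical placeholders, not real products or recommendations.

```python
from dataclasses import dataclass
from enum import Enum


class Priority(Enum):
    """The main thing a workload optimizes for (illustrative only)."""
    SPEED = "speed"
    COST = "cost"
    AVAILABILITY = "availability"


@dataclass(frozen=True)
class Workload:
    name: str
    priority: Priority


# Hypothetical engine labels. Every engine is assumed to read the same
# Iceberg tables, so only the compute choice varies per workload.
ENGINE_BY_PRIORITY = {
    Priority.SPEED: "low-latency-query-engine",
    Priority.COST: "cheap-batch-engine",
    Priority.AVAILABILITY: "highly-available-serving-engine",
}


def pick_engine(workload: Workload) -> str:
    """Return the engine label matching the workload's top priority."""
    return ENGINE_BY_PRIORITY[workload.priority]


workloads = [
    Workload("bi_reporting", Priority.SPEED),
    Workload("nightly_batch", Priority.COST),
    Workload("external_customers", Priority.AVAILABILITY),
]

for w in workloads:
    print(f"{w.name}: {pick_engine(w)}")
```

A real setup would weigh several factors at once rather than a single priority, but the shape is the same: the data stays put and the compute is swapped per use case.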

There are a few dimensions here, and I want to go through each of them separately, although in practice the right choice will come from a combination of factors.