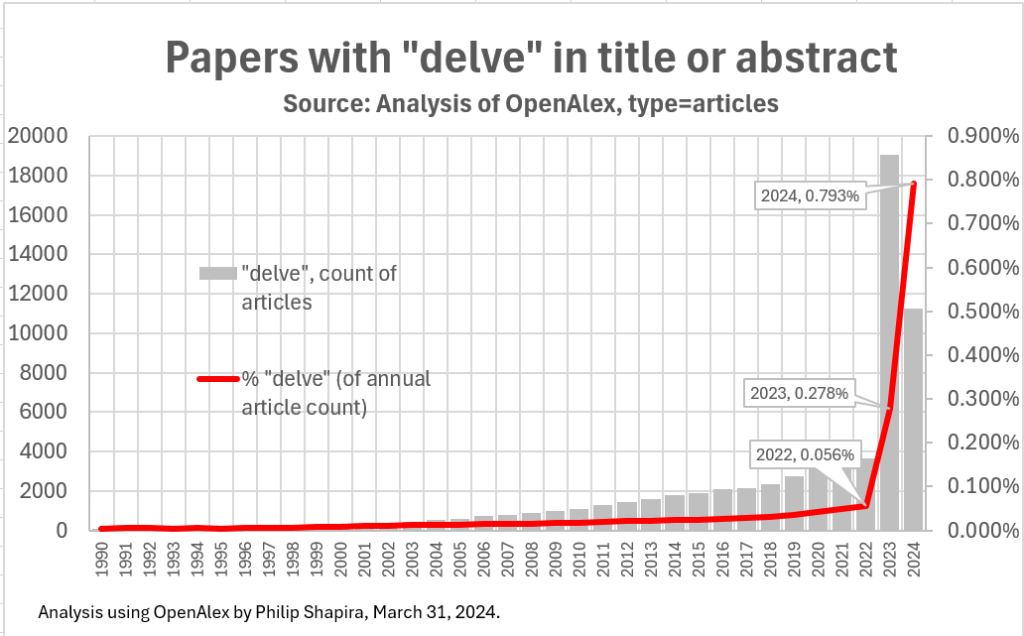

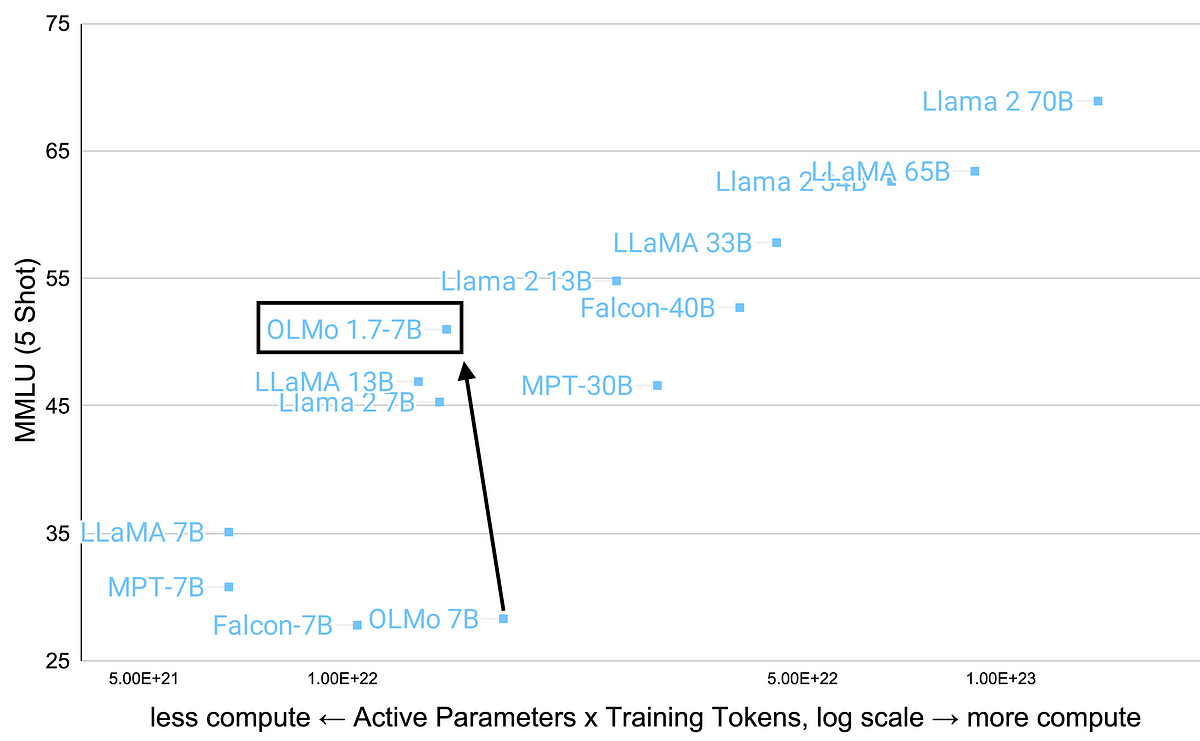

OLMo 1.7–7B: A 24-point improvement on MMLU

Today, we’ve released an updated version of our 7 billion parameter Open Language Model, OLMo 1.7–7B. This model scores 52 on MMLU, sitting above Llama 2–7B and approaching Llama 2–13B, and outperforms Llama 2–13B on GSM8K (see below).

OLMo 1.7–7B, created on the path towards our upcoming 70 billion parameter model, has a longer context length, up from 2048 to 4096 tokens. Its higher benchmark performance comes from a combination of improved data quality, a new two-stage training procedure, and architectural improvements. Get the model on Hugging Face here, licensed under Apache 2.0. The training data, Dolma 1.7, is licensed under ODC-BY, as recently announced.

Since the release of OLMo 1.0, we’ve been focusing on improving a few key evaluation metrics, such as MMLU. Below is a plot showing the approximate compute used to train some language models with open weights, calculated as the product of model parameter count and training dataset size in tokens. We can see that OLMo 1.7–7B delivers substantially more benchmark performance per unit of training compute than peers such as the Llama suite and Mosaic Pretrained Transformer (MPT).
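The compute proxy described above (parameter count multiplied by training tokens) can be sketched in a few lines of Python. This is only an illustration: the function name `approx_training_compute` and the two placeholder models are hypothetical, and the numbers are made up for the example rather than taken from the plot.

```python
def approx_training_compute(num_parameters: float, num_tokens: float) -> float:
    """Approximate training compute as parameter count x training tokens.

    This is the simple proxy described in the text, expressed in
    "parameter-tokens"; it is not a FLOP count.
    """
    if num_parameters <= 0 or num_tokens <= 0:
        raise ValueError("parameter and token counts must be positive")
    return num_parameters * num_tokens


# Hypothetical placeholder models: (parameter count, training tokens).
# These values are illustrative assumptions, NOT figures from this post.
hypothetical_models = {
    "model-a": (7e9, 1e12),
    "model-b": (13e9, 2e12),
}

for name, (params, tokens) in hypothetical_models.items():
    compute = approx_training_compute(params, tokens)
    print(f"{name}: {compute:.2e} parameter-tokens")
```

Plotting a benchmark score such as MMLU against this quantity for each model gives the kind of comparison the plot is meant to show: models further up and to the left get more performance from less training compute.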

Alongside OLMo 1.7–7B, we are releasing an updated version of our dataset, Dolma 1.7, in which we focused on (a) exploring more diverse sources of data and (b) filtering web sources more precisely. The sources are included below, sorted by number of tokens.
