Space-efficient indexing for immutable log data

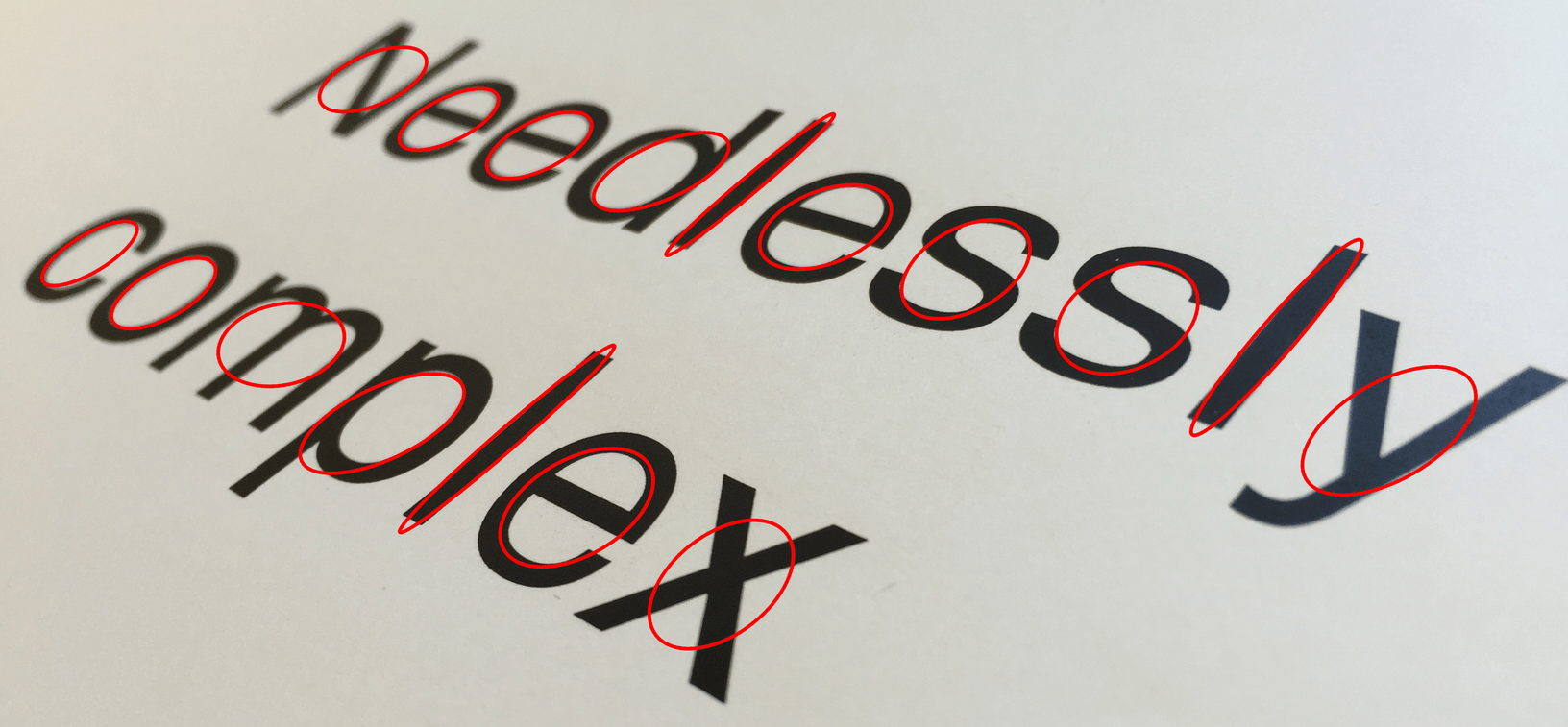

This post describes our approach to implementing a disk-backed hashmap in Rust for indexing high-cardinality predicates in our diagnostics product, Seq. If you've ever wondered how a hashmap works, or what makes a disk-backed datastructure different from an in-memory one, you might find it interesting.

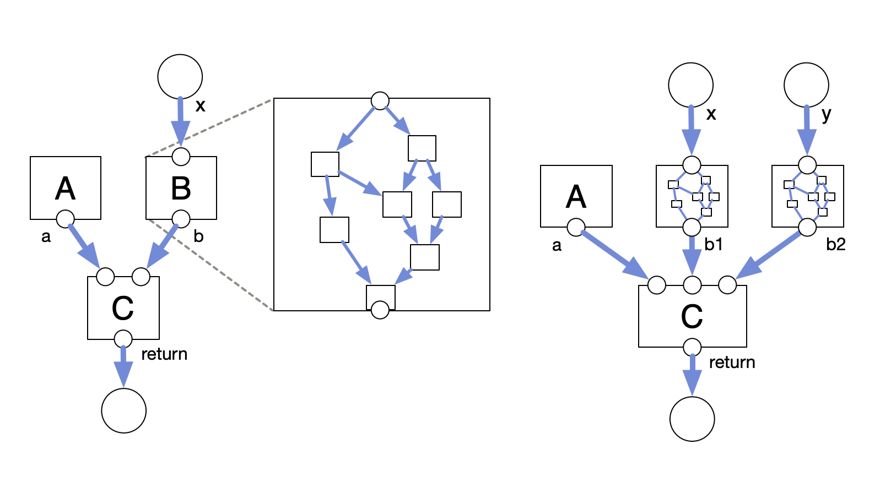

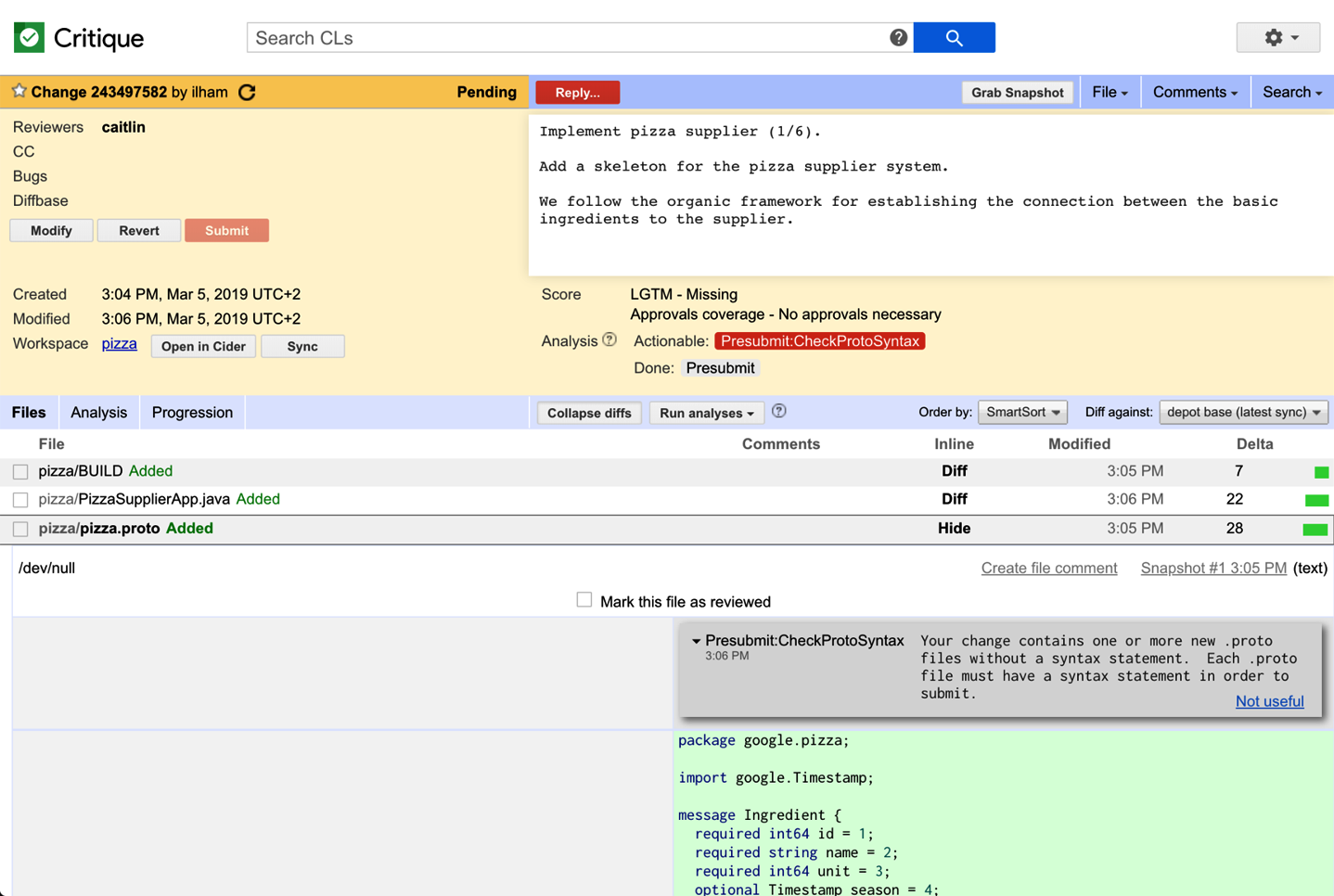

Seq is a database for diagnostic events. It's designed to store large volumes of immutable event records with limited ingestion overhead. Records are initially ingested into unordered write-ahead-logs based on their timestamp. After some grace period has passed, multiple logs are sorted and coalesced into a single file covering some larger period of time. The start of every 4Kb page in that coalesced file is marked with a header pointing back to the start of the record that crosses its boundary. This scheme makes it possible to pick any random page in the file and hop forwards or backwards to the nearest record.

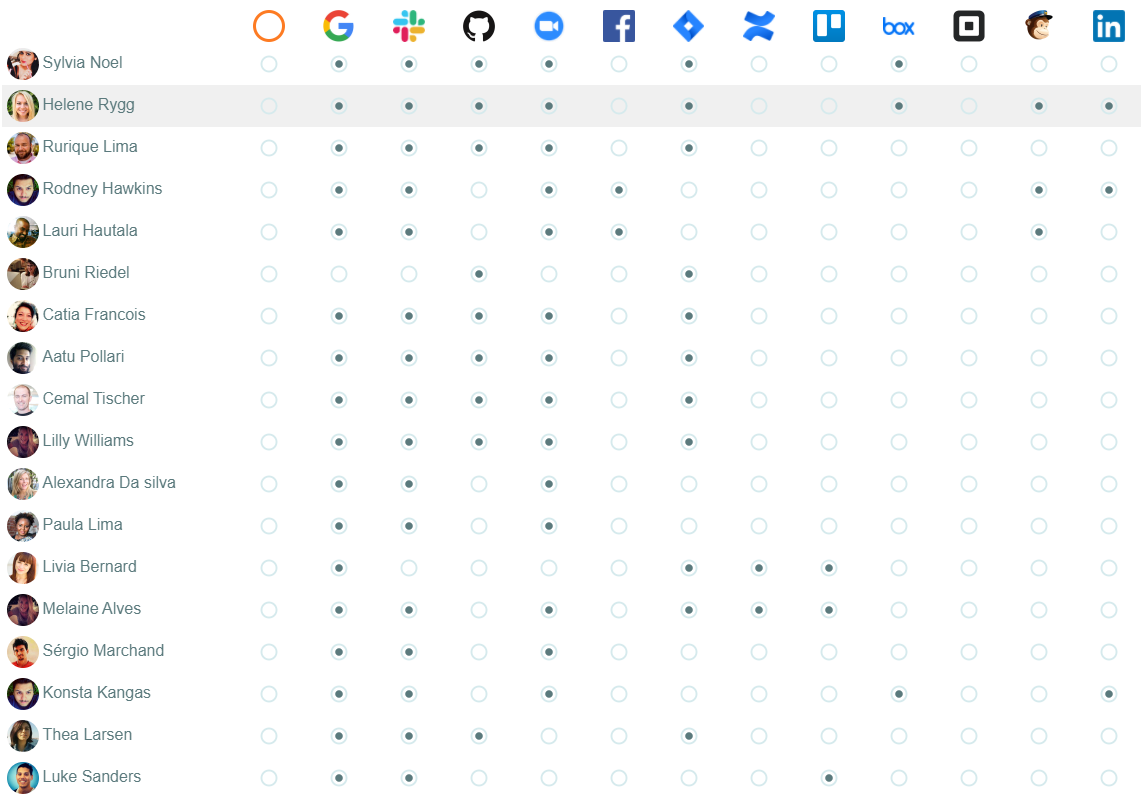

Like many other database systems, Seq maintains indexes to reduce the IO needed to serve a given query. Indexes are computed in the background after write-ahead-logs are coalesced. They store the set of pages in the coalesced file that contain the start of records matching some predicate in the query. Indexes can be treated like a bitmap, with bitwise union and intersection combining multiple indexes together. This page-based indexing scheme trades some query time for storage space. Diagnostic data is already high-volume, so we want to keep Seq's indexing storage requirements at just a few percent of the underlying data. Working at the page-level instead of the record-level means they store less data, but produce false-positives during querying. This is accounted for upstream by re-evaluating the query over all records in the read pages.