Confidential multi-stakeholder AI

To run AI workloads at scale, enterprises increasingly rely on the public cloud. In this context, data security and privacy become key concerns: today, companies must trust the cloud service provider (CSP) with their data and AI-related intellectual property.

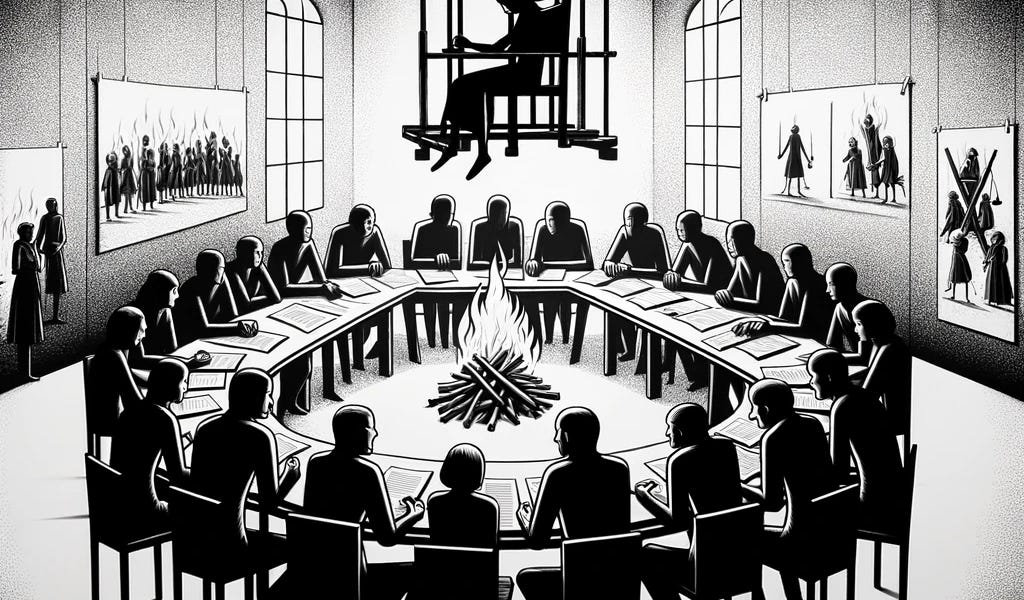

There are other parties to trust as well, because AI services typically involve several stakeholders. Consider, for example, the schematic AI pipeline below: the AI model, the training data, and the code for both training and inference are often provided by different stakeholders. All of these stakeholders must trust each other and the CSP with their valuable (and possibly regulated) data or intellectual property.

Confidential computing is a key technology for addressing these fundamental problems, as it enables always-encrypted and verifiable processing of data. With it, businesses can collaborate and rely on the public cloud without losing control of their data or intellectual property. For more information, refer to our post “Confidential Computing — Basics, Benefits and Use Cases”.

In this post, we will walk through the process of setting up a confidential AI inference service. We’ll showcase a multi-stakeholder scenario involving the CSP, the model owner, the inference service provider, and the users.