CLIP: Death of the Class Map

Earlier this year, OpenAI released CLIP and showed that scaling a simple objective, aligning images with their captions, on roughly 400 million image-text pairs collected from the internet enables impressive zero-shot transfer to a wide range of downstream tasks such as classification, OCR, and video action recognition.
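To make "image-caption alignment objective" concrete, here is a minimal NumPy sketch of a symmetric contrastive loss of the kind described in the CLIP paper. It assumes the image and text encoders have already produced one embedding per image and per caption in a batch, and the function names are illustrative rather than taken from any CLIP codebase. It also uses a fixed temperature of 0.07 for simplicity, whereas CLIP learns this value during training. Each image should score highest against its own caption (the diagonal of the similarity matrix), and vice versa.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-8):
    # Project embeddings onto the unit sphere so dot products are cosine similarities.
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def log_softmax(logits, axis):
    # Numerically stable log-softmax along the given axis.
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss over a batch of N matched (image, caption) pairs.

    Assumption: row i of image_emb and row i of text_emb come from the same pair.
    The fixed temperature is a simplification; CLIP learns it during training.
    """
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logits = img @ txt.T / temperature  # (N, N) scaled cosine similarities
    idx = np.arange(logits.shape[0])
    # Image -> text: each row should pick out its own caption.
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()
    # Text -> image: each column should pick out its own image.
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()
    return (loss_i2t + loss_t2i) / 2


rng = np.random.default_rng(0)
emb = rng.normal(size=(8, 64))
print(clip_contrastive_loss(emb, emb))                       # matched pairs: low loss
print(clip_contrastive_loss(emb, np.roll(emb, 1, axis=0)))   # mismatched pairs: high loss
```

Because nothing in this loss refers to a fixed list of classes, any new category can later be scored by embedding a caption that describes it, which is what makes the zero-shot transfer possible.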

Unfortunately, CLIP was largely overshadowed by the release of its sibling, DALL·E, which I think is a tragedy given how seismic CLIP’s repercussions will be for the field, especially for computer vision. In fact, when we look back in a few years, I think CLIP will be synonymous with the death of the class map: the fixed, predefined set of labels that a vision model is trained to predict and cannot go beyond without retraining. Ironically, you could say DALL·E was a red herring, since CLIP has since been creatively paired with state-of-the-art generative models to produce some really impressive text-conditioned image generators.

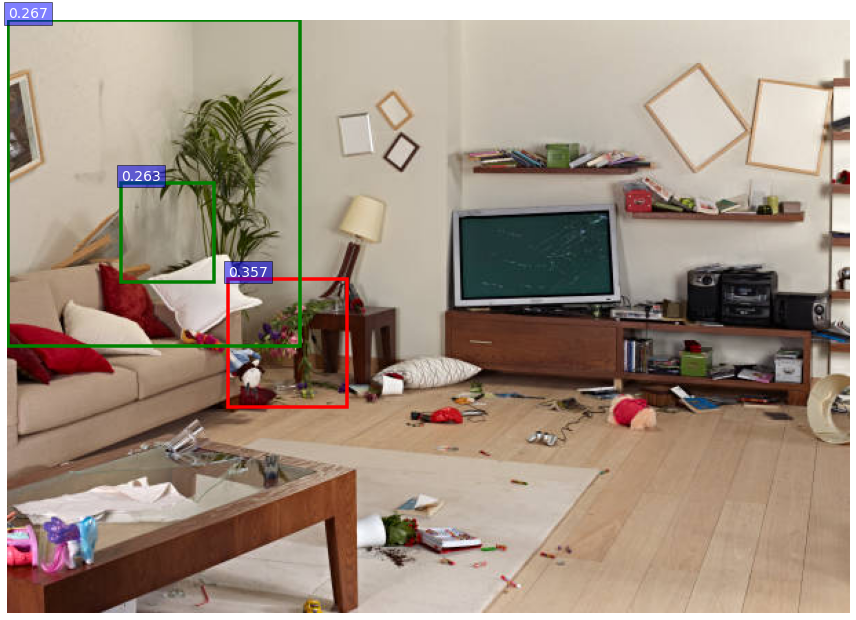

In this post, I want to highlight the versatility of CLIP by demonstrating its zero-shot capabilities on various tasks, such as reCAPTCHA solving and text-prompted detection. I’ve provided a Colab notebook with every example so you can feed in your own data and experiment with CLIP to your heart’s content. If you come up with other creative ways of using CLIP, feel free to submit a PR to the ever-growing playground on GitHub!