Benchmarks in CI: Escaping the Cloud Chaos

Creating a performance gate in CI that prevents significant performance regressions from being deployed has been a long-standing goal of many software teams. But measuring performance on hosted CI runners is particularly challenging, mostly because of noisy neighbors leaking through virtualization layers.

Still, it's worth the effort. Performance regressions are harder to catch and more expensive to fix the longer they go unnoticed. Mostly because:

Let's measure this noise by using various benchmarking suites from popular performance-focused open-source projects: next.js/turbopack by Vercel, ruff and uv by Astral, and reflex by Reflex.

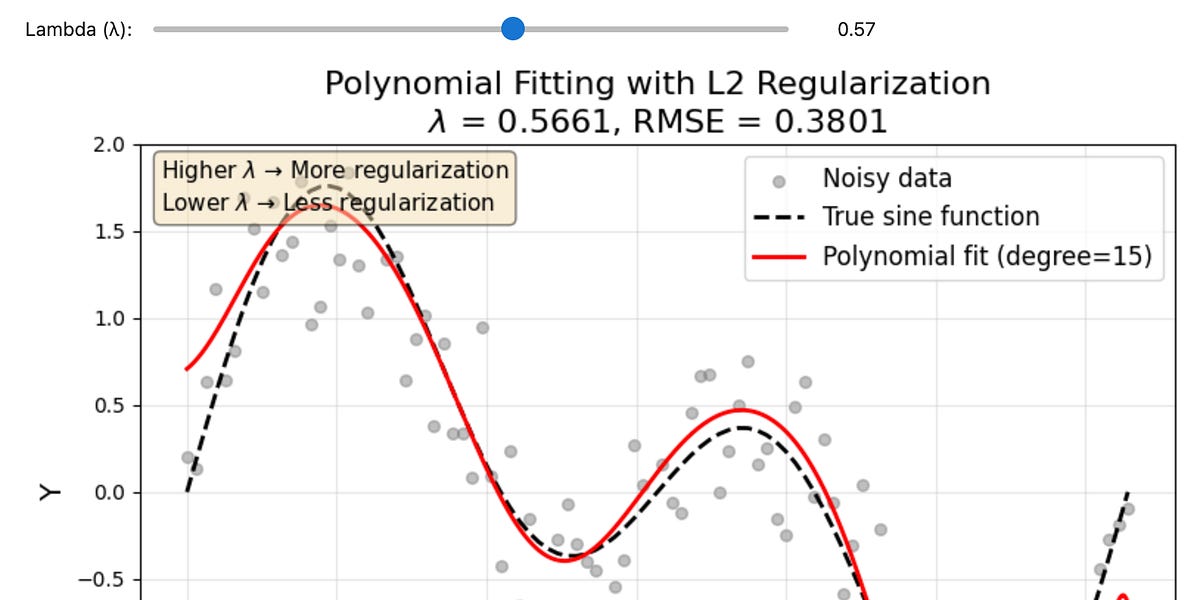

To measure consistency, we'll use the coefficient of variation, which is the standard deviation divided by the mean. This metric is useful because it expresses dispersion relative to the size of the measurement, so results from benchmarks with very different durations can be compared directly.
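As a minimal sketch of that definition, the snippet below computes the coefficient of variation for a list of timings. The function name, the sample values, and the choice of the sample standard deviation (n - 1 denominator) are assumptions made for illustration; the article does not specify which estimator is used.

```python
import statistics


def coefficient_of_variation(samples):
    """Return the standard deviation divided by the mean, as a fraction."""
    mean = statistics.fmean(samples)
    if mean == 0:
        raise ValueError("coefficient of variation is undefined for a zero mean")
    # Sample standard deviation (n - 1 denominator). This is an assumption:
    # the population form (statistics.pstdev) would also fit the definition.
    return statistics.stdev(samples) / mean


# Hypothetical timings in milliseconds, not measured data.
timings_ms = [100.0, 102.0, 98.0, 101.0, 99.0]
print(f"CV = {coefficient_of_variation(timings_ms):.2%}")  # prints "CV = 1.58%"
```

Because the result is a ratio, a 1.58% spread means the same thing for a 100 ms benchmark as for a 10 s one.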

Each run is executed on a different machine, simulating real CI conditions. Within each run, each result is the outcome of multiple executions of the same benchmark, performed by the benchmarking framework in use.
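One way to read this setup is that each run contributes a single figure per benchmark, and dispersion is then measured across runs. The sketch below follows that reading, which is an assumption about the methodology rather than something the article states. The benchmark names, the `runs` structure, and the numbers are all hypothetical.

```python
import statistics
from collections import defaultdict

# Hypothetical results: one dict per CI run (each on a different machine).
# Each value stands for the single figure the benchmarking framework reports
# after its own repeated executions. Illustrative numbers, not measured data.
runs = [
    {"parse": 10.0, "render": 50.0},
    {"parse": 11.0, "render": 50.5},
    {"parse": 9.0, "render": 49.5},
]


def cv_per_benchmark(runs):
    """Group results by benchmark name, then compute the CV across runs."""
    by_benchmark = defaultdict(list)
    for run in runs:
        for name, value in run.items():
            by_benchmark[name].append(value)
    # Sample standard deviation over the per-run results, divided by their mean.
    return {
        name: statistics.stdev(values) / statistics.fmean(values)
        for name, values in by_benchmark.items()
    }


for name, cv in cv_per_benchmark(runs).items():
    print(f"{name}: {cv:.1%}")
```

With these made-up inputs, `parse` varies by 10% across runs while `render` varies by 1%, even though the absolute spread of `render` is only half as large. This is the kind of comparison the relative metric makes possible.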