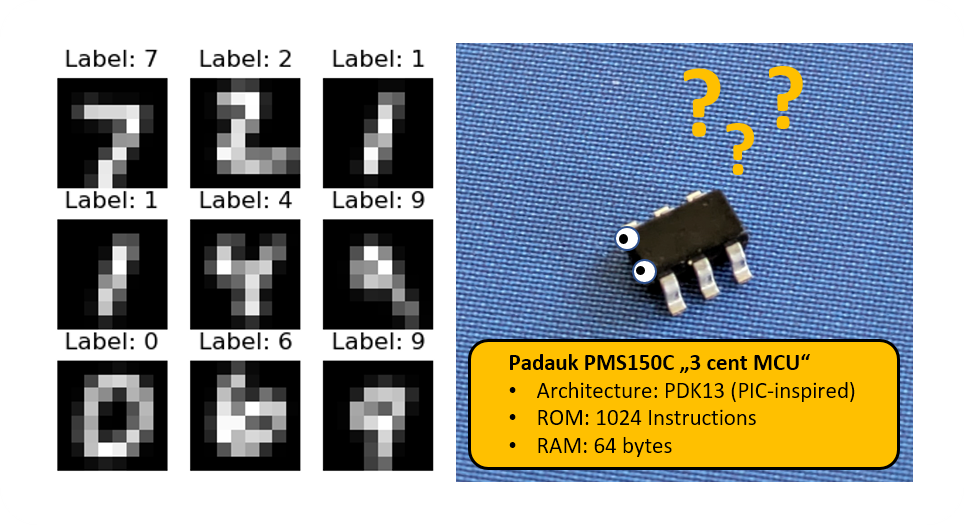

Neural Networks (MNIST inference) on the “3-cent” Microcontroller

Buoyed by the surprisingly good performance of neural networks with quantization-aware training on the CH32V003, I wondered how far this could be pushed. How much can we compress a neural network while still achieving good test accuracy on the MNIST dataset? At the absolute low end of microcontrollers, there is hardly a more compelling target than the Padauk 8-bit microcontrollers. These are optimized for the simplest and lowest-cost applications there are. The smallest device in the portfolio, the PMS150C, sports 1024 13-bit words of one-time-programmable memory and 64 bytes of RAM, more than an order of magnitude less than the CH32V003. In addition, it has a proprietary accumulator-based 8-bit architecture, as opposed to the much more powerful RISC-V instruction set of the CH32V003.

On the CH32V003 I used MNIST samples that were downscaled from 28×28 to 16×16, so that every sample takes 256 bytes of storage. This is quite acceptable when 16 kB of flash is available, but with only 1 kword of ROM, it is too much. Therefore I started by downscaling the dataset to 8×8 pixels.
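To make the downscaling step concrete, here is a minimal sketch of one way to reduce a 28×28 sample to 8×8. The resampling method is an assumption on my part for illustration: since 28 is not divisible by 8, the sketch crops the central 24×24 region and averages each 3×3 block. The function name `downscale_to_8x8` and the synthetic test image are hypothetical, and any other resize filter would serve equally well.

```python
def downscale_to_8x8(img28):
    """Downscale a 28x28 grayscale image (list of rows, values 0..255) to 8x8.

    Assumed method: crop the central 24x24 region, then average 3x3 blocks.
    """
    if len(img28) != 28 or any(len(row) != 28 for row in img28):
        raise ValueError("expected a 28x28 image")
    out = []
    for by in range(8):
        row = []
        for bx in range(8):
            total = 0
            # Sum the 3x3 block; the offset of 2 skips the cropped border.
            for dy in range(3):
                for dx in range(3):
                    total += img28[2 + 3 * by + dy][2 + 3 * bx + dx]
            row.append(total // 9)  # integer mean keeps values in 0..255
        out.append(row)
    return out


# Synthetic stand-in for an MNIST digit: a bright vertical bar.
sample = [[0] * 28 for _ in range(28)]
for y in range(8, 20):
    for x in range(12, 16):
        sample[y][x] = 255

small = downscale_to_8x8(sample)
for row in small:
    print(" ".join(f"{v:3d}" for v in row))
```

The result has 64 pixels per sample instead of the 256 of the 16×16 version, a fourfold reduction before any further change to the bit depth per pixel.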