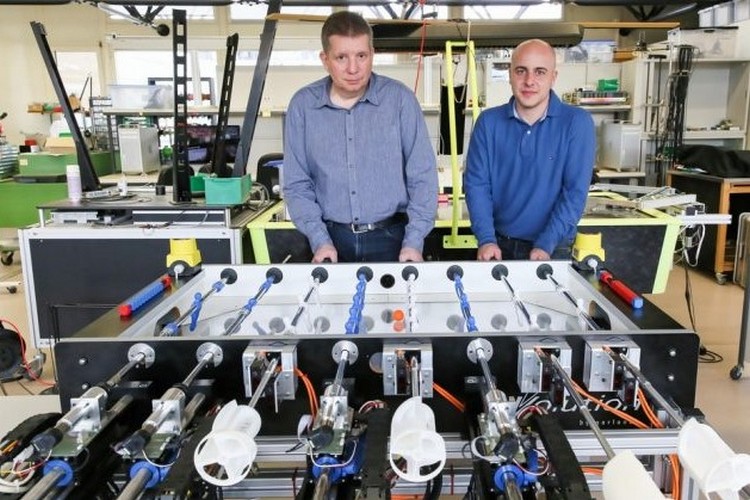

Can LLMs Make Robots Smarter?

Advances in robotics have arrived at a fast and furious pace. Yet, for all their remarkable capabilities, these machines remain fairly dumb. They understand only a few human commands, they cannot adapt to conditions that fall outside their rigid programming, and they are unable to adjust to events on the fly.

These challenges are prompting researchers to explore ways to build large language models (LLMs) into robotic devices. These semantic frameworks, which would serve as something resembling a “brain,” could imbue robotics with conversational skills, better reasoning, and an ability to process complex commands—whether a request to prepare an omelet or tend to a patient in a care facility.

“Adding LLMs could fundamentally change the way robots operate and how humans interact with them,” said Anirudha Majumdar, an associate professor in the Department of Mechanical and Aerospace Engineering at Princeton University. “An ability to process open-ended instructions via natural language has been a grand ambition for decades. It is now becoming feasible.”

The ultimate goal is to develop “agentic computing” systems that use LLMs to power robots through complex scenarios that require numerous steps. Yet, developing these more advanced robots is fraught with obstacles. For one thing, GPT and other models lack grounding—the context required to address real-world situations. For another, AI is subject to errors and fabrications, also known as hallucinations. This could lead to unexpected and even disastrous outcomes—including unintentionally injuring or killing humans.
