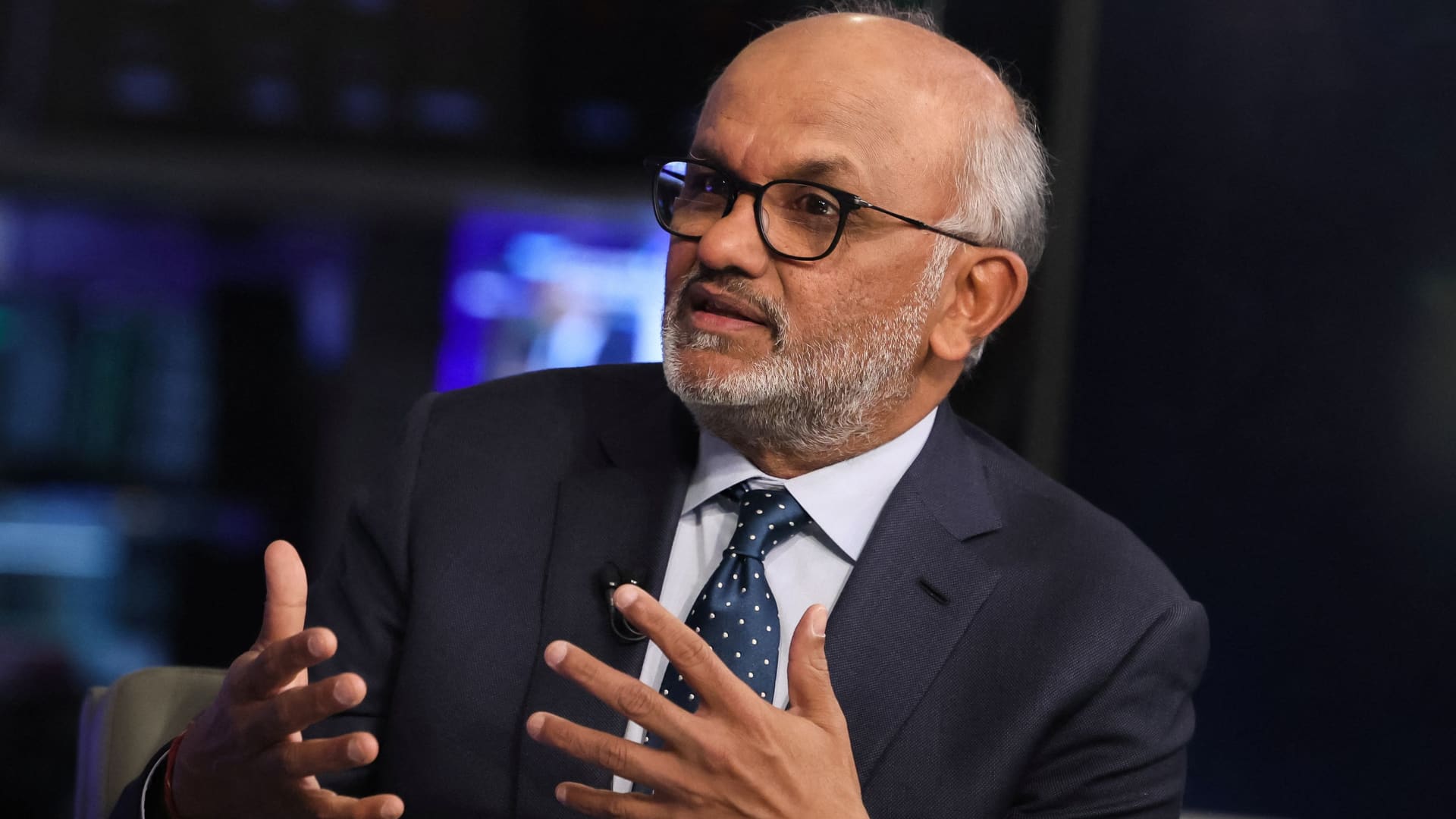

Cerebras Demonstrates Trillion Parameter Model Training on a Single CS-3 System

SUNNYVALE, CA AND VANCOUVER – December 10, 2024 – Today at NeurIPS 2024, Cerebras Systems, the pioneer in accelerating generative AI, announced a groundbreaking achievement in collaboration with Sandia National Laboratories: a successful demonstration of training a 1 trillion parameter AI model on a single CS-3 system. Trillion parameter models represent the state of the art in today’s LLMs and typically require thousands of GPUs and dozens of hardware experts to train. By leveraging Cerebras’ Wafer Scale Cluster technology, researchers at Sandia were able to initiate training on a single AI accelerator – a one-of-a-kind achievement for frontier model development.

“Traditionally, training a model of this scale would require thousands of GPUs, significant infrastructure complexity, and a team of AI infrastructure experts,” said Sandia researcher Siva Rajamanickam. “With the Cerebras CS-3, the team was able to achieve this feat on a single system with no changes to model or infrastructure code. The model was then scaled up seamlessly to 16 CS-3 systems, demonstrating a step-change in the linear scalability and performance of large AI models, thanks to the Cerebras Wafer-Scale Cluster.”

Trillion parameter models require terabytes of memory, thousands of times more than what’s available on a single GPU. Thousands of GPUs must be procured and connected before a single training step or model experiment can run. The Cerebras Wafer Scale Cluster uses a unique, terabyte-scale external memory device called MemoryX to store model weights, making trillion parameter models as easy to train as a small model on a GPU.
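For readers who want to see where the "terabytes of memory" figure comes from, the arithmetic can be sketched in a few lines of Python. This is a rough illustration only: the per-parameter byte counts below are common rules of thumb for 16-bit weights and mixed-precision Adam training, not numbers from Cerebras or Sandia, and the function name is hypothetical.

```python
def training_memory_terabytes(num_params: int, bytes_per_param: int) -> float:
    """Return the memory footprint in decimal terabytes (1 TB = 10**12 bytes)."""
    return num_params * bytes_per_param / 10**12


NUM_PARAMS = 10**12  # 1 trillion parameters, as in the announcement

# Assumptions (not from the announcement):
# - weights stored in a 16-bit format: 2 bytes per parameter
# - mixed-precision Adam training state: 16 bytes per parameter
#   (2 for 16-bit weights, 2 for 16-bit gradients, 4 for a 32-bit weight copy,
#    and 4 + 4 for the two 32-bit Adam moment estimates)
WEIGHTS_ONLY_BYTES = 2
MIXED_PRECISION_ADAM_BYTES = 16

weights_tb = training_memory_terabytes(NUM_PARAMS, WEIGHTS_ONLY_BYTES)
training_tb = training_memory_terabytes(NUM_PARAMS, MIXED_PRECISION_ADAM_BYTES)

print(f"Weights only:        {weights_tb:.1f} TB")
print(f"Full training state: {training_tb:.1f} TB")
```

Under these assumptions the weights alone occupy about 2 TB and the full training state about 16 TB, before counting activations.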
