5 ways to reduce the risk and impact of LLM hallucinations | Chris Lovejoy

Control of output is high if the LLM output is constrained, e.g. the LLM must choose one of three discrete options.
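A minimal sketch of constraining output, assuming the LLM has been prompted to answer with a single word from a fixed set. The `Decision` options and the `parse_constrained_output` helper are hypothetical illustrations, not part of any particular library. Anything outside the allowed set is rejected rather than trusted, so the caller can retry or hand the case to a person.

```python
from enum import Enum
from typing import Optional


class Decision(Enum):
    # Hypothetical discrete options; a real task would define its own set.
    APPROVE = "approve"
    DENY = "deny"
    ESCALATE = "escalate"


def parse_constrained_output(raw: str) -> Optional[Decision]:
    """Map raw LLM text onto one of the allowed options, or return None if it doesn't match."""
    try:
        # Enum lookup by value raises ValueError for anything outside the allowed set.
        return Decision(raw.strip().lower())
    except ValueError:
        return None


print(parse_constrained_output(" Approve\n"))  # Decision.APPROVE
print(parse_constrained_output("I think we should approve"))  # None
```

Because the check is an exact match against a small set, a free-text or hallucinated answer cannot pass as a valid decision; it surfaces as `None` instead.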

I currently work at Anterior, a $95m start-up using LLMs to automate decisions in healthcare administration. We deliberately chose to operate in a space where those checks and balances already exist.

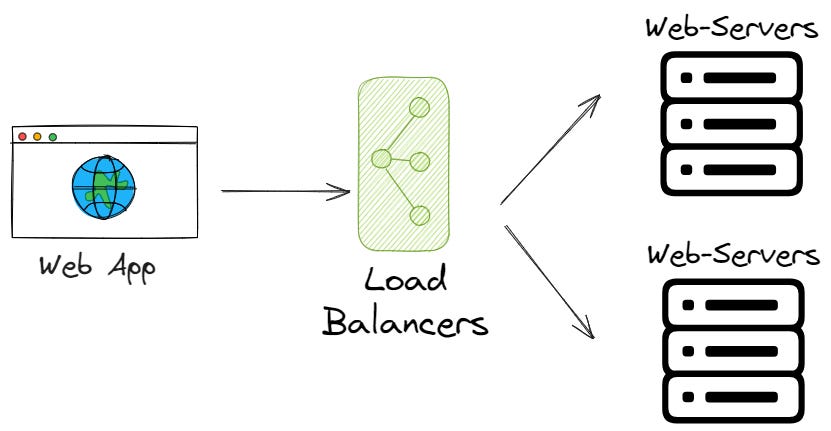

The existing flow of prior authorisation: when an administrative staff member or nurse thinks there isn't enough evidence to support a treatment decision, the case is escalated to a doctor.

AI slots neatly into this flow, which now becomes: if the AI pipeline thinks there isn't enough evidence to support a treatment decision, the case is escalated to a doctor.
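The routing rule above can be sketched as a single function. This is a hypothetical illustration, not Anterior's implementation: `AIAssessment`, `route_request` and the returned labels are made up, and the sketch assumes that requests with sufficient evidence go on to approval. It also assumes that unparseable pipeline output is treated the same as insufficient evidence, so any failure falls back to the existing doctor review.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIAssessment:
    # Hypothetical pipeline output for one prior-authorisation request.
    request_id: str
    # True/False from the pipeline; None if its output could not be parsed (assumption).
    evidence_sufficient: Optional[bool]


def route_request(assessment: AIAssessment) -> str:
    """Route a request the way the human flow does: doubtful cases go to a doctor."""
    # Only an explicit "sufficient" verdict bypasses the doctor.
    if assessment.evidence_sufficient is True:
        return "approve"
    # Insufficient evidence or unusable output both fall back to the existing human check.
    return "escalate_to_doctor"


for a in [AIAssessment("r1", True), AIAssessment("r2", False), AIAssessment("r3", None)]:
    print(a.request_id, route_request(a))
```

Defaulting every non-positive case to escalation means the AI can only remove work from the doctor's queue when it is explicitly confident; it never removes the doctor from a case it is unsure about.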

In my experience, LLMs are most likely to hallucinate when they're stretched by what they're being asked to do, i.e. when the task is at the limit of their capabilities.

This also helps you understand which steps the model struggles with. You can monitor this by examining the inputs and outputs of each step across a set of examples.
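A minimal sketch of per-step monitoring, assuming the pipeline is a sequence of named steps where each step's output feeds the next. The step functions are toy stand-ins for LLM calls, and `run_pipeline_with_trace` and `collect_by_step` are hypothetical helpers. Grouping the records by step puts every input and output for one step side by side, which makes it easier to spot the step where outputs start to go wrong.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List, Tuple

# A step is a (name, function) pair; in a real pipeline each function would wrap an LLM call.
Step = Tuple[str, Callable[[Any], Any]]


def run_pipeline_with_trace(steps: List[Step], initial_input: Any) -> Tuple[Any, List[Dict[str, Any]]]:
    """Run steps in order, recording each step's input and output."""
    trace: List[Dict[str, Any]] = []
    current = initial_input
    for name, fn in steps:
        output = fn(current)
        trace.append({"step": name, "input": current, "output": output})
        current = output  # each step's output feeds the next step
    return current, trace


def collect_by_step(steps: List[Step], examples: List[Any]) -> Dict[str, List[Tuple[Any, Any]]]:
    """Run the pipeline over many examples and group (input, output) pairs by step name."""
    by_step: Dict[str, List[Tuple[Any, Any]]] = defaultdict(list)
    for example in examples:
        _, trace = run_pipeline_with_trace(steps, example)
        for record in trace:
            by_step[record["step"]].append((record["input"], record["output"]))
    return dict(by_step)


# Toy stand-ins for LLM-backed steps (hypothetical, for illustration only).
steps: List[Step] = [
    ("extract_keywords", lambda text: [w for w in text.lower().split() if len(w) > 3]),
    ("check_evidence", lambda words: "sufficient" if "scan" in words else "insufficient"),
]
examples = ["Patient had an MRI scan", "Patient reports pain"]

by_step = collect_by_step(steps, examples)
for step_name, pairs in by_step.items():
    for step_input, step_output in pairs:
        print(f"{step_name}: {step_input!r} -> {step_output!r}")
```

Here the `extract_keywords` step silently drops "MRI" because of its length filter; reviewing that step's outputs in isolation exposes the problem even though the final answer for the first example still looks right.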