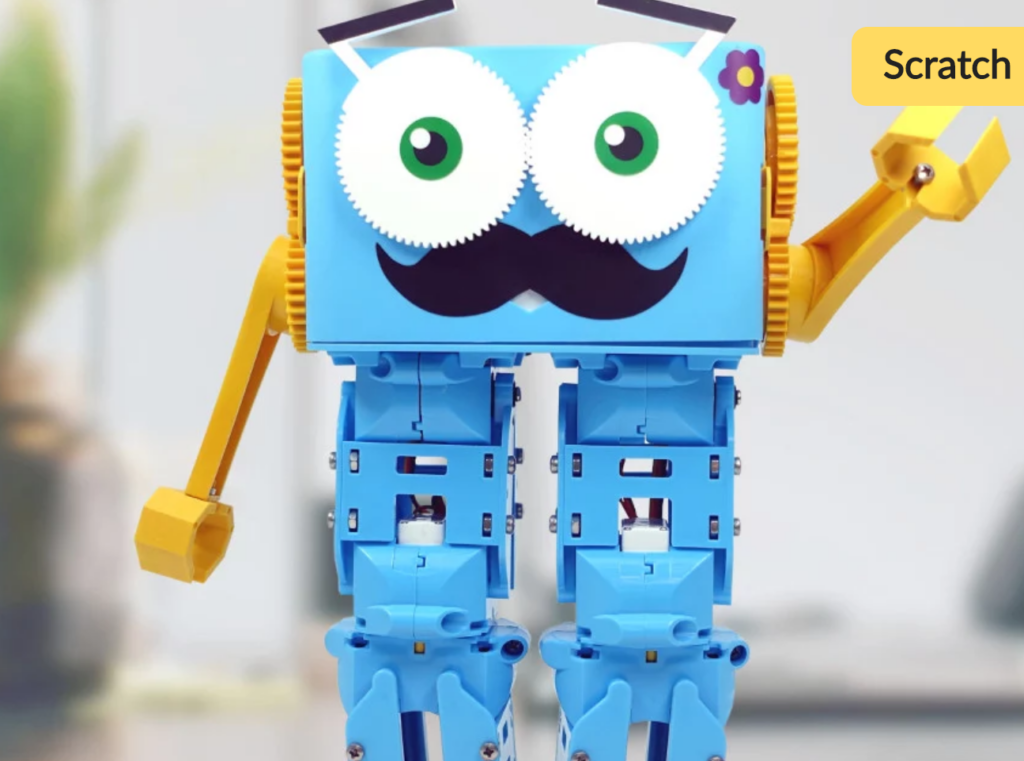

Meet Grasso the Yard Robot

I, like many other people on the planet, have been following the recent development of Large Language Models (LLMs) like ChatGPT and friends, and it seemed like a good time to try something fun. I’ve always liked the idea of ‘independent’ robots – think Bender from Futurama, not some sad robot always talking about its creator or questioning its existence. I got a Lego Mindstorms kit for Christmas about 25 years ago, and the first thing I did was build a robot ‘hamster’ that just hung out and wandered around a little pen in my room. A ton of great sci-fi written from the perspective of robots has also come out recently (go to your library and get something by Ann Leckie or Becky Chambers!). So with those very vague ideas for inspiration, what can we do?

Who says AGI has to be super intelligent just to be A, G and I? Grasso is driven by a kind of Python ‘madlib’ wrapped around two LLMs (one multi-modal, one text-only). The outer loop takes a photo with its webcam and feeds it into the multi-modal LLM to generate a scene description. That scene description is then inserted into a prompt (“This is what you currently see with your robot eyes…”) that ends with “Choose your next action” and presents a list of actions the robot can take. Some of these are ‘direct’ commands, and others are ‘open ended’ and let Grasso finish the action prompt however it chooses.

I wanted Grasso to be entirely ‘local’ (it’s ultimately meant to live off solar power in my yard, after all!), which puts a lot of limitations on what I can get away with. A 4k-token context limit (and the underpowered CPU running things) means I needed to get creative. A core part of Grasso is that it’s ‘stateful’ – the prompt incorporates both its two most recent actions and a bank of 6 ‘core memories’ that Grasso can choose to update.
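To make the loop concrete, here is a minimal Python sketch of one pass through it. It is not Grasso's actual code. The class and function names, the action list, and all prompt wording other than the two quoted fragments are assumptions made up for illustration. The camera and the two LLMs are passed in as plain callables, so the sketch runs without any hardware or model.

```python
from collections import deque
from typing import Callable

MAX_CORE_MEMORIES = 6    # size of the 'core memory' bank described above
MAX_RECENT_ACTIONS = 2   # only the two most recent actions go in the prompt

# Hypothetical action names; the post does not list the real ones.
DIRECT_ACTIONS = ["MOVE FORWARD", "TURN LEFT", "TURN RIGHT"]
OPEN_ENDED_ACTIONS = ["SAY:", "REMEMBER:"]  # the LLM finishes these itself


class RobotState:
    """Everything that survives from one loop iteration to the next."""

    def __init__(self) -> None:
        # A bounded deque silently drops the oldest action when full.
        self.recent_actions: deque = deque(maxlen=MAX_RECENT_ACTIONS)
        self.core_memories = ["(empty)"] * MAX_CORE_MEMORIES

    def record_action(self, action: str) -> None:
        self.recent_actions.append(action)

    def update_memory(self, slot: int, text: str) -> None:
        self.core_memories[slot] = text


def build_prompt(scene: str, state: RobotState) -> str:
    """Fill the 'madlib' template with the scene, memories, and actions."""
    memories = "\n".join(
        f"{i + 1}. {m}" for i, m in enumerate(state.core_memories)
    )
    recent = "\n".join(state.recent_actions) or "(none yet)"
    actions = "\n".join(f"- {a}" for a in DIRECT_ACTIONS + OPEN_ENDED_ACTIONS)
    return (
        f"This is what you currently see with your robot eyes: {scene}\n\n"
        f"Your core memories:\n{memories}\n\n"
        f"Your most recent actions:\n{recent}\n\n"
        f"Available actions:\n{actions}\n\n"
        "Choose your next action:"
    )


def step(
    state: RobotState,
    capture: Callable[[], bytes],       # stand-in for the webcam
    describe: Callable[[bytes], str],   # stand-in for the multi-modal LLM
    choose: Callable[[str], str],       # stand-in for the text-only LLM
) -> str:
    """One pass of the outer loop: look, build the prompt, pick an action."""
    scene = describe(capture())
    action = choose(build_prompt(scene, state)).strip()
    state.record_action(action)
    return action
```

Calling `step(state, capture, describe, choose)` in a loop with real camera and model wrappers would give the overall shape described above. Keeping the state down to two actions and six short memories is what keeps the prompt small enough for a 4k-token context.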