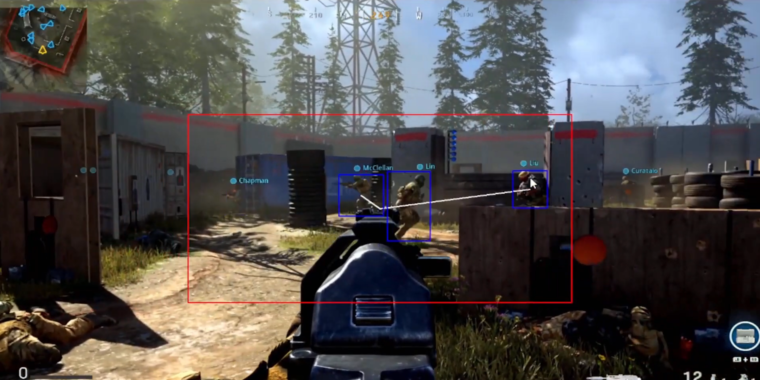

YOLOv3: Sparsifying to Improve Object Detection Performance

Neural Magic creates models and recipes that allow anyone to plug in their data and leverage SparseML’s recipe-driven approach on top of Ultralytics’ robust training pipelines for the popular YOLOv3 object detection network. Sparsifying involves removing redundant information from neural networks using algorithms such as pruning and quantization, among others. This sparsification process results in faster inference and smaller file sizes for deployments.

This page walks through the following use cases for trying out the sparsified YOLOv3 models:

- Run the models for inference in deployment or applications
- Train the models on new datasets
- Download the models from the SparseZoo
- Benchmark the models in the DeepSparse Engine

Run

- Example Application
- Example Deployment

Train

- Applying a Recipe

Download

Table not loading? View full table here.

The model stubs from the above table can be used with the SparseZoo Python API for downloading:

    from sparsezoo.models import Zoo

    # Change out the stub variable from the above table to download the desired model
    stub = "zoo:cv/detection/yolo_v3-spp/pytorch/ultralytics/coco/pruned_quant-aggressive_94"
    model = Zoo.download_model_from_stub(stub, override_parent_path="downloads")

    # Print the download path of the model
    print(model.dir_path)

Benchmark

Batch size 1 performance comparisons for YOLOv3 on common deployments. Click the chart or here for more detailed information.

Benchmarks within the DeepSparse Engine can be run by using the appropriate stub for each model with the following code:

    from sparsezoo.models import Zoo
    from deepsparse import compile_model

    batch_size = 1
    stub = "zoo:cv/detection/yolo_v3-spp/pytorch/ultralytics/coco/pruned_quant-aggressive_94"

    # Download model and compile as optimized executable for your machine
    model = Zoo.download_model_from_stub(stub, override_parent_path="downloads")
    engine = compile_model(model, batch_size=batch_size)

    # Fetch sample input and run a benchmark
    inputs = model.data_inputs.sample_batch(batch_size=batch_size)
    benchmarks = engine.benchmark(inputs)
    print(benchmarks)
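The introduction describes sparsification as removing redundant information through pruning and quantization. To make those two ideas concrete, here is a toy sketch in plain Python on a flat list of weights. It is only an illustration of the general techniques (one-shot magnitude pruning and symmetric int8 quantization); the function names are hypothetical, and this is not how SparseML's recipes or the YOLOv3 models on this page implement them.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    num_to_prune = int(len(weights) * sparsity)
    # Indices ordered by ascending magnitude; the first num_to_prune are zeroed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:num_to_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric quantization: map floats to integer codes in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from integer codes."""
    return [c * scale for c in codes]


# Illustrative values only; not taken from any real model.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.0, -0.9]
pruned = magnitude_prune(weights, sparsity=0.5)
codes, scale = quantize_int8(pruned)
restored = dequantize(codes, scale)

print("pruned:  ", pruned)
print("codes:   ", codes)
print("restored:", restored)
```

Pruning leaves many exact zeros that a sparsity-aware runtime can skip, while quantization stores each remaining weight as a small integer plus one shared scale. Together these are the sources of the speed and file-size gains the page mentions.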

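The stub used in the Download and Benchmark examples is a slash-separated path behind a `zoo:` prefix. When switching between models it can help to split a stub into its parts and check that it is well formed before attempting a download. The sketch below does this with string handling only. The field names are labels assumed here for illustration, not identifiers from the SparseZoo API, and the seven-segment layout is inferred from the single stub shown on this page.

```python
from typing import Dict

# Hypothetical labels for the seven path segments seen in the stub on this page.
STUB_FIELDS = (
    "domain",
    "sub_domain",
    "architecture",
    "framework",
    "repo",
    "dataset",
    "sparse_tag",
)


def parse_stub(stub: str) -> Dict[str, str]:
    """Split a 'zoo:' stub into labeled segments without contacting the SparseZoo."""
    prefix = "zoo:"
    if not stub.startswith(prefix):
        raise ValueError(f"stub must start with {prefix!r}: {stub!r}")
    parts = stub[len(prefix):].split("/")
    if len(parts) != len(STUB_FIELDS) or not all(parts):
        raise ValueError(
            f"expected {len(STUB_FIELDS)} non-empty segments, got {parts!r}"
        )
    return dict(zip(STUB_FIELDS, parts))


stub = "zoo:cv/detection/yolo_v3-spp/pytorch/ultralytics/coco/pruned_quant-aggressive_94"
fields = parse_stub(stub)
for name, value in fields.items():
    print(f"{name}: {value}")
```

A check like this catches copy-paste mistakes, such as a missing prefix or a dropped segment, before any network call is made.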
