Quantifying the algorithmic improvement from reasoning models

Almost a year ago, OpenAI introduced o1, the world’s first “reasoning model”. Compared to its likely predecessor GPT-4o, o1 is more heavily optimized to do multi-step reasoning when solving problems. So it’s perhaps no surprise that it does much better on common math and science benchmarks.

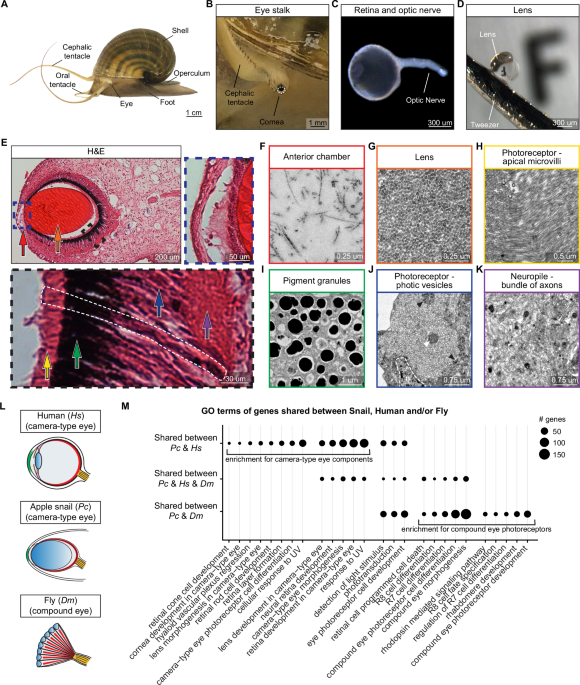

o1 performs far better than GPT-4o on GPQA Diamond (PhD-level multiple-choice science questions) and MATH Level 5 (high-school math competition problems).1 Data is taken from Epoch AI’s benchmarking hub.

By itself, this performance improvement was already a big deal. But even more important is how it was achieved: not by using a lot more training compute, but through a major algorithmic innovation. o1 went through a period of “reasoning training”, where its chain-of-thought was fine-tuned on reasoning traces and optimized using reinforcement learning. This allows the model to spend more time “reasoning” before responding to user queries.

But how can we quantify the importance of this algorithmic innovation? One way is to express it as a hypothetical increase in training compute. Specifically, we exploit the empirically robust relationship between pre-training compute and benchmark performance: more compute leads to better scores. This relationship lets us ask a key question: how much more pre-training compute would GPT-4o have needed to match o1’s performance?2 We call this the “compute-equivalent gain” (CEG), and it is a standard way of quantifying the contribution from algorithmic innovations.
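
To make the idea concrete, here is a minimal sketch of how a CEG could be computed. It rests on assumptions that are not from this article: that benchmark accuracy follows a logistic curve in log10 of pre-training compute, and that a few non-reasoning models are available to fit that curve. The function names, compute values, and accuracies are all hypothetical and chosen only for illustration. They are not measurements of GPT-4o or o1.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def logit(p):
    return math.log(p / (1.0 - p))


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    b = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    a = mean_y - b * mean_x
    return a, b


def compute_equivalent_gain(baseline_points, base_compute, target_accuracy):
    """Factor by which base_compute must grow to reach target_accuracy.

    baseline_points: (pre-training compute in FLOP, accuracy) pairs for
    models WITHOUT the innovation. Assumes logit(accuracy) is linear in
    log10(compute), which is a modeling assumption made for this sketch.
    """
    xs = [math.log10(compute) for compute, _ in baseline_points]
    ys = [logit(accuracy) for _, accuracy in baseline_points]
    a, b = fit_line(xs, ys)
    # Invert the fitted curve: how much compute gives the target accuracy?
    needed_log10_compute = (logit(target_accuracy) - a) / b
    return 10 ** (needed_log10_compute - math.log10(base_compute))


# Hypothetical baseline models lying exactly on a logistic curve.
baseline_points = [(10.0 ** e, sigmoid(e - 25.0)) for e in (24, 25, 26)]

# Hypothetical case: the base model used 1e25 FLOP, and the reasoning
# variant reaches the accuracy the curve predicts at 1e26 FLOP.
ceg = compute_equivalent_gain(
    baseline_points, base_compute=1e25, target_accuracy=sigmoid(1.0)
)
print(f"compute-equivalent gain: about {ceg:.1f}x")
```

In this toy setup the reasoning variant matches a baseline trained with ten times the compute, so the CEG comes out at roughly 10. With real data the fitted curve is noisy, so any such estimate would carry substantial uncertainty.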