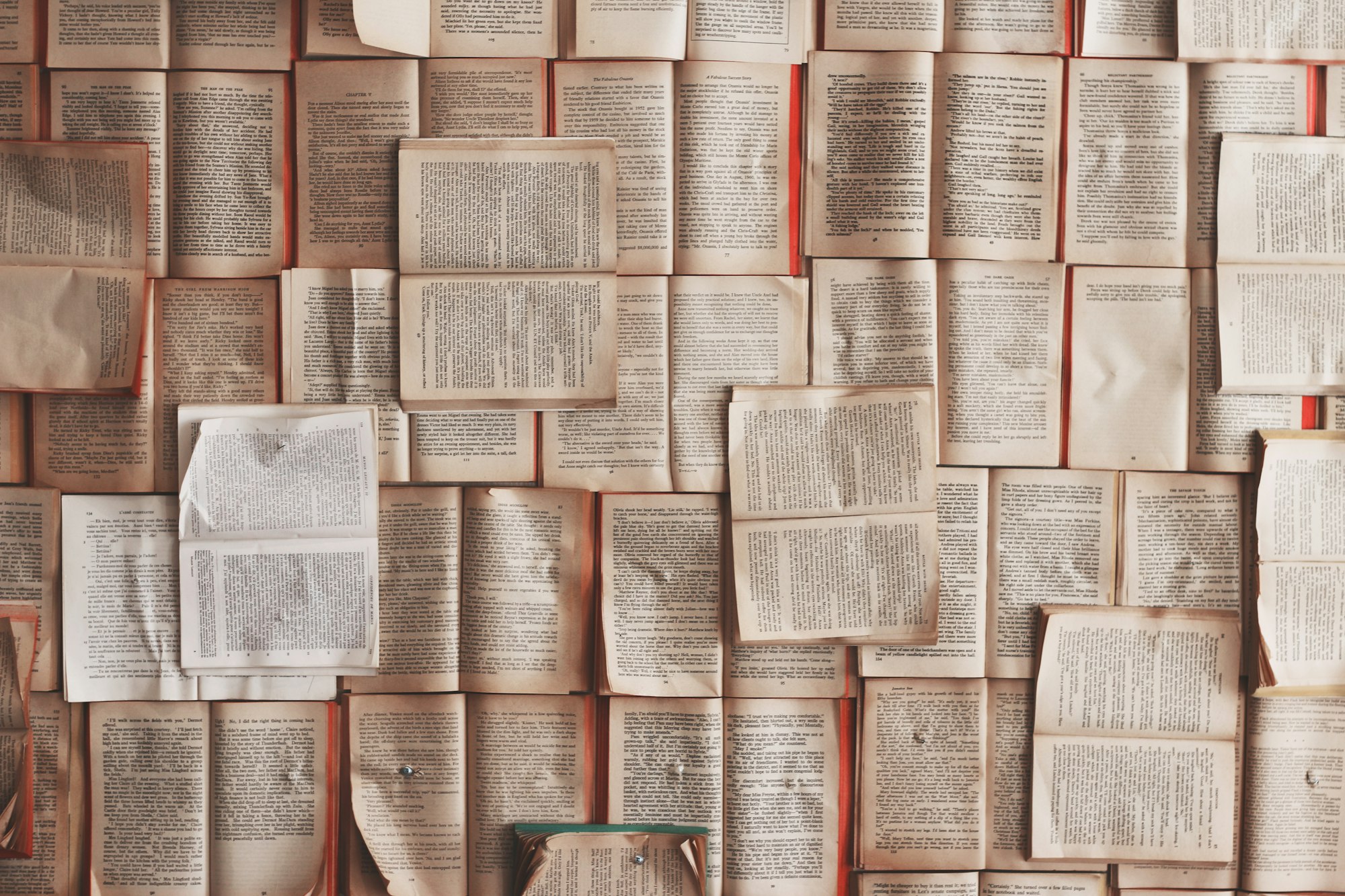

How to ensure data quality in the era of Big Data

The era of Big Data

A little over a decade has passed since The Economist warned us that we would soon be drowning in data. The modern data stack has emerged as a proposed life jacket for this data flood, spearheaded by Silicon Valley startups such as Snowflake, Databricks and Confluent.

Today, any entrepreneur can sign up for BigQuery or Snowflake and, in a matter of hours, have a data solution that can scale with their business. The emergence of cheap, flexible and scalable data storage solutions was largely a response to changing needs spurred by the massive explosion of data.

Currently, the world produces 2.5 quintillion bytes of data daily (a quintillion has 18 zeros). The explosion of data continues in the roaring ’20s, in both generation and storage: the amount of stored data is expected to keep doubling at least every four years. However, one integral part of modern data infrastructure still lacks solutions suited to the Big Data era and its challenges: monitoring of data quality and data validation.

In 2005, Tim O’Reilly published his groundbreaking article “What is Web 2.0?”, truly setting off the Big Data race. The same year, Roger Mougalas from O’Reilly introduced the term “Big Data” in its modern context — referring to a large set of data that is virtually impossible to manage and process using traditional BI tools.
